
[INTERSPEECH 2025 Series #9] Fairness in Dysarthric Speech Synthesis: Understanding Intrinsic Bias in Dysarthric Speech Cloning using F5-TTS


Interspeech is one of the premier international conferences dedicated to advancing and disseminating research in speech science and technology. It serves as a global platform where researchers, engineers, and industry professionals share cutting-edge innovations, methodologies, and applications related to speech communication. In this blog series, we introduce some of our research papers at INTERSPEECH 2025. Here is a list of them:

#5. SPCODEC: Split and Prediction for Neural Speech Codec (Samsung R&D Institute China-Beijing)

#9. Fairness in Dysarthric Speech Synthesis: Understanding Intrinsic Bias in Dysarthric Speech Cloning using F5-TTS (Samsung R&D Institute India-Bangalore)

Introduction

Voice cloning, especially zero-shot speech synthesis, has become one of the most exciting frontiers in speech technology. With just a few seconds of audio, modern voice cloning models can generate speech that mimics a person’s voice tone, rhythm, and style [1]. This opens up many possibilities:

- Digital avatars with human-like voices
- Custom TTS for accessibility or entertainment
- Rapid data augmentation for low-resource languages

But here’s the catch: is voice cloning fair when it is used for data augmentation? Do these systems perform equally well across speaker traits such as accent, gender, age, or vocal condition? In the context of zero-shot speech synthesis, fairness means that the model:

- Reproduces voices equitably across diverse speaker types
- Preserves key voice characteristics such as intelligibility, identity, and prosody
- Avoids biases that disproportionately affect certain groups

Bias in cloned speech can lead to:

- Misrepresentation of the voices of certain speaker groups
- Unequal performance in downstream tasks such as ASR or speaker verification

In this paper, we study the fairness of zero-shot speech synthesis models in the context of dysarthric speech cloning, where the models have not seen any dysarthric speech during training. Dysarthria, a motor speech disorder caused by neurological conditions, results in speech that is often slurred, slow, or difficult to understand, particularly in its more severe forms. Speech technologies such as ASR and speech enhancement systems can improve the lives of people with dysarthria by enabling them to communicate better. However, dysarthric speech poses significant challenges for developing assistive technologies, primarily because so little data is available. Recent advances in neural speech synthesis, especially zero-shot voice cloning, make it possible to generate synthetic speech for data augmentation; however, they may introduce biases against dysarthric speech.

Although zero-shot TTS systems are already used to augment dysarthric speech (and for other speech technologies), several critical questions about the effectiveness and fairness of dysarthric speech cloning remain unanswered:

- Can synthetic dysarthric speech maintain intelligibility, and how does it impact ASR performance?
- How well does the cloned speech retain speaker identity when replicating different severity levels of dysarthria?
- Do speech synthesis models exhibit bias across dysarthric severity levels in ways that could disadvantage certain user groups?

Our contributions

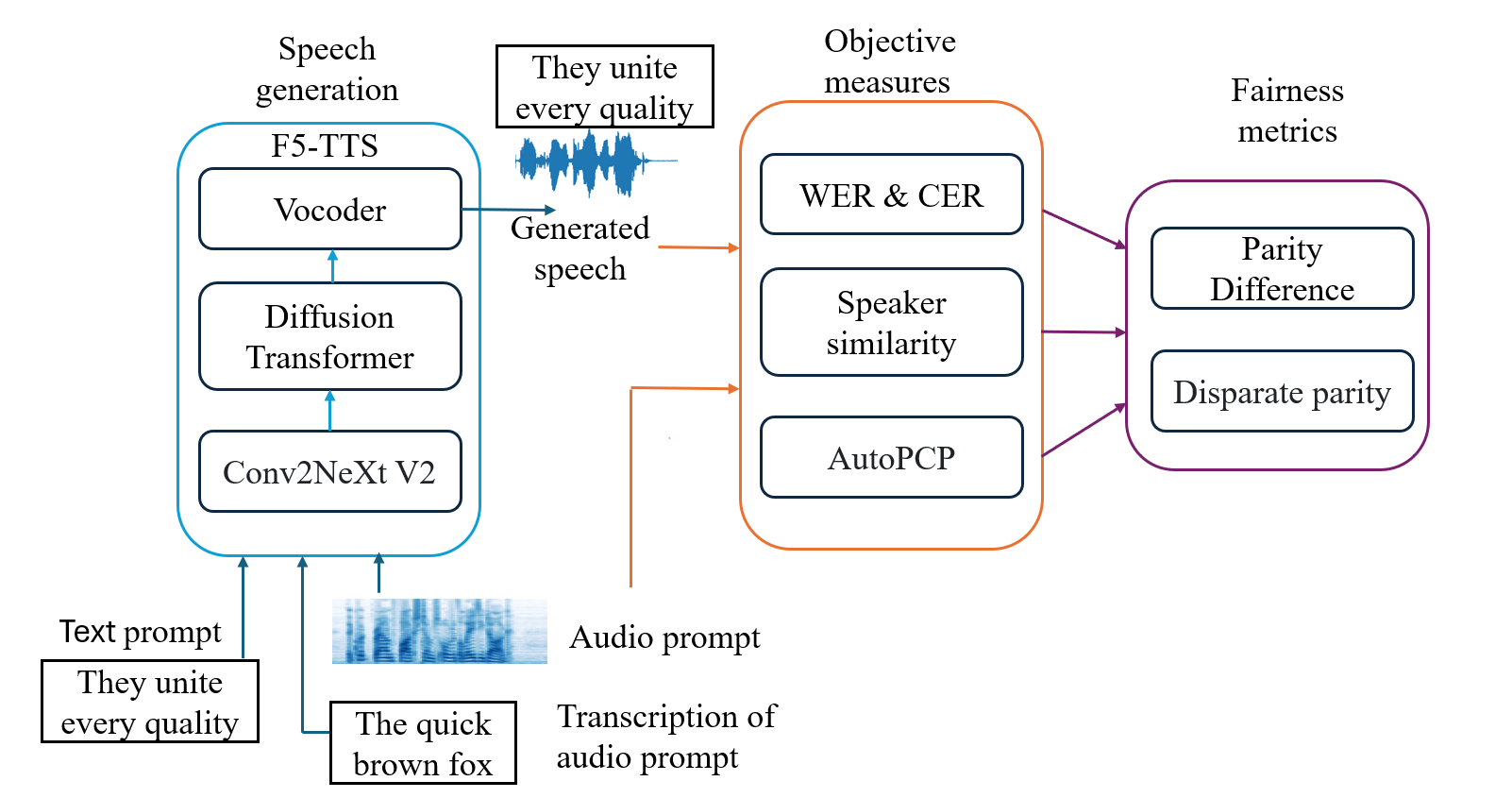

The proposed framework follows a systematic approach, as illustrated in Figure 1. In this study, F5-TTS [1], a zero-shot, non-autoregressive TTS system, is used to generate synthetic speech for speakers in the TORGO database. The F5-TTS model synthesizes speech from a few seconds of an audio prompt (the reference speech sample), the transcription of that prompt, and a text prompt for the speech to be generated. To objectively assess how well the generated speech preserves different levels of dysarthric severity in terms of intelligibility, speaker similarity, and prosodic similarity, we evaluate it using the following criteria:

- Intelligibility: The intelligibility of the generated samples is assessed using both word error rate (WER) and character error rate (CER). CER adds a finer-grained view by counting errors at the character level, which is particularly useful for capturing subtle distortions such as the mispronunciations often present in dysarthric speech. In this study, the HuBERT-large ASR model [2] fine-tuned on 960 hours of LibriSpeech is used to measure WER and CER.
- Speaker similarity (SIM-o): This is computed as the cosine similarity between the speaker embeddings of the reference audio prompt and the generated speech. To obtain SIM-o, we use a speaker verification model based on WavLM-large [3].
- Prosody similarity: Evaluating prosody similarity is crucial to ensure that the generated speech preserves the prosody of the original audio prompt. It is calculated by comparing the audio prompt with the generated speech using the AutoPCP_multilingual_v2 model [4].
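The metrics above can be sketched in plain Python. This is a minimal illustration, not the evaluation code used in the study: it assumes the ASR transcripts and speaker embeddings have already been produced by the models named above, and the helper names (`edit_distance`, `wer`, `cer`, `cosine_similarity`) are hypothetical. Ignoring spaces when computing CER is also an assumption; some toolkits count them.

```python
import math
from typing import Sequence


def edit_distance(ref: Sequence, hyp: Sequence) -> int:
    """Levenshtein distance between two token sequences."""
    prev = list(range(len(hyp) + 1))
    for i, r in enumerate(ref, start=1):
        curr = [i]
        for j, h in enumerate(hyp, start=1):
            cost = 0 if r == h else 1
            curr.append(min(prev[j] + 1,          # deletion
                            curr[j - 1] + 1,      # insertion
                            prev[j - 1] + cost))  # substitution or match
        prev = curr
    return prev[-1]


def wer(reference: str, hypothesis: str) -> float:
    """Word error rate: word-level edits divided by reference word count."""
    ref_words = reference.split()
    if not ref_words:
        raise ValueError("reference transcript is empty")
    return edit_distance(ref_words, hypothesis.split()) / len(ref_words)


def cer(reference: str, hypothesis: str) -> float:
    """Character error rate, ignoring spaces (an assumption of this sketch)."""
    ref_chars = list(reference.replace(" ", ""))
    if not ref_chars:
        raise ValueError("reference transcript is empty")
    hyp_chars = list(hypothesis.replace(" ", ""))
    return edit_distance(ref_chars, hyp_chars) / len(ref_chars)


def cosine_similarity(a: Sequence[float], b: Sequence[float]) -> float:
    """Cosine similarity between two embedding vectors of equal length."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0.0 or norm_b == 0.0:
        raise ValueError("embedding has zero norm")
    return dot / (norm_a * norm_b)
```

With these helpers, a single substituted word in a three-word reference gives a WER of 1/3, while the CER for the same pair is much smaller because only one character differs. This is the finer granularity that motivates reporting CER alongside WER.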

Figure 1. Framework proposed for assessing fairness in dysarthric speech generation using F5-TTS model.

Finally, we analysed the fairness of the zero-shot TTS (ZS-TTS) model across different severity categories using two metrics:

- Parity Difference (PD): the extent of similarity or difference in the objective measures between the healthy category and each severity category. A PD value of 0 indicates that the categories are treated similarly.
- Disparate Impact (DI): also called relative disparity, this is the ratio of each objective measure between the healthy category and each severity category. A DI value of 1 indicates no bias between the groups.
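A minimal sketch of the two fairness metrics follows. The post does not give the exact formulas, so this sketch assumes PD is the absolute difference and DI is the ratio of the severity group's score to the healthy group's score, for a measure where higher is better. The function names and the scores are hypothetical and are not results from the paper.

```python
def parity_difference(healthy_score: float, group_score: float) -> float:
    """Assumed form: absolute gap between healthy and group scores (0 = parity)."""
    return abs(healthy_score - group_score)


def disparate_impact(healthy_score: float, group_score: float) -> float:
    """Assumed form: group score relative to healthy score (1 = no bias)."""
    if healthy_score == 0:
        raise ValueError("healthy_score must be non-zero")
    return group_score / healthy_score


# Hypothetical per-category averages of a "higher is better" measure.
scores = {"healthy": 0.80, "low": 0.78, "mid": 0.72, "high": 0.60}

fairness = {
    group: {
        "PD": parity_difference(scores["healthy"], value),
        "DI": disparate_impact(scores["healthy"], value),
    }
    for group, value in scores.items()
    if group != "healthy"
}

for group, metrics in fairness.items():
    print(f"{group}: PD={metrics['PD']:.2f}, DI={metrics['DI']:.2f}")
```

Under these assumptions, a category whose score matches the healthy baseline yields PD = 0 and DI = 1, and both metrics move away from those values as the gap grows.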


Experimental Results

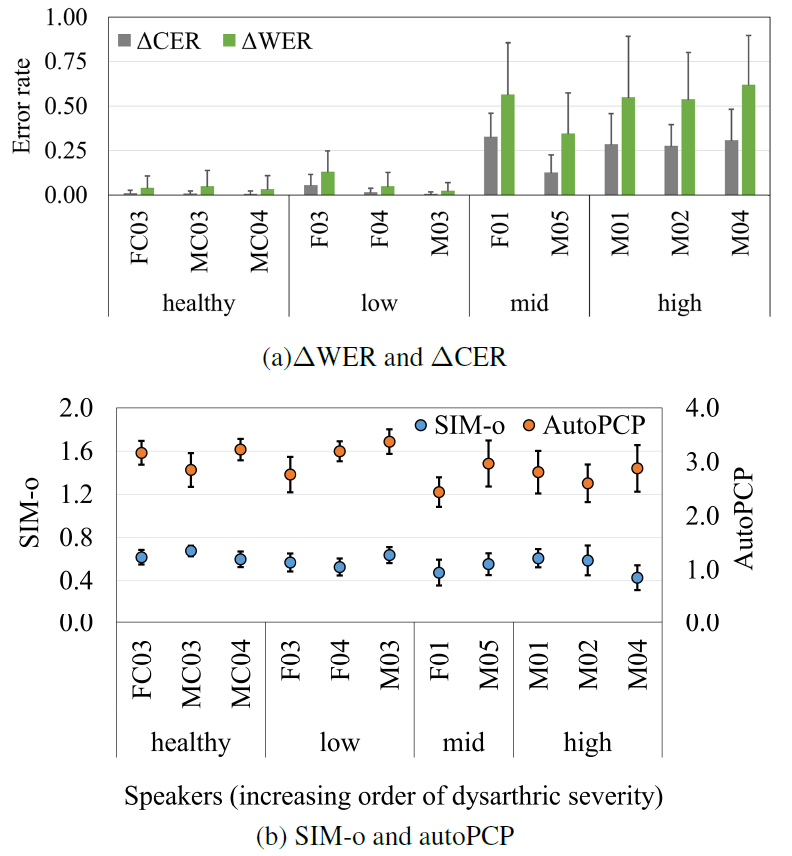

To assess how well the generated speech preserves dysarthric severity, the objective measures are computed between the reference audio from the TORGO dataset and the audio generated with F5-TTS. The results are presented in Figure 2.

Figure 2. Objective assessment of zero-shot synthetic dysarthric speech using F5-TTS.

Analysis of ΔWER and ΔCER in Dysarthric Speech

Figure 2(a) shows that ΔWER and ΔCER are consistently higher in the mid and high severity categories than in the healthy and low severity categories. The main reason for this bias is likely that the zero-shot voice-cloned samples do not accurately represent the reduced intelligibility of mid and high severity speech. As a result, the generated audio has a lower WER or CER than the reference.
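To make the Δ measures concrete, here is a small sketch of how a per-category ΔWER could be aggregated. The post does not define ΔWER formally; this sketch assumes it is the WER of the reference audio minus the WER of the cloned audio, which matches the observation that the cloned audio has the lower WER. The rows are hypothetical and are not results from the paper.

```python
from collections import defaultdict
from statistics import mean

# Hypothetical (severity, WER on reference audio, WER on cloned audio) rows.
utterances = [
    ("healthy", 0.05, 0.04),
    ("low", 0.10, 0.08),
    ("mid", 0.60, 0.20),
    ("high", 0.90, 0.30),
    ("high", 0.80, 0.40),
]


def mean_delta_by_group(rows):
    """Average (reference WER - generated WER) per severity category."""
    deltas = defaultdict(list)
    for group, ref_wer, gen_wer in rows:
        deltas[group].append(ref_wer - gen_wer)
    return {group: mean(values) for group, values in deltas.items()}


delta_wer = mean_delta_by_group(utterances)
for group, value in delta_wer.items():
    print(f"{group}: mean delta WER = {value:.2f}")
```

A large positive value for a category means the cloned speech is recognised far more easily than the original, so the clone has lost much of that category's severity.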

In Figure 2(b), the SIM-o and AutoPCP metrics assess speaker similarity and prosody preservation. The SIM-o values remain relatively stable across different severity levels, indicating that the synthesized speech maintains speaker identity. However, the AutoPCP scores show a decreasing trend for more severe dysarthric speakers, implying that the prosody of synthesized speech deviates from the original as dysarthric severity increases. Overall, these results suggest that while the zero-shot TTS system can reasonably maintain speaker characteristics, it struggles to fully preserve the intelligibility and prosody of more severe dysarthric speech.

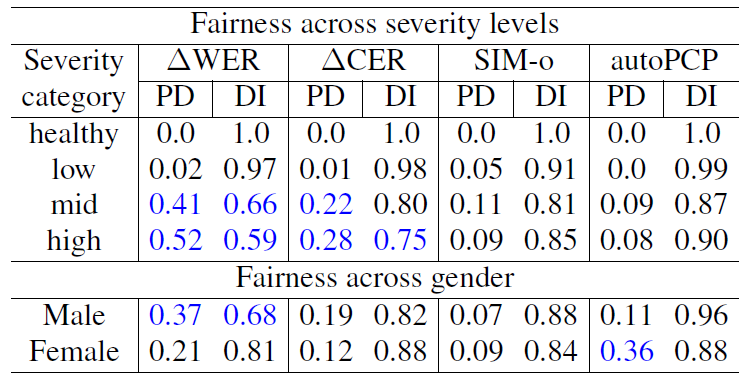

Fairness Analysis Across Severity Levels and Gender

In addition to our previous findings on the presence of biases in cloned dysarthric speech, this study employs fairness metrics to systematically analyze their impact across varying severity levels and gender categories, and the results are reported in Table 1. In general, speakers in the low severity category exhibit relatively clear speech patterns with only slight articulation difficulties, making their speech closely resemble that of healthy speakers. This is also reflected in the generated speech. As a result, minimal to no bias is observed in both PD and DI across all objective measures.

Minimal bias is also observed in the mid and high categories for speaker and prosodic similarity, with PD remaining close to 0 and DI close to 1 for both SIM-o and AutoPCP. In contrast, high disparities are observed in ΔWER for both the mid and high severity categories, where DI is below 0.66 and PD is above 0.41. For the high severity category, ΔCER, which focuses on character-level articulation errors, shows less disparity than ΔWER: DI improves by 27% and PD drops by 85.71%. Even so, ΔCER still shows bias in the high severity category, with a DI of 0.75.

Further, the fairness analysis across gender reveals a notable disparity, with male speakers exhibiting higher bias, particularly in intelligibility (ΔWER), while female speakers show higher bias in prosody (PD=0.36).

Table 1. Fairness analysis across dysarthric severity and gender categories.

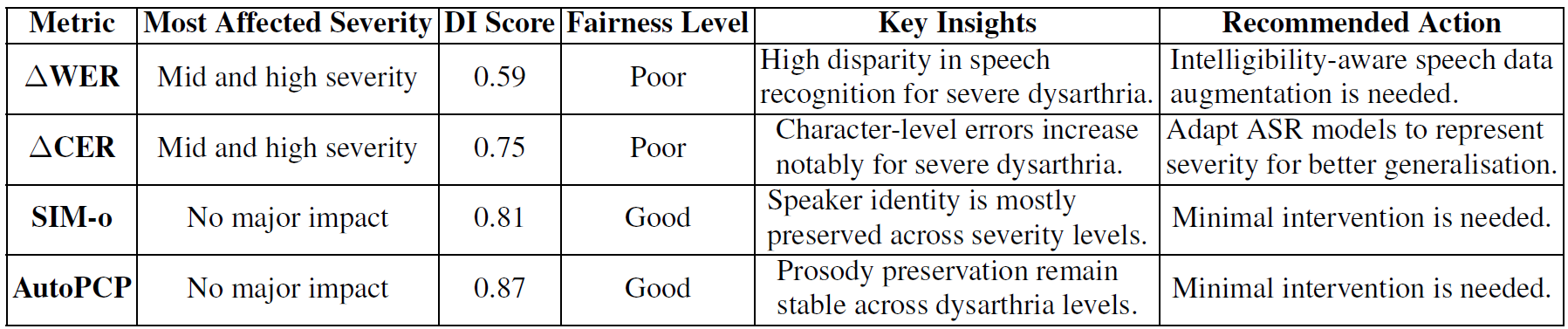

Observations based on Fairness Study: Based on the fairness analysis and the observations from downstream tasks, the key insights are presented in Table 2. As discussed in the above sections, the results confirm that speech intelligibility (in terms of WER and CER) is the most biased aspect of dysarthric speech synthesis, particularly for severe cases. Unlike intelligibility, speaker and prosody similarity remain relatively unbiased, with DI above 0.81. These findings emphasize the need for fairness-aware data augmentation when using zero-shot voice cloning systems to generate synthetic speech for severe dysarthric speakers.

Table 2. Key insights based on the fairness study. (Based on minimum DI score of each objective measure.)

Conclusion

In this paper, we presented a framework for evaluating biases in synthetic speech generated by F5-TTS for dysarthric speakers. Using fairness metrics, our analysis provides valuable insights into how dysarthric severity affects the preservation of intelligibility, speaker identity, and prosody in voice-cloned samples. While speaker similarity and prosodic information are retained across all severity levels, intelligibility is compromised in high severity cases with PD of 0.52 and DI of 0.59. This in turn affects the representation of higher dysarthric severities in data augmentation. Moreover, fine-tuning ASR models with such augmented data leads to performance degradation, particularly for individuals with more severe dysarthria. These findings strongly suggest the critical need for fairness-aware approaches in speech augmentation.

Link to the paper

References

[1] Y. Chen, Z. Niu, Z. Ma, K. Deng, C. Wang, J. Zhao, K. Yu, and X. Chen, “F5-TTS: A fairytaler that fakes fluent and faithful speech with flow matching,” arXiv preprint arXiv:2410.06885, 2024.

[2] W.-N. Hsu, B. Bolte, Y.-H. H. Tsai, K. Lakhotia, R. Salakhutdinov, and A. Mohamed, “HuBERT: Self-supervised speech representation learning by masked prediction of hidden units,” IEEE/ACM Transactions on Audio, Speech, and Language Processing, vol. 29, pp. 3451–3460, 2021.

[3] S. Chen, C. Wang, Z. Chen, Y. Wu, S. Liu, Z. Chen, J. Li, N. Kanda, T. Yoshioka, X. Xiao et al., “WavLM: Large-scale self-supervised pre-training for full stack speech processing,” IEEE Journal of Selected Topics in Signal Processing, vol. 16, pp. 1505–1518, 2022.

[4] L. Barrault, Y. A. Chung, M. C. Meglioli, D. Dale, N. Dong, M. Duppenthaler, P.-A. Duquenne, B. Ellis, H. Elsahar, J. Haaheim et al., “Seamless: Multilingual expressive and streaming speech translation,” arXiv preprint arXiv:2312.05187, 2023.

[5] Z. Jin, X. Xie, T. Wang, M. Geng, J. Deng, G. Li, S. Hu, and X. Liu, “Towards automatic data augmentation for disordered speech recognition,” in Proc. ICASSP. IEEE, 2024, pp. 10626–10630.