
Mobile Twin Recognition

Introduction

Almost all of us have one or more smartphones, where we keep personal data such as credit card information, photos, business contacts and so on. It is therefore important to protect this valuable information from unauthorized access.

Modern smartphones use face recognition technology to protect personal data. One of the hardest problems in facial recognition is twin differentiation. In recent years, an increasing number of mobile phones have been unlocked using the face of the phone owner’s sibling or twin (see Figure 1). Hundreds of video demonstrations are available on the internet.

Researchers at the University of Notre Dame tested how well people can differentiate identical twins and which facial traits are the most discriminative [1]. In the experiment, 25 volunteers from the university tried to classify 180 image pairs: 90 “match” pairs (same person) and 90 “non-match” pairs (identical twins). The researchers used a dataset collected at the Twins Days Festival in Twinsburg, Ohio, in August 2009 [2].

Figure 1. Examples of identical twin faces

In the first stage of the experiment, participants had 2 seconds to classify each pair; classification accuracy varied from 60.56% to 90.56% (78.82% on average). In the second stage, participants were given unlimited time to make a choice. Across the 25 participants, classification accuracy ranged from 78% to 100% (92.88% on average).

The results show that differentiating identical twins is challenging even for humans. Our goal is to train a computer vision system that recognizes identical twins with performance comparable to that of humans.

Another challenge is that secure mobile systems that process personal data have very limited computational resources.

Approach

In this work we propose a neural network architecture that extracts textural face information, combines it with face asymmetry data, and calculates a final matching similarity score indicating whether the two presented identities are the same.

Asymmetry is a unique characteristic of each human face. If you split an image of your face along a vertical line centered between the eyes and mirror the left and the right side separately, you will see two different faces (see Figure 2).

Figure 2. An example of mirroring one side of the face. Generated faces of the same identity look different, which emphasizes the utility of face asymmetry.

We used the following features to calculate the asymmetry of faces: histogram distance (see Figure 3a), kurtosis (the feature which describes the “tailedness” of a probability distribution) and block distance (see Figure 3b).

Figure 3a. Scheme of histogram distance calculation

Figure 3b. Scheme of block distance calculation
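The three asymmetry features can be illustrated with a minimal sketch. The exact definitions are not given above, so everything here is an assumption made for illustration: the face is split at the vertical midline, the right half is mirrored, the histogram distance is an L1 distance between normalized intensity histograms, kurtosis is computed over the pixel differences between the halves, and the block distance is the mean absolute difference of block averages. All function names are hypothetical.

```python
def mirror_halves(img):
    """Split a grayscale image (list of rows) at the vertical midline.

    Returns the left half and the horizontally flipped right half, so that
    the same index in both halves refers to mirrored face positions.
    """
    half = len(img[0]) // 2
    left = [row[:half] for row in img]
    right = [row[-half:][::-1] for row in img]
    return left, right


def mean(values):
    values = list(values)
    return sum(values) / len(values)


def histogram(pixels, bins=8, max_value=256):
    """Normalized intensity histogram of a flat list of pixels."""
    counts = [0] * bins
    for p in pixels:
        counts[min(int(p) * bins // max_value, bins - 1)] += 1
    return [c / len(pixels) for c in counts]


def histogram_distance(left, right, bins=8):
    """L1 distance between the histograms of the two halves (assumed metric)."""
    hl = histogram([p for row in left for p in row], bins)
    hr = histogram([p for row in right for p in row], bins)
    return sum(abs(a - b) for a, b in zip(hl, hr))


def kurtosis(values):
    """Pearson kurtosis: fourth central moment divided by squared variance."""
    m = mean(values)
    m2 = mean((v - m) ** 2 for v in values)
    m4 = mean((v - m) ** 4 for v in values)
    return m4 / (m2 * m2) if m2 > 0 else 0.0


def block_distance(left, right, block=2):
    """Mean absolute difference between block-averaged halves (assumed metric)."""
    def block_means(half):
        rows, cols = len(half), len(half[0])
        return [
            mean(half[r + i][c + j] for i in range(block) for j in range(block))
            for r in range(0, rows - block + 1, block)
            for c in range(0, cols - block + 1, block)
        ]
    ml, mr = block_means(left), block_means(right)
    return sum(abs(a - b) for a, b in zip(ml, mr)) / len(ml)


def asymmetry_features(img, bins=8, block=2):
    """Collect the three illustrative asymmetry features for one aligned face."""
    left, right = mirror_halves(img)
    diffs = [a - b for lrow, rrow in zip(left, right) for a, b in zip(lrow, rrow)]
    return {
        "histogram_distance": histogram_distance(left, right, bins),
        "kurtosis": kurtosis(diffs),
        "block_distance": block_distance(left, right, block),
    }
```

For a perfectly symmetric image all three values are zero in this sketch; the more the two halves differ, the larger the histogram and block distances become.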

Our method uses dlib [3] as a face detector and landmark extractor to prepare face regions for the alignment, preprocessing and matching stages.

An affine transformation was performed so that the straight line connecting eye centers was horizontal and eyes were symmetric. Images were then cropped and rescaled to 194x194, so that the eye centers of different faces were in the same locations.
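The alignment step can be sketched as a similarity transform (a special case of an affine transform) computed from the two eye centers. This is an illustrative sketch, not the exact procedure used in the work: the target eye positions (`eye_y`, `eye_gap`, given as fractions of the crop size) and the function names are hypothetical, and only the 194x194 crop size comes from the text.

```python
import math


def eye_alignment_matrix(left_eye, right_eye, size=194, eye_y=0.4, eye_gap=0.4):
    """Return a 2x3 matrix [[a, -b, tx], [b, a, ty]] that aligns the eyes.

    The transform rotates, scales, and shifts the image so that the eye
    centers lie on a horizontal line, symmetric about the vertical center
    of a size x size crop. eye_y and eye_gap are hypothetical target values.
    """
    (lx, ly), (rx, ry) = left_eye, right_eye
    dx, dy = rx - lx, ry - ly
    angle = math.atan2(dy, dx)                      # tilt of the eye line
    scale = (eye_gap * size) / math.hypot(dx, dy)   # fix the inter-eye distance
    a = scale * math.cos(-angle)                    # rotate by -angle to level the eyes
    b = scale * math.sin(-angle)
    # Shift so the midpoint between the eyes lands on the target midpoint.
    mx, my = (lx + rx) / 2, (ly + ry) / 2
    tx = size / 2 - (a * mx - b * my)
    ty = eye_y * size - (b * mx + a * my)
    return [[a, -b, tx], [b, a, ty]]


def transform_point(matrix, point):
    """Apply the 2x3 matrix to a single (x, y) point."""
    (m00, m01, tx), (m10, m11, ty) = matrix
    x, y = point
    return m00 * x + m01 * y + tx, m10 * x + m11 * y + ty


# Example: eye centers as a landmark detector might report them.
matrix = eye_alignment_matrix(left_eye=(80, 120), right_eye=(140, 100))
aligned_left = transform_point(matrix, (80, 120))
aligned_right = transform_point(matrix, (140, 100))
```

With these assumed targets, both eyes end up at the same height and at equal distances from the vertical center of the crop, regardless of the original head tilt or face size. The same matrix could then be passed to an image warping routine to produce the aligned crop.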

To provide robustness in difficult lighting conditions (over-illumination, dark images, side lighting, etc.), we decided to use self-quotient image (SQI) preprocessing [4] to examine face texture details (see Figure 4). This technique removes color information and extracts illumination-invariant features, which help to improve the proposed face recognition algorithm.

Figure 4. An example of light normalization using SQI technique [4].
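The idea behind a self-quotient image is to divide each pixel by a smoothed version of its neighborhood, so that slowly varying illumination cancels out. The sketch below is a simplification for illustration only: it uses a plain box filter for smoothing, whereas SQI is usually described with a Gaussian-type kernel, and the function names, `radius` and `eps` are hypothetical.

```python
def box_blur(img, radius=1):
    """Smooth a grayscale image (list of rows) with a mean filter.

    Coordinates outside the image are clamped to the nearest border pixel.
    """
    rows, cols = len(img), len(img[0])
    out = []
    for r in range(rows):
        out_row = []
        for c in range(cols):
            window = [
                img[min(max(r + i, 0), rows - 1)][min(max(c + j, 0), cols - 1)]
                for i in range(-radius, radius + 1)
                for j in range(-radius, radius + 1)
            ]
            out_row.append(sum(window) / len(window))
        out.append(out_row)
    return out


def self_quotient(img, radius=1, eps=1e-6):
    """Divide each pixel by its local mean: Q = I / (smooth(I) + eps)."""
    smooth = box_blur(img, radius)
    return [
        [p / (s + eps) for p, s in zip(row, srow)]
        for row, srow in zip(img, smooth)
    ]


# The same texture under two global brightness levels.
face = [[10, 20, 30], [40, 50, 60], [70, 80, 90]]
brighter = [[3 * p for p in row] for row in face]
q_face = self_quotient(face)
q_brighter = self_quotient(brighter)
```

In this sketch `q_face` and `q_brighter` are practically identical, because a global brightness factor appears in both the numerator and the denominator and cancels. A perfectly uniform region maps to values close to 1, so only local texture remains.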

We used the ND-TWINS-2009-2010 database [5] for training and testing: 24 349 face stills from 492 twin subjects (372 pairs). We used frontal-pose images captured under different illumination conditions and with various emotions.

Figure 5. Twin recognition integration into the main face recognition module

Figure 5 shows how twin recognition is integrated into the main face recognition module. This scheme has a significant advantage: the twin recognition solution affects only the false rejection rate of the main face recognition module.
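One way to read this property is sketched below, under the assumption that the twin recognition module runs as a second check only on pairs that the main module has already accepted. The function name, the score convention (higher means more similar) and both thresholds are hypothetical.

```python
def authenticate(main_score, twin_score, main_threshold=0.5, twin_threshold=0.5):
    """Two-stage decision: the twin check runs only after the main matcher accepts."""
    if main_score < main_threshold:
        # The main module rejects; the twin module is never consulted,
        # so it cannot turn this rejection into an acceptance.
        return False
    # The twin module can only confirm or veto an existing acceptance.
    return twin_score >= twin_threshold
```

Under this assumption the second stage never accepts a pair that the main module rejected. The only error it can add is vetoing a genuine user, which shows up as an increase in the false rejection rate.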

Results and insights

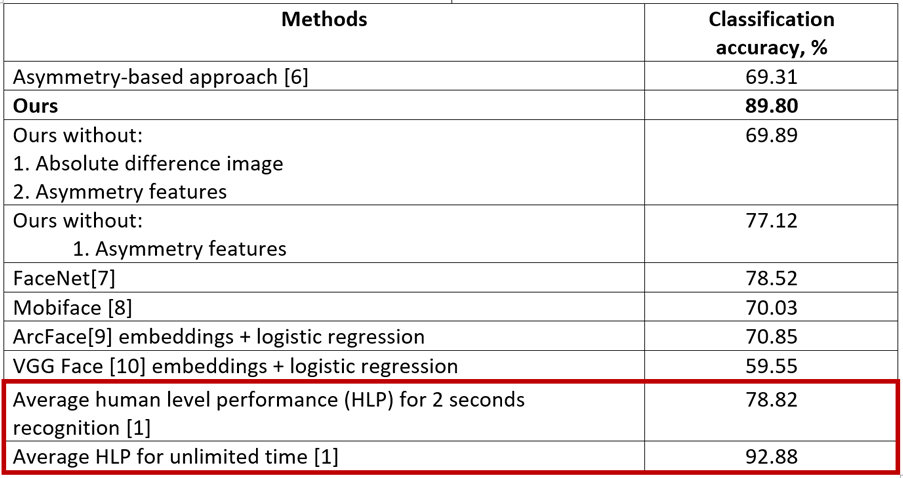

We compared our solution with many existing approaches used in deep face recognition and twin differentiation in particular. Table 1 shows a comparison of the proposed twin recognition method with conventional approaches, using the proposed test set.

Human-level performance results [1] are included in the table as a reference only (not for direct comparison), because the authors of [1] did not provide information about the test set they used. Human-level performance is reported in terms of accuracy, so we also provide accuracy values to give a rough estimate of how closely the proposed algorithm approaches human decisions.

Table 1. Performance of different face recognition approaches using ND-TWINS-2009-2010 test set [5].

Our approach shows the best performance when we combine the CNN-based and asymmetry-based methods. If we remove the asymmetry part of the algorithm, recognition performance degrades significantly. Also, as shown in Table 2, the proposed approach has only 150 000 parameters and could easily be used on low-performance devices.

Table 2. Model size comparison of different face recognition CNN models.

Our system restrictions were as follows:

• Mobile device equipped with Qualcomm Snapdragon 835 CPU.

• Only single core available.

• < 3 MB memory available to store model parameters.

• Matching execution time < 10 ms.

The proposed solution fits within these limits: matching takes ~7 ms, and the algorithm occupies 2.7 MB of disk space.

Conclusion and future work

We investigated the combination of hand-crafted asymmetry features and CNN-based features, which provides twin recognition results for frontal faces that are close to human-level performance.

The proposed solution has an optimized CNN architecture suitable for low-power devices and embedded systems, providing a significant improvement in biometric security.

In future work we plan to extend the algorithm to handle various face poses. Obtaining twin data is also a major issue: only a few datasets are available, and they were captured under significant restrictions (fixed face poses, a small number of emotions). Full testing on general face datasets is therefore not applicable to the twin differentiation problem. For this reason it is reasonable to consider modern face generation techniques, which could help to generate twin face pairs with specific features and traits.

Publication

Our paper was published at the 2020 IEEE International Joint Conference on Biometrics (IJCB).

Link to the paper

https://doi.org/10.1109/IJCB48548.2020.9304934

References

[1] S. Biswas, K. W. Bowyer and P. J. Flynn, "A study of face recognition of identical twins by humans", 2011 IEEE International Workshop on Information Forensics and Security, Iguacu Falls, 2011, pp. 1-6.

[2] "Twins days festival website", http://www.twinsdays.org/.

[3] "Dlib", http://dlib.net/.

[4] T. H. N. Le, T. Hoang Ngan, et al., "Facial aging and asymmetry decomposition based approaches to identification of twins", Pattern Recognition, 2015, pp. 3843-3856.

[5] University of Notre Dame, ND-TWINS-2009-2010 database, http://cse.nd.edu/cvrl/CVRL/Data_Sets.html.

[6] F. Juefei-Xu and M. Savvides, "An Augmented Linear Discriminant Analysis Approach for Identifying Identical Twins with the Aid of Facial Asymmetry Features", 2013 IEEE Conference on Computer Vision and Pattern Recognition Workshops, Portland, OR, 2013, pp. 56-63.

[7] F. Schroff, D. Kalenichenko and J. Philbin, "FaceNet: A unified embedding for face recognition and clustering", 2015 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Boston, MA, 2015, pp. 815-823.

[8] C. N. Duong, K. G. Quach, N. Le, N. Nguyen, K. Luu, "Mobiface: A lightweight deep learning face recognition on mobile devices", arXiv:1811.11080, 2018.

[9] J. Deng, J. Guo, N. Xue, S. Zafeiriou, "ArcFace: Additive Angular Margin Loss for Deep Face Recognition", arXiv:1801.07698, 2018.

[10] O. Parkhi, A. Vedaldi, A. Zisserman, "Deep face recognition", British Machine Vision Conference, 2015.