AI

Augmenting Perceptual Super-Resolution via Image Quality Predictors

|

The Conference on Computer Vision and Pattern Recognition (CVPR) is one of the most prestigious annual conferences in the field of computer vision and pattern recognition. It serves as a premier platform for researchers and practitioners to present cutting-edge advancements across a broad spectrum of topics, including object recognition, image segmentation, motion estimation, 3D reconstruction, and deep learning. As a cornerstone of the computer vision community, CVPR fosters innovation and collaboration, driving the field forward with groundbreaking research. In this blog series, we delve into the latest research contributions featured in CVPR 2025, offering insights into groundbreaking studies that are shaping the future of computer vision. Each post will explore key findings, methodologies, and implications of selected papers, providing readers with a comprehensive understanding of the innovations driving the field forward. Stay tuned as we uncover the transformative potential of these advancements in our series. Here is a list of them. #2. Augmenting Perceptual Super-Resolution via Image Quality Predictors (AI Center - Toronto) #3. VladVA: Discriminative Fine-tuning of LVLMs (AI Center - Cambridge) #4. Accurate Scene Text Recognition with Efficient Model Scaling and Cloze Self-Distillation (Samsung R&D Institute United Kingdom) #5. Edge-SD-SR: Low Latency and Parameter Efficient On-device Super-Resolution with Stable Diffusion via Bidirectional Conditioning (AI Center - Cambridge) #6. DreamCache: Finetuning-Free Lightweight Personalized Image Generation via Feature Caching (Samsung R&D Institute United Kingdom) |

1. Introduction

We are excited to share our research in improving perceptual quality of super-resolved images. Digital Super-Resolution (SR) is an important task arising in a variety of applications, such as smartphone camera zoom and TV display. In simple terms, the goal of SR is a meaningful increase in the resolution (number of pixels) of an image, by adding details that appear natural and improve visual quality. Since SR images are usually produced to improve the user experience, we believe incorporating human preferences in SR is crucial. In this blog post, we discuss various strategies to achieve this stated goal, using automated models of human image quality judgments, instead of direct feedback from humans. We find this approach is not only more scalable for training SR models, but it also enables novel algorithmic techniques that improve SR outputs.

SR, a classical inverse problem in computer vision, is inherently ill-posed, inducing a distribution of plausible solutions for every input [1]. Imagine increasing the resolution of a photograph of a faraway grassy hill – many possible patterns of grass could have induced the resulting blurry green image. Thus, the fundamental challenge of SR is to find a perceptually plausible sample from a distribution of high-resolution (HR) images that could have given rise to the associated low resolution (LR) image. Early models (e.g. [2, 3]), trained with pixel-wise losses, produce ‘average’ and thus blurry HR images with a high peak signal-to-noise ratio (PSNR). However, humans prefer HR images with high perceptual fidelity. A variety of techniques, from perceptual metrics [4] to adversarial losses [5], are employed to this end. The challenge of such methods, however, is to produce perceptually plausible outputs without introducing high-frequency artifacts or straying too far from the true signal [6], a balance studied theoretically under the ‘perception–distortion tradeoff’ [7].

With the motivation of incorporating human feedback in the SR process, in this work, we explore the use of No-Reference Image Quality Assessment (NR-IQA) metrics, which are powerful models designed to emulate human preferences for image quality. Recently, Ding et al. [8] attempt to use various full-reference (FR) IQA metrics for SR model finetuning, and HGGT [9] proposes to use human guidance in the GT selection process. Unlike these works, we attempt to assess the ability of NR-IQA metrics to provide automatic and precise guidance in agreement with humans.

1.1 Our Approach

We begin with a comprehensive analysis of NR-IQA metrics on human-derived SR data, identifying human alignment of different metrics. Then, we explore two methods of applying NR-IQA models to SR learning (see Figure 1): (i) altering data sampling, by building on an existing multi-ground-truth SR framework, and (ii) directly optimizing a differentiable quality score. Our results demonstrate a more human-centric perception-distortion tradeoff, focusing less on non-perceptual pixelwise distortion, and instead improving the balance between perceptual fidelity and human-tuned NR-IQA measures.

1.2 Our contributions

Figure 1. Schematics for improving perceptual super-resolution (SR). Perceptual quality of SR can be improved in two ways: (Left) providing supervision through multiple enhanced ground-truths (EGT) or (Right) direct optimization for the quality of the super-resolved image. In both cases, human-in-the-loop can greatly improve performance. However, manual annotation is tedious, imprecise, and nondifferentiable. An IQA metric can replace a human in rating the enhanced ground-truths or can directly act as a differentiable optimization objective. In this paper, we specifically assess whether more practical no-reference (NR) IQA metrics can replace human raters for SR. We find that combining NR-IQA-based sampling and regularized optimization is sufficient to attain state-of-the-art perceptual image quality, without requiring human ratings.

2. Analysis of NR-IQA metrics

We analyze the alignment between various NR-IQA metrics and human judgements for assessing the aesthetic quality of SR images on two publicly available datasets:

SBS180K [10]: The dataset consists of pairs of SR images generated using different SR models for the same LR images, and scores depicting the fraction of annotators preferring the aesthetic quality of the second image over the first one. Due to the large size of the dataset, we analyze the metrics in two phases. In phase I, we analyze 20 NR-IQA metrics and their variants (total 42 metrics) on a small subset of the train set. We identify the top seven metrics with high alignment with human judgements: PaQ-2-PiQ, NIMA, MUSIQ, LIQE, ARNIQA, QAlign, and TOPIQ-NR. In phase II, we analyze the top seven NR-IQA metrics from phase I on the complete train and test sets. We observe that MUSIQ [11] has relatively higher accuracy on both train and test sets compared to other six metrics.

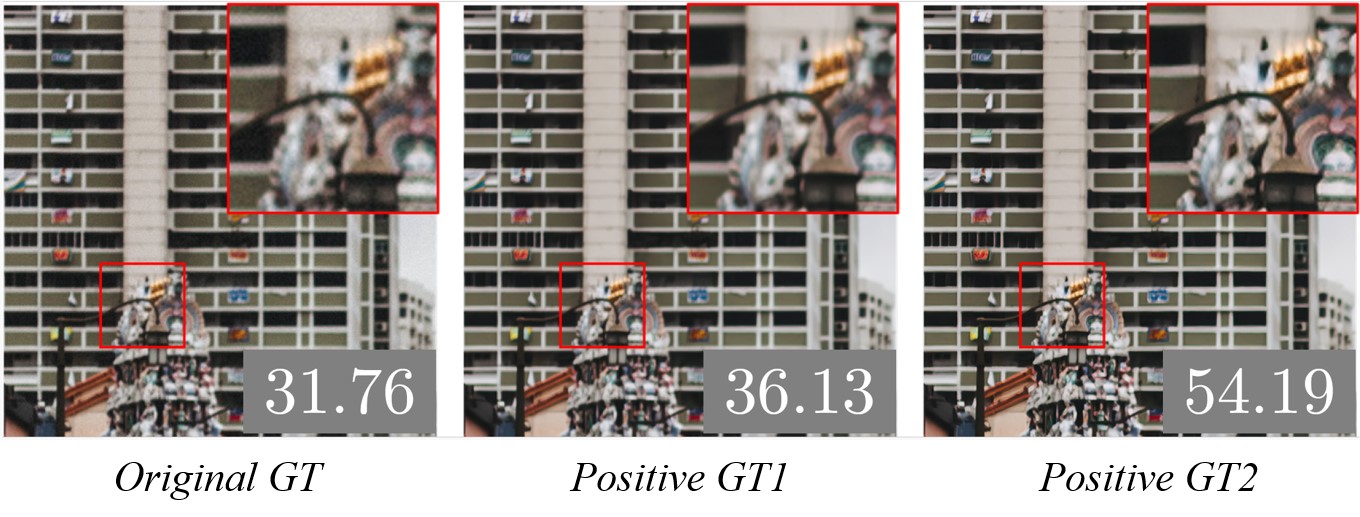

HGGT [9]: The dataset contains quintuplets of an original HR ground-truth (GT) patch and four enhanced GTs with annotations of being better than (‘positive’), similar to (‘similar’), or worse than (‘negative’) the original GT. We analyze the seven selected metrics of phase I above on this dataset. Once again, we find that MUSIQ aligns with human preferences the most among the seven contestants.

Note, while humans can only provide coarse annotations, MUSIQ provides more fine-grained scores in agreement with human judgments (see Figure 2).

Figure 2. MUSIQ can differentiate the quality of two images, both marked as “positive” by human annotators. Higher MUSIQ indicates higher quality (zoom for details).

3. Methods

3.1 Background

HGGT demonstrates the effectiveness of training SR models using multiple GTs per input, with the straightforward approach of sampling uniformly randomly from the positives (we call this strategy ‘UPos’). Then, they train their models using a standard SR loss:

where I ̂ is the predicted SR image, I is the chosen GT, dP is a perceptual loss, and D is an adversarial discriminator.

However, HGGT requires human labels, which are difficult to scale and often domain dependent. In contrast, we explore the opportunities afforded by NR-IQA models, which not only eschew human labels, but also confer additional capabilities – namely, the ability to provide more fine-grained non-uniform sampling weights and to enable direct optimization via differentiability.

3.2 Proposed Approach

3.2.1 Reweighted Sampling

Here, we explore straightforward alternatives to UPos of the following form, with Q being MUSIQㅡan NR-IQA metric:

Softmax-All (SMA). Let SI be a set of all pseudo-GTs. This setting uses no human annotation.

Softmax-Positives (SMP). Let SI be a set of positives only. This setting is close to HGGT, just with non-uniform weights.

Argmax-online (AMO). In above strategies, we first select a full GT image and then extract a training patch from that GT. In contrast, for this strategy, we sample one patch from the same random location in each GT, then run Q on each patch, and choose the best one (i.e., argmax). Since quality is computed at the patch level (which may disagree with quality assessments at the full image level), this enables a more fine-grained judgment compared to human annotations.

3.2.2 Direct Optimization (Fine-Tunning)

Another obvious approach to improving our SR model without human labels is to simply include Q in our objective function. Since NR-IQA models are large neural networks, it is possible for direct optimization to induce artifacts that fool Q into providing a high score, similar to the well-known phenomenon of adversarial attacks. To mitigate such artifacts, we finetune the SR model using LoRA [12] weights (ϕ), which reduce the optimized network capacity. Thus, we include NR-IQA as an additional regularizing loss term, added to the one in HGGT, as follows:

Results

We evaluate proposed approaches on the HGGT Test-100 using two low-level distortion metrics, three mid-level full-reference visual metrics, and four NR-IQA metrics. We include two human-annotation-free baselines that do not incorporate NR-IQA: “OrigsOnly”, which trains only with original (non-enhanced) GT, and “Rand”, which randomly chooses a supervisory image from among all potential GTs. Brief results are shown in Table 1 and Figure 3. Refer to our paper for complete results.

Table 1. Evaluation on the held-out HGGT Test-100. Second column indicates that a method works with no human GT (✓) or requires human GT (✗). * indicates previous SoTA (namely, HGGT [9]).

Neural IQA Sampling Outperforms Human Rankings. In general, we find AMO is consistently superior to both SMA and SMP, which suggests that selecting for quality (especially at the fine-grained level demanded in the online setting) is more important than simply having multiple GTs; AMO is also measurably better than UPos in terms of perceptual quality, despite the lack of access to human annotation.

IQA Fine-tuning Improves Human Sampling. We examine the impact of fine-tuning (FT) on NR-IQA, when used on top of human data (denoted as UPos+FT). Overall, we observe performance boosts with FT, suggesting that useful information can be extracted from neural NR-IQA models, even on top of a model with access to human annotations, providing a simple mechanism for improving image quality in SR models.

Superior Perceptual Quality via Neural Sampling and Fine-Tuning. Finally, we test the natural unification of IQA-based neural sampling and finetuning, denoted by the AMO+FT. We find that this combination surpasses the SoTA HGGT approach (UPos), in terms of perceptual quality, despite not using any human annotations.

Upper-bounding NR-IQA Evaluation Performance. In addition, we compute “gold standard” NR-IQA values for the GT Test data, taking the best score among the available pseudo-GT alternatives. We find that altered sampling generally does not reach these values, but FT is able to reach and even surpass them in several scenarios.

Figure 3. Qualitative Results with corresponding MUSIQ scores.

Conclusions

While prior research in super resolution utilizes human annotations and trades reference fidelity for perceptual quality, we improve perceptual quality without sacrificing fidelity in an annotation-free manner via neural NR-IQA. We hope this enables future investigation into NR-IQA for SR in the context of domain shift, as NR-IQA does not need paired GT.

Link to the paper

https://arxiv.org/abs/2504.18524

References

[1] Simon Baker and Takeo Kanade. Limits on super-resolution and how to break them. IEEE Transactions on Pattern Analysis and Machine Intelligence (PAMI), 2002.

[2] Chao Dong, Chen Change Loy, Kaiming He, and Xiaoou Tang. Learning a deep convolutional network for image super-resolution. In European Conference on Computer Vision (ECCV), 2014.

[3] Chao Dong, Chen Change Loy, and Xiaoou Tang. Accelerating the super-resolution convolutional neural network. In European Conference on Computer Vision (ECCV), 2016.

[4] Justin Johnson, Alexandre Alahi, and Li Fei-Fei. Perceptual losses for real-time style transfer and super-resolution. In European Conference on Computer Vision (ECCV), 2016.

[5] Divya Saxena and Jiannong Cao. Generative adversarial networks (GANs) challenges, solutions, and future directions. ACM Computing Surveys (CSUR), 2021.

[6] Jie Liang, Hui Zeng, and Lei Zhang. Details or artifacts: A locally discriminative learning approach to realistic image super-resolution. In IEEE Conference on Computer Vision and Pattern Recognition (CVPR), 2022.

[7] Yochai Blau and Tomer Michaeli. The perception-distortion tradeoff. In IEEE Conference on Computer Vision and Pattern Recognition (CVPR), 2018.

[8] Keyan Ding, Kede Ma, ShiqiWang, and Eero P Simoncelli. Comparison of full-reference image quality models for optimization of image processing systems. International Journal of Computer Vision (IJCV), 2021.

[9] Du Chen, Jie Liang, Xindong Zhang, Ming Liu, Hui Zeng, and Lei Zhang. Human guided ground-truth generation for realistic image super-resolution. In IEEE Conference on Computer Vision and Pattern Recognition (CVPR), 2023.

[10] Xiaoming Li, Wangmeng Zuo, and Chen Change Loy. Learning generative structure prior for blind text image super-resolution. In IEEE Conference on Computer Vision and Pattern Recognition (CVPR), 2023.

[11] Junjie Ke, Qifei Wang, Yilin Wang, Peyman Milanfar, and Feng Yang. MUSIQ: Multi-scale image quality transformer. In International Conference on Computer Vision (ICCV), 2021.

[12] Edward J Hu, Yelong Shen, Phillip Wallis, Zeyuan Allen-Zhu, Yuanzhi Li, Shean Wang, Lu Wang, and Weizhu Chen. LoRA: Low-rank adaptation of large language models. International Conference on Learning Representations (ICLR), 2022.