
Video ScaleNet (VSN) – Towards the Next-Generation Video Streaming Service

Introduction

There is no doubt that the pandemic has changed the way we communicate, dramatically and quickly, pushing us ever deeper into a virtual world. Some might say these changes were already under way, but few would dispute that they have happened remarkably fast. How can cutting-edge technology help people under these conditions? We believe one answer is video streaming services that reach an unprecedented level of efficiency through AI technology. Samsung Research has been working to innovate the whole media processing pipeline, combining cutting-edge AI technology with our traditional media processing expertise to deliver realistic media experiences to consumers on CE devices.

Technology

In this blog post, we introduce Video ScaleNet (VSN), an image and video scaler based on neural network technology. VSN is built on the autoencoder shown in Figure 1. The autoencoder is a well-known neural network architecture that has been widely used in data processing, for example to extract principal feature vectors or to reduce the dimensionality of input data through a bottleneck in the middle of the network.

Figure 1. Conventional Autoencoder Architecture

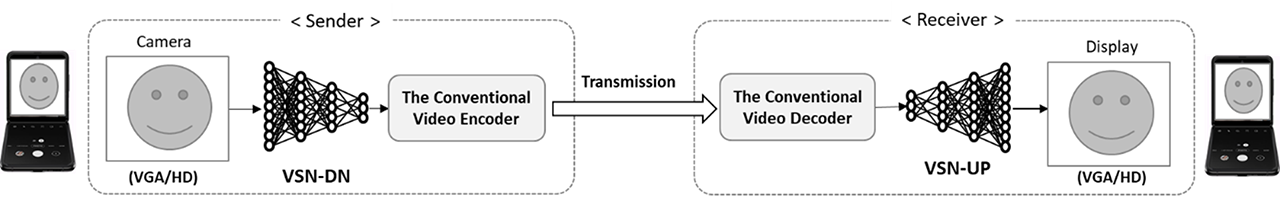
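To make the bottleneck idea concrete, here is a toy linear autoencoder in Python. It is a minimal sketch, not part of VSN: the 2-D training inputs lie on a line, so a 1-D code in the middle of the network is enough to reconstruct them, while a point off that line cannot be recovered. The function names, learning rate, and data are illustrative assumptions; practical autoencoders use non-linear layers and far more dimensions.

```python
import random

def train_linear_autoencoder(data, lr=0.05, epochs=2000, seed=0):
    """Toy linear autoencoder: 2-D input -> 1-D bottleneck code -> 2-D output.

    Encoder: z = e . x      Decoder: x_hat = d * z
    Trained with plain SGD on the loss 0.5 * ||x_hat - x||^2.
    """
    rng = random.Random(seed)
    e = [rng.uniform(-0.5, 0.5) for _ in range(2)]
    d = [rng.uniform(-0.5, 0.5) for _ in range(2)]
    for _ in range(epochs):
        for x in data:
            z = e[0] * x[0] + e[1] * x[1]              # encode through the bottleneck
            err = [d[i] * z - x[i] for i in range(2)]  # decode and compare with the input
            grad_z = err[0] * d[0] + err[1] * d[1]     # backpropagate to the code
            for i in range(2):
                d[i] -= lr * err[i] * z                # decoder gradient
                e[i] -= lr * grad_z * x[i]             # encoder gradient
    return e, d

def reconstruct(x, e, d):
    z = e[0] * x[0] + e[1] * x[1]
    return [d[0] * z, d[1] * z]

def squared_error(x, x_hat):
    return sum((a - b) ** 2 for a, b in zip(x, x_hat))

# Training data lies on the line x1 = 2 * x0, so one code value per sample suffices.
data = [(t, 2 * t) for t in (-1.0, -0.5, 0.5, 1.0)]
e, d = train_linear_autoencoder(data)
print(squared_error((0.25, 0.5), reconstruct((0.25, 0.5), e, d)))  # on the line
print(squared_error((2.0, -1.0), reconstruct((2.0, -1.0), e, d)))  # off the line
```

The bottleneck keeps only the principal direction of the training data, which is the property the text describes as extracting principal features or reducing dimensionality.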

The basic idea of VSN is to replace the encoder and decoder of the autoencoder with an AI down-scaler and an AI up-scaler, which brings a super-resolution algorithm based on convolutional neural networks (CNNs) into the video compression pipeline. Recent research has shown that jointly learning the compact-resolution (down-scaling) and super-resolution (up-scaling) networks can lead to significant quality improvements [1] [2]. As shown in Figure 2, VSN usually consists of two parts. The first part, VSN-DN, reduces the video to a lower resolution while retaining the primary features of the input video. The second part, VSN-UP, recovers the pixel values and restores the resolution to that of the input video.

Figure 2. Video ScaleNet (VSN) Architecture
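The encoder/decoder substitution and the joint training it enables can be sketched in one dimension. In this hypothetical example, `vsn_dn` and `vsn_up` are tiny linear filters standing in for the CNN-based VSN-DN and VSN-UP (they are not the actual networks), and both filters are updated together to minimise the reconstruction error of the whole down-then-up chain. The codec between the two stages is omitted, the sine signals are synthetic, and gradients are computed by finite differences only to keep the sketch dependency-free; a real implementation would use a deep-learning framework with backpropagation.

```python
import math

def vsn_dn(x, a):
    """Hypothetical stand-in for VSN-DN: a learnable 2-tap filter applied with stride 2."""
    return [a[0] * x[2 * k] + a[1] * x[2 * k + 1] for k in range(len(x) // 2)]

def vsn_up(y, b):
    """Hypothetical stand-in for VSN-UP: each low-res sample yields two output samples,
    mixing in its left or right neighbour (edges are clamped)."""
    n = len(y)
    out = []
    for k in range(n):
        left, right = y[max(k - 1, 0)], y[min(k + 1, n - 1)]
        out.append(b[0] * y[k] + b[1] * left)   # even output sample
        out.append(b[0] * y[k] + b[1] * right)  # odd output sample
    return out

def joint_loss(params, signals):
    """Mean squared reconstruction error of the full DN -> UP chain."""
    a, b = params[:2], params[2:]
    total, count = 0.0, 0
    for x in signals:
        x_hat = vsn_up(vsn_dn(x, a), b)
        total += sum((p - q) ** 2 for p, q in zip(x_hat, x))
        count += len(x)
    return total / count

def train_jointly(signals, params, lr=0.05, steps=500, eps=1e-6):
    """Gradient descent on down- and up-scaler parameters together,
    using central finite differences for the gradient."""
    params = list(params)
    for _ in range(steps):
        grad = []
        for i in range(len(params)):
            plus, minus = params[:], params[:]
            plus[i] += eps
            minus[i] -= eps
            grad.append((joint_loss(plus, signals) - joint_loss(minus, signals)) / (2 * eps))
        params = [p - lr * g for p, g in zip(params, grad)]
    return params

# Smooth 1-D test signals standing in for image rows.
signals = [[0.5 + 0.4 * math.sin(2 * math.pi * f * n / 32) for n in range(32)] for f in (1, 2, 3)]
baseline = [0.5, 0.5, 1.0, 0.0]  # box-average down-scaler + nearest-neighbour up-scaler
learned = train_jointly(signals, baseline)
print(joint_loss(baseline, signals), joint_loss(learned, signals))
```

Starting from a fixed box-average/nearest-neighbour pair, joint training lowers the end-to-end error because the up-scaler learns to use neighbouring samples while both filters remain free to adapt to each other.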

VSN-DN and VSN-UP can also operate in a stand-alone mode, as a down-sampler and an up-sampler respectively. Because both parts are built on non-linear neural network architectures, they can deliver significant quality improvements over conventional image scalers such as bicubic or Lanczos interpolation. Various video processing algorithms can be deployed between VSN-DN and VSN-UP. The key technical challenges of VSN are how to train the joint networks efficiently and how to design lightweight networks for embedded devices. As shown in Figure 3, VSN reconstructs images with sharper, more detailed results than the conventional scaler.

Figure 3. Perceptual Quality Comparison (Left: Bicubic, Right: VSN; 1080p→2160p)
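For reference, the conventional bicubic scaler that VSN is compared against is built on cubic convolution. Below is a minimal 1-D sketch using the Keys kernel with a = -0.5; libraries differ in the value of a and in border handling, and edge clamping is an assumption here. 2-D bicubic scaling applies the same filter along rows and then columns. Because the kernel is a fixed linear filter, it applies the same weights regardless of image content, whereas a learned non-linear scaler can adapt to it.

```python
import math

def cubic_kernel(x, a=-0.5):
    """Keys cubic convolution kernel; a is the kernel's free parameter."""
    x = abs(x)
    if x <= 1:
        return (a + 2) * x ** 3 - (a + 3) * x ** 2 + 1
    if x < 2:
        return a * x ** 3 - 5 * a * x ** 2 + 8 * a * x - 4 * a
    return 0.0

def cubic_upscale_1d(signal, factor, a=-0.5):
    """Upscale one row of samples by an integer factor using four-tap cubic convolution."""
    n = len(signal)
    out = []
    for i in range(n * factor):
        s = (i + 0.5) / factor - 0.5            # source position, pixel-centre aligned
        base = math.floor(s)
        t = s - base                            # fractional offset in [0, 1)
        acc = 0.0
        for k in range(-1, 3):                  # taps base-1 .. base+2
            idx = min(max(base + k, 0), n - 1)  # clamp at the borders
            acc += signal[idx] * cubic_kernel(k - t, a)
        out.append(acc)
    return out

ramp = [float(v) for v in range(8)]
print(cubic_upscale_1d(ramp, 2))
```

Away from the borders the sketch reproduces a linear ramp exactly, a basic sanity property of this kernel.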

Applications

Adaptive streaming is a technique for delivering multimedia data, such as video on demand or live broadcasts, from a server to a client over a network. In the beginning, most media streaming services relied on dedicated protocols such as RTP/RTCP. However, because HTTP is supported by networks such as the Internet all over the world, HTTP-based streaming has become a popular and natural approach. Among HTTP streaming techniques such as download-and-play and progressive download, adaptive streaming has become the dominant component of OTT streaming services because it dynamically adjusts the compression level (bitrate), in part by changing the resolution of the content itself. Most OTT services, such as social network services and Internet video platforms, use adaptive streaming to deliver media over the Internet. Adaptive streaming usually relies on a packaging protocol such as MPEG-DASH or HLS, in which multiple streams are defined by profiles such as low, medium and high quality.
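Switching between profiles can be illustrated with a simple throughput-based selection rule. This is a hypothetical sketch: MPEG-DASH and HLS describe the available streams, but the rule for choosing among them is left to the client player, and the ladder values and 0.8 safety margin below are made-up examples rather than values from any real service.

```python
from dataclasses import dataclass

@dataclass(frozen=True)
class Profile:
    name: str
    width: int
    height: int
    bitrate_kbps: int

# Hypothetical bitrate ladder; a real service advertises its own profiles
# in the DASH manifest (MPD) or the HLS master playlist.
LADDER = (
    Profile("low", 1280, 720, 3_000),
    Profile("medium", 1920, 1080, 6_000),
    Profile("high", 3840, 2160, 16_000),
)

def select_profile(throughput_kbps, ladder=LADDER, safety=0.8):
    """Pick the highest-bitrate profile that fits within a safety margin of the
    measured throughput; fall back to the lowest profile if none fits."""
    budget = throughput_kbps * safety
    affordable = [p for p in ladder if p.bitrate_kbps <= budget]
    if affordable:
        return max(affordable, key=lambda p: p.bitrate_kbps)
    return min(ladder, key=lambda p: p.bitrate_kbps)

for measured in (25_000, 8_000, 1_000):
    print(measured, "kbps ->", select_profile(measured).name)
```

Production players often combine throughput estimates with buffer occupancy; the safety margin here simply leaves headroom for throughput fluctuations.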

In Figure 4, the left side shows a general adaptive bitrate streaming process for a client receiving an 8K stream. Here, the down-scaler and up-scaler are conventional signal processing filters such as bicubic interpolation. The right side shows the same system with VSN applied: the legacy down-scaler is replaced with VSN-DN, and VSN-UP is applied as a post-processing step, which can lead to better visual quality on the consumer side.

Figure 4. Video ScaleNet for Adaptive Streaming

We believe that adaptive bitrate streaming for OTT services is one of the best applications for VSN, since VSN outperforms legacy signal filters. In addition, our experiments showed that VSN is backward compatible: we observed no visual quality degradation even when only one VSN part (VSN-DN or VSN-UP) was combined with a legacy system.
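One way to see why one side can use VSN while the other stays legacy is that the only thing passed from server to client is an ordinary low-resolution video. The sketch below models each scaler as a plain frame-to-frame function. The box-average and nearest-neighbour scalers and the quantising "codec" are simple stand-ins, and the registries are hypothetical places where trained VSN-DN or VSN-UP models would plug in with the same signature.

```python
from typing import Callable, List

Frame = List[List[float]]
Scaler = Callable[[Frame], Frame]

def box_down_2x(frame: Frame) -> Frame:
    """Legacy-style down-scaler: average each 2x2 block (assumes even dimensions)."""
    return [[(frame[r][c] + frame[r][c + 1] + frame[r + 1][c] + frame[r + 1][c + 1]) / 4
             for c in range(0, len(frame[0]), 2)]
            for r in range(0, len(frame), 2)]

def nearest_up_2x(frame: Frame) -> Frame:
    """Legacy-style up-scaler: repeat every pixel in a 2x2 block."""
    out = []
    for row in frame:
        wide = [v for v in row for _ in range(2)]
        out.append(wide)
        out.append(list(wide))
    return out

def codec_round_trip(frame: Frame, step: float = 1 / 64) -> Frame:
    """Stand-in for encode -> network -> decode: uniform quantisation only."""
    return [[round(v / step) * step for v in row] for row in frame]

def stream(frame: Frame, down: Scaler, up: Scaler) -> Frame:
    low_res = down(frame)                 # server side: legacy scaler or VSN-DN
    received = codec_round_trip(low_res)  # the codec does not know which scaler ran
    return up(received)                   # client side: legacy scaler or VSN-UP

# Hypothetical registries: trained VSN-DN / VSN-UP models would be added here
# as further Scaler entries with the same frame-in, frame-out signature.
DOWN_SCALERS = {"legacy": box_down_2x}
UP_SCALERS = {"legacy": nearest_up_2x}

frame = [[(r + c) / 8 for c in range(4)] for r in range(4)]
out = stream(frame, DOWN_SCALERS["legacy"], UP_SCALERS["legacy"])
print(out)
```

Because both stages share one interface, swapping only the server's or only the client's entry still yields a valid pipeline.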

VSN can also be useful for video conferencing. In the example in Figure 5, a mobile camera captures 720p (HD) video, and VSN-DN reduces it to a lower resolution. Lowering the encoder's input resolution reduces the network bandwidth the video conference requires. The receiver then applies VSN-UP to upscale the decoded video back to 720p. VSN delivers almost identical perceptual visual quality while reducing the required bandwidth by approximately half.

Figure 5. Video ScaleNet for Video Conference
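The post does not state which resolution VSN-DN produces in this scenario, so the arithmetic below is only illustrative: it shows how far the pixel count falls for a couple of candidate resolutions below 720p (1280x720). Encoded bitrate does not scale exactly with pixel count, so these ratios are a rough guide to bandwidth rather than a measurement; the helper names are hypothetical.

```python
import math

def pixel_ratio(src, dst):
    """Fraction of the source pixel count that remains after resizing to dst (width, height)."""
    return (dst[0] * dst[1]) / (src[0] * src[1])

def half_pixel_count_resolution(width, height):
    """Scale both sides by 1/sqrt(2) so the pixel count roughly halves, rounding down
    to even sizes (many encoders require even dimensions for 4:2:0 video)."""
    s = 1 / math.sqrt(2)
    return int(width * s) // 2 * 2, int(height * s) // 2 * 2

hd = (1280, 720)
print(pixel_ratio(hd, (960, 540)))  # 0.5625
low = half_pixel_count_resolution(*hd)
print(low, pixel_ratio(hd, low))
```

Halving the pixel count needs a per-side scale of 1/sqrt(2), about 0.71, rather than 0.5; a 0.5 scale per side would leave only a quarter of the pixels.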

Conclusion

We are living in the era of artificial intelligence, and numerous researchers are working hard to make AI technology better. Still, there are many obstacles to delivering this technology directly to end users, especially on the embedded devices we carry with us every day. Samsung Research is doing its best to make sure everyone can enjoy the benefits of AI technology in their lives, efficiently and quickly. We would be happy if VSN helps people stay connected with each other more seamlessly and closely than before. Most of all, we hope it makes your day.

References

[1] “8K Codec Low-Latency Livestreaming and AI Streaming”, Samsung Developer Conference 2019, Oct. 29-30, 2019, San Jose, CA. https://youtu.be/g6SWYFEeA64?t=1638

[2] Yue Li, Dong Liu, Houqiang Li, Li Li, Zhu Li, and Feng Wu, “Learning a Convolutional Neural Network for Image Compact-Resolution”, IEEE Transactions on Image Processing, vol. 28, no. 3, Mar. 2019.