Communications

AI-powered Network-level and User-level Optimization: Challenges, Real-World Results, and the Path Forward

Samsung Research is preparing for future wireless technologies with convergence research of AI and communications, in which AI-native approaches drive the design and operation of the entire RAN (Radio Access Network) and CN (Core Network). In the Communications-AI Convergence blog series, we plan to introduce various research topics in this area: #3. Protocol/Networking & Operation, #4. Radio Resource Scheduler. This blog will cover AI applications to channel estimation, channel prediction, and signal validation, which have significant impacts on spectral efficiency and transmission performance.

1. Introduction

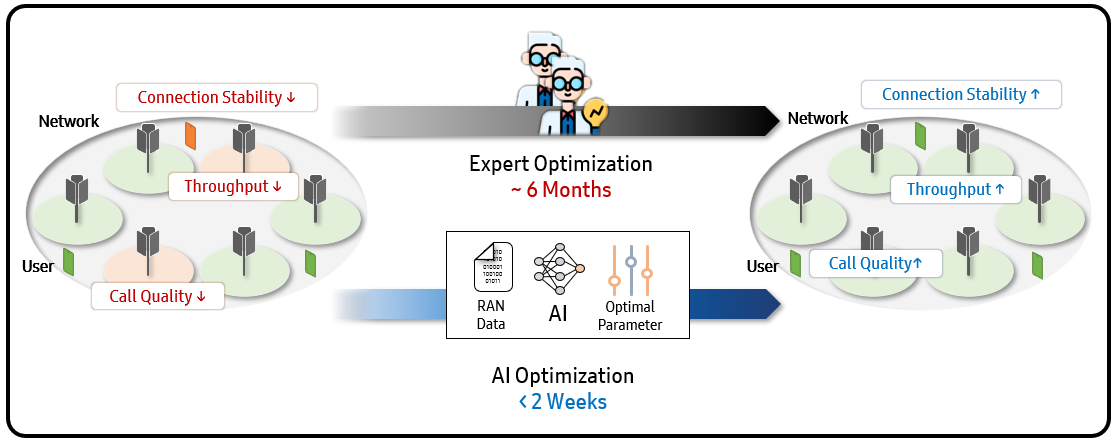

As networks evolve to support growing demand and business environments become more challenging than in the past, the future of mobile networks depends on building infrastructure that is both high-performing and cost-efficient. Traditionally, network optimization has relied heavily on the expertise of engineers who manually analyze performance data and adjust thousands of RAN control parameters. This process is time-consuming, reactive, and inherently difficult to scale across national or global networks.

AI presents a transformative opportunity: by leveraging data from deployed RAN, AI/ML models can identify patterns, infer network conditions, and automatically recommend optimal configurations. This facilitates faster decision-making, consistent performance tuning, and significant operational cost savings.

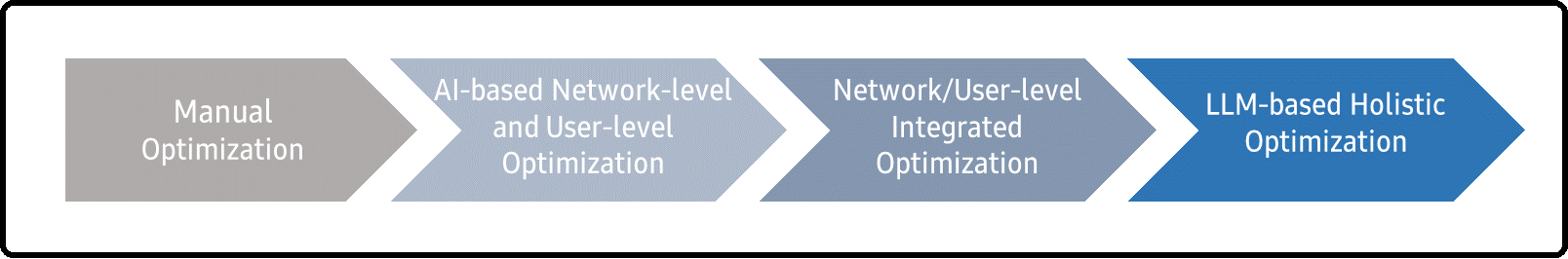

Moreover, AI can assist in optimizing networks at both the network level and the user level. Network-level parameter optimization can enhance overall network performance metrics such as throughput, but some users may still experience recurring issues under specific conditions, such as connection failures that undermine connection stability. These challenges can be addressed more effectively by fine-tuning parameters for individual users. As a result, AI can help optimize both network and user performance, as illustrated in Figure 1.

Figure 1. Transition to AI-based Network-level and User-level Optimization

2. AI-based Network Performance Optimization

2-1. Approach and Challenges: From Data to Deployment

Building a reliable AI-based network optimization model and system for RAN comes with several challenges.

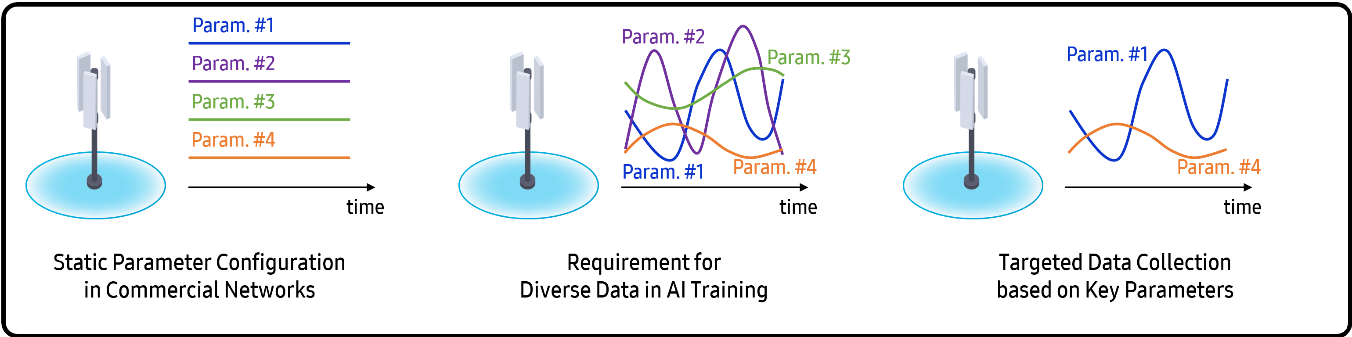

Challenge #1: Lack of Diverse Training Data

Most RAN equipment operates with default or static parameter settings, and changes are typically made only in response to faults or through manual intervention. As a result, commercial networks rarely generate data that reflects a variety of parameter configurations.

To effectively train AI models, it is essential to have data that captures how various parameter configurations interact with different environments. This helps the model learn KPI patterns under diverse conditions. Such data diversity is critical for training models that generalize well and produce reliable results in real-world deployments.

Our Solution: Samsung collaborated with domestic telecom operators to collect targeted data by carefully adjusting parameters in live commercial networks. Since testing all combinations of thousands of parameters is infeasible, we focused on a subset of parameters, identified through expert knowledge and curated data analysis, that significantly impact key KPIs such as throughput, spectral efficiency, and call drop rate. This approach allowed us to gather high-quality data representing a wide range of meaningful configurations. The approach is depicted in Figure 2.

Figure 2. Challenge and Approach
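To give a feel for why restricting the sweep to a small subset matters, here is a minimal sketch that enumerates a configuration grid. The parameter names (`handover_offset_db`, `time_to_trigger_ms`, `tx_power_dbm`), their values, and the figure of three values per parameter are all hypothetical; the post does not say which parameters were tuned or over which ranges.

```python
from itertools import product

def config_grid(candidate_values):
    """Enumerate every combination of the selected parameters' candidate values."""
    names = list(candidate_values)
    value_lists = [candidate_values[name] for name in names]
    return [dict(zip(names, combo)) for combo in product(*value_lists)]

# Hypothetical subset chosen by experts; names and values are illustrative only.
selected = {
    "handover_offset_db": [1, 2, 3],
    "time_to_trigger_ms": [160, 320, 640],
    "tx_power_dbm": [40, 43],
}

grid = config_grid(selected)   # 3 * 3 * 2 = 18 configurations to trial
full_space = 3 ** 1000         # 1000 parameters with 3 values each: far too many to test

print(len(grid))
```

Even under these toy assumptions, the selected subset yields a grid small enough to trial in a live network, while the unrestricted space is astronomically large.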

Challenge #2: Designing the Right AI Architecture

We designed our AI model to emulate the approach taken by human experts in optimizing mobile networks. Experienced experts typically initiate the optimization process by analyzing various performance indicators and environmental conditions to evaluate the current network state. Based on this assessment, they utilize their domain knowledge and operational experience to determine the appropriate parameter configurations and optimization strategy.

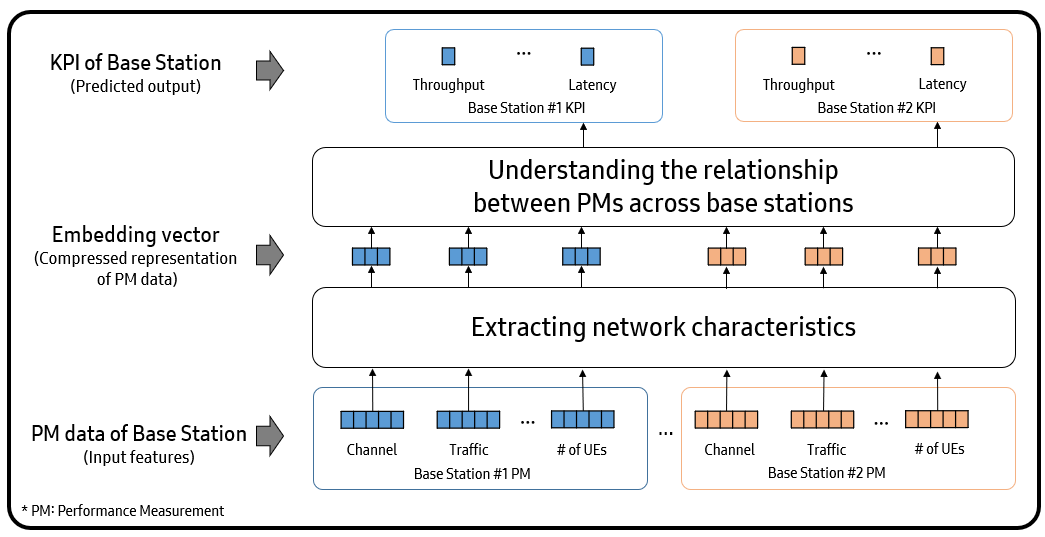

Figure 3. Network Optimization Model based on Transformer Architecture

Samsung’s AI model implements this expert-like reasoning process through a Transformer-based architecture [1], which learns complex dependencies among network performance measurements (PMs) that reflect the base station state and environmental conditions. This capability enables the model to accurately infer the underlying network state.

Figure 3 illustrates the overall architecture of our model. The model first extracts the latent state of a base station by encoding both internal characteristics and external environmental factors, while simultaneously learning interactions among neighboring base stations. This latent representation allows the model to estimate how KPI values would change in response to specific parameter configurations.
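The core operation that lets a Transformer relate one base station to its neighbors is scaled dot-product self-attention [1]. The sketch below shows that operation alone, in pure Python, with identity projections instead of learned weights. It is not the model described in this post; the two-element feature vectors (`load`, `interference`) are hypothetical stand-ins for real PMs.

```python
import math

def softmax(scores):
    """Numerically stable softmax over a list of floats."""
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def self_attention(features):
    """Scaled dot-product self-attention with identity projections.

    features: one feature vector per base station, all of equal length.
    Returns one context vector per station: a weighted mix of every
    station's features, weighted by similarity to that station.
    """
    dim = len(features[0])
    scale = math.sqrt(dim)
    contexts = []
    for query in features:
        scores = [sum(q * k for q, k in zip(query, key)) / scale for key in features]
        weights = softmax(scores)
        context = [sum(w * value[i] for w, value in zip(weights, features))
                   for i in range(dim)]
        contexts.append(context)
    return contexts

# Hypothetical normalized PMs per station: [load, interference]
stations = [[0.9, 0.1], [0.8, 0.2], [0.1, 0.9]]
latent = self_attention(stations)
```

In a trained model, learned query, key, and value projections would replace the identity mapping used here, and several attention layers would be stacked.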

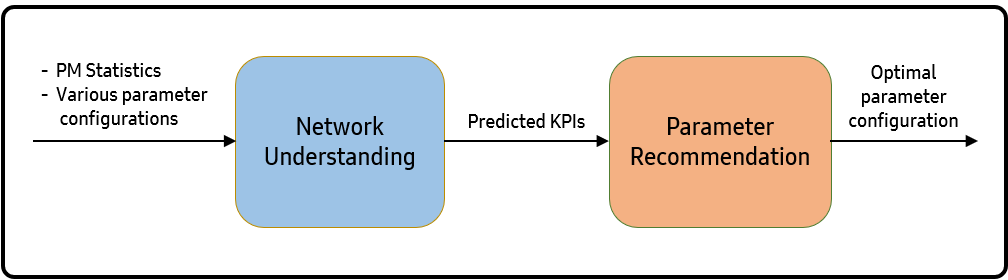

2-2. What the AI Model Does

Figure 4 demonstrates how the AI model works. The model takes as input the network data representing the current network status, the parameters currently in use, and a set of candidate parameter configurations. It then predicts the network performance for each candidate. Based on these predictions, the parameter recommendation module suggests the parameters that maximize the target KPIs. The entire model is designed so that training and inference run efficiently end to end.

Figure 4. AI Modeling for network optimization
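The predict-then-recommend loop described above can be sketched as follows. This is a minimal illustration under stated assumptions: `recommend`, `toy_predictor`, and the `tilt` and `load` fields are hypothetical names, and the quadratic toy predictor merely stands in for the trained model.

```python
from typing import Callable, Dict, List, Tuple

Config = Dict[str, float]
State = Dict[str, float]

def recommend(
    state: State,
    current: Config,
    candidates: List[Config],
    predict_kpi: Callable[[State, Config], float],
) -> Tuple[Config, float]:
    """Return the candidate with the highest predicted KPI and its predicted gain.

    Falls back to the current configuration when no candidate is predicted
    to improve on it.
    """
    baseline = predict_kpi(state, current)
    best = max(candidates, key=lambda cfg: predict_kpi(state, cfg))
    gain = predict_kpi(state, best) - baseline
    if gain <= 0:
        return current, 0.0
    return best, gain

def toy_predictor(state: State, cfg: Config) -> float:
    # Stand-in for the trained model: predicted throughput peaks when the
    # hypothetical "tilt" parameter equals 10 * load.
    return 100.0 - (cfg["tilt"] - 10.0 * state["load"]) ** 2

state = {"load": 0.6}
current = {"tilt": 2.0}
candidates = [{"tilt": 2.0}, {"tilt": 4.0}, {"tilt": 6.0}, {"tilt": 8.0}]
best, gain = recommend(state, current, candidates, toy_predictor)
```

Keeping the predictor behind a plain callable means the recommendation step does not depend on how the KPI model is built, which is one reasonable way to separate the two modules.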

2-3. Real Impact in Live Network

We deployed our AI model in live networks across two regions in South Korea [2].

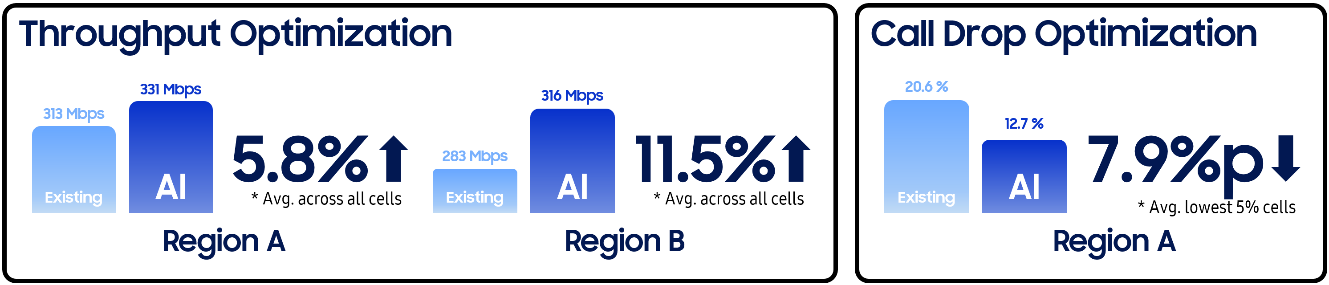

Field Trial 1 focused on improving throughput in two separate regions with different user densities. On average, we observed an 8.65% improvement (5.8% in region A and 11.5% in region B), as shown in Figure 5.

Field Trial 2 aimed at reducing the call drop rate. We observed that base stations with high call drop rates experienced significant reductions, while those with low call drop rates remained largely unchanged.

These outcomes were achieved without costly manual interventions or network downtime, highlighting how AI can adapt to environmental variability and consistently enhance performance across diverse regions. The results are shown in Figure 5.

Figure 5. Field Trial Results

3. AI-based User-level Mobility Optimization

3-1. Approach and Challenges

Some users may repeatedly experience service interruptions, such as disconnections during video streaming or voice calls, even when the overall cell performance appears satisfactory. These problems can significantly degrade user-perceived service quality, but they cannot be resolved through cell-level optimization. This limitation arises because cell-level optimization detects problems based on averaged cell performance indicators, which are insufficient for capturing the recurring problems experienced by individual users.

One characteristic of such user-specific issues is that they are related to contextual factors such as user behavior patterns. In particular, these issues may occur repeatedly along specific routes frequently traveled by users. In addition to mobility, these problems are also affected by device-related factors. Since capabilities such as radio measurements vary across user devices, the same route may cause recurring problems exclusively for users with specific device types.

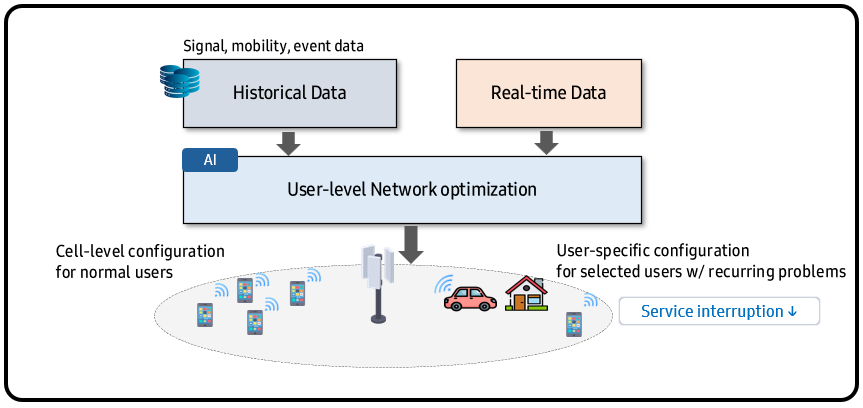

Figure 6 illustrates the concept of user-level mobility optimization, which addresses these challenges. Using historical data, we can extract patterns of recurring problems experienced by individual users. This is achieved by applying AI to information about the routes where issues occur [3]. Based on these derived patterns, potential problems along a problematic path can be predicted and proactively prevented.

Figure 6. Concept of User-level Mobility Optimization

Challenge #1: Target User Selection

Since these user-specific problems are difficult to detect through network performance monitoring, it is challenging to select the appropriate users for user-level optimization.

We conducted a comprehensive analysis of historical data, including failure history, mobility, and measurement data, to identify users who experienced significantly worse perceived service quality and displayed recurring issues along specific routes or in particular locations.

To address challenges beyond cell-level optimization, we focused on identifying users with poor network performance tied to specific paths or locations. For instance, the following criteria can be considered to determine the target user.

Challenge #2: Detection of Recurring Problems

After identifying the target users, detecting and addressing the recurring problems that they face remains a significant challenge. By integrating both user-level historical data and RAN real-time data, our approach effectively tackles this challenge.

Leveraging AI, we extracted patterns of repeated problems from users’ historical data. Based on these patterns and RAN real-time data, the network can predict whether a repeated problem is likely to occur. When a recurring problem is anticipated, the network can adjust the mobility configuration on a user-specific basis to prevent it.

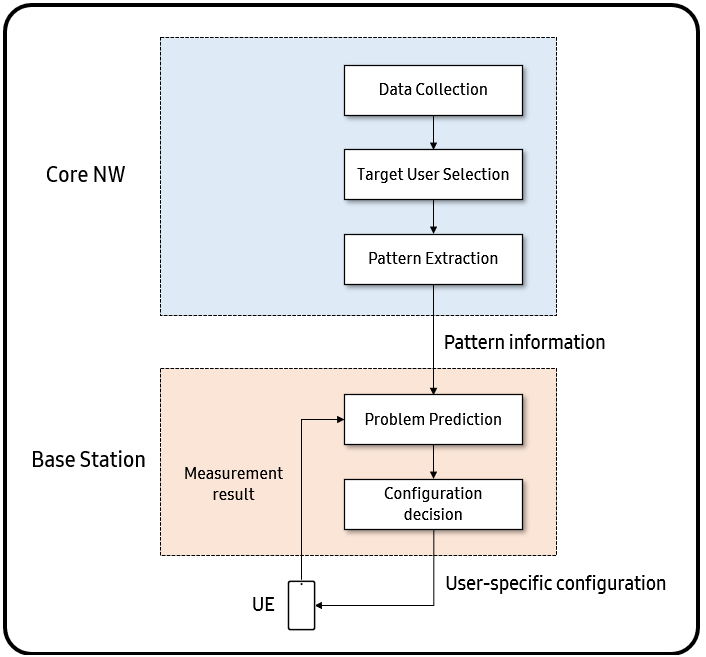

3-2. Operational Flow

Figure 7 shows the operational flow of our User-level Mobility Optimization solution. This process involves coordinated actions executed in both the Core Network (NW) and the base station.

In the Core NW, the system analyzes collected data to identify the connection failure scenarios and selects target users for applying user-level optimization. Using AI, it extracts data patterns from these problem cases, which are then transmitted to the base station where the target user is currently connected.

The base station predicts whether a recurring problem is likely to occur based on real-time measurement data and the patterns previously delivered from the Core NW. If the prediction indicates a high likelihood of the problem, a user-specific configuration is applied to prevent it. These configurations can vary depending on the type of problem, but the most common solution involves adjusting the mobility configuration, such as handing over the target user to a cell operating on an alternative frequency band.

Figure 7. Operational Flow of User-level Mobility Optimization
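The base-station side of this flow can be sketched as a simple check of real-time data against the delivered patterns. This is a rule-based stand-in, not the AI-based predictor described above: the `FailurePattern` fields, the signal threshold, and the `inter_frequency_handover` action label are all hypothetical.

```python
from dataclasses import dataclass
from typing import List, Optional

@dataclass
class FailurePattern:
    """Hypothetical pattern delivered by the Core NW for one target user."""
    route: List[str]            # cell IDs visited just before past failures
    rsrp_threshold_dbm: float   # serving-cell signal level seen before past failures
    action: str                 # user-specific configuration to apply

def predict_and_act(
    recent_cells: List[str],
    serving_rsrp_dbm: float,
    patterns: List[FailurePattern],
) -> Optional[str]:
    """Return the configured action if the user looks likely to hit a known problem."""
    for pattern in patterns:
        if not pattern.route:
            continue  # an empty route carries no information to match on
        on_route = recent_cells[-len(pattern.route):] == pattern.route
        weak_signal = serving_rsrp_dbm <= pattern.rsrp_threshold_dbm
        if on_route and weak_signal:
            return pattern.action
    return None

patterns = [FailurePattern(["A", "B", "C"], -110.0, "inter_frequency_handover")]
action = predict_and_act(["X", "A", "B", "C"], -112.0, patterns)
```

A learned predictor would replace the exact route match and fixed threshold with a likelihood score, but the control flow (match against delivered patterns, then apply a per-user configuration) stays the same.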

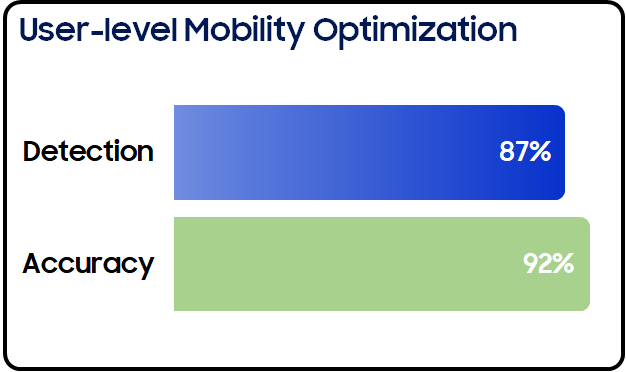

3-3. Verification with Commercial Network Data

We validated our user-level mobility optimization solution using real-world commercial network data in collaboration with operators [4]. The evaluation focused on verifying the solution’s capability to proactively detect connection failures experienced repeatedly by specific users. Specifically, we evaluated two key metrics: the detection rate, which is the share of connection failure events on problematic paths that were identified, and the problem detection accuracy.

As illustrated in Figure 8, our approach demonstrates strong efficacy in resolving user-specific problems. Based on commercial network data, we achieved a detection rate of 87% for connection failure cases involving specific users, with an accuracy of 92%. Further analysis revealed that detection performance varies significantly with individual user behavior patterns, particularly how often users visit problematic paths and encounter the associated issues.

Figure 8. Validation Results
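For readers who want to see how two such metrics can be computed, here is a minimal sketch. It assumes that the detection rate corresponds to recall over actual failure events and that accuracy is the fraction of all predictions that were correct; the post does not give exact definitions, and accuracy could instead refer to precision. The counts are made up for illustration and are not the trial data.

```python
def detection_metrics(true_pos: int, false_neg: int, false_pos: int, true_neg: int):
    """Compute detection rate (recall) and overall accuracy from confusion counts."""
    actual_failures = true_pos + false_neg
    total = true_pos + false_neg + false_pos + true_neg
    detection_rate = true_pos / actual_failures   # share of real failures that were flagged
    accuracy = (true_pos + true_neg) / total      # share of all predictions that were correct
    return detection_rate, accuracy

# Illustrative counts only; not taken from the validation described above.
rate, acc = detection_metrics(true_pos=40, false_neg=10, false_pos=5, true_neg=45)
print(f"detection rate = {rate:.2f}, accuracy = {acc:.2f}")
```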

4. What’s Next: Toward Fully Automated Optimization

Samsung Research is conducting research on how to automate the entire optimization loop, from intelligent parameter selection to model training, testing, and deployment. The ultimate vision is to integrate this system with telco-specific large language models (LLMs), enabling network operators to interact with the optimization system using natural language. Our roadmap is shown in Figure 9.

We are transitioning from manual optimization to AI-based Network-level and User-level Optimization. As the next step, we are preparing for integrated optimization that balances network-level performance and user-perceived service quality. Ultimately, we aim to achieve intent-driven optimization powered by LLMs.

Imagine saying, “Improve video quality in Seoul” or “Enhance Alice’s conference call experience” and having the system automatically adjust relevant parameters across all affected base stations in real time. This goes beyond AI automation. It lays the foundation for a fully self-optimizing network.

Figure 9. Samsung Research’s Roadmap of AI-based Network Optimization

5. Conclusion

In this blog, we have demonstrated how AI can be used to optimize network and user performance. Traditionally, this process has been carried out by human experts, which is time-consuming and cost-intensive. AI, however, identifies patterns in commercial network data and optimizes the behavior of both the network and users in a fraction of the time. We have shown that our AI models deliver significant performance improvements in live networks, and we have outlined the future direction of network intelligence technology at Samsung Research.

By combining domain expertise with AI innovation, Samsung Research is not only enhancing network performance and end-user experiences but also redefining how networks are managed. These advancements reinforce our leadership in intelligent network automation and pave the way for telco-grade AI to become a standard in next-generation communications.

We are excited about what lies ahead; this is just the beginning.

References

[1] A. Vaswani et al., “Attention is all you need,” Advances in Neural Information Processing Systems, 2017.

[2] “SK Telecom, Samsung use AI to optimize 5G base stations,” RCR Wireless News, October 28, 2024, https://www.rcrwireless.com/20241028/5g/sk-telecom-samsung-use-ai-optimize-5g-base-stations

[3] R. Shafin, L. Liu, V. Chandrasekhar, H. Chen, J. Reed, and J. C. Zhang, “Artificial intelligence-enabled cellular networks: A critical path to beyond-5G and 6G,” IEEE Wireless Commun., vol. 27, no. 2, pp. 212-217, Apr. 2020.

[4] “KT and Samsung Electronics Achieve Joint Success in AI-Powered Wireless Network Optimization,” Korea IT Times, June 26, 2025, https://www.koreaittimes.com/news/articleView.html?idxno=142843