
Beyond Heuristics: Forging the AI-Native RAN with AI L2 Radio Resource Scheduling

Samsung Research is preparing for future wireless technologies through convergence research on AI and communications, in which AI-native approaches drive the design and operation of the entire Radio Access Network (RAN) and Core Network (CN). In the Communication-AI Convergence blog series, we introduce the following research topics:

#1 Modem I

#2 Modem II

#3 Protocol/Networking & Operation

#4 Radio Resource Scheduler

This blog will cover the implementation of real-time AI within the radio resource scheduler, exploring how to achieve near-optimal performance in dynamic wireless environments.

1. Introduction

Having dedicated the past three decades to the evolution of communication networks, their algorithms, and artificial intelligence, we see clear evidence that we are on the cusp of a profound paradigm shift. The progression from 5G to future 6G networks signifies more than an incremental increase in bandwidth; it represents the emergence of a vast, heterogeneous ecosystem of interconnected devices. The viability of future-generation services, from autonomous systems and telepresence to city-scale Internet of Things (IoT), relies on solving one fundamental challenge: the intelligent and efficient management of finite radio spectrum in an environment of unprecedented complexity. At the heart of this challenge is the Layer 2 (L2) Medium Access Control (MAC) radio resource scheduler and its algorithms.

Historically, the MAC scheduler has served as the central manager of radio resources, employing heuristic, rule-based algorithms such as Proportional Fair (PF) to manage network access. These static, pre-programmed schedulers were sufficient for previous generations of mobile communication, which were characterized by relatively homogeneous traffic and predictable channel dynamics. However, they no longer meet the demands of the current landscape. The 5G and 5G-Advanced era requires supporting heterogeneous services with diverse Quality of Service (QoS) requirements, ranging from the stringent low-latency demands of URLLC (Ultra-Reliable Low Latency Communications) to the high-throughput needs of eMBB (enhanced Mobile BroadBand), across a stochastic and non-stationary radio environment. This combinatorial increase in complexity renders traditional heuristic or rule-based approaches suboptimal, leading to inefficient spectrum utilization, violations of service-level agreements, and degraded Quality of Experience (QoE).

To address these challenges, this work introduces AI-native, real-time radio resource scheduling. We demonstrate its capacity to adapt autonomously to dynamic network conditions and service loads and to significantly outperform conventional schedulers. The demonstration provides empirical evidence that this approach yields quantifiable gains in spectral efficiency, network throughput, and user satisfaction. We argue that embedding such intelligence directly into the MAC layer is not merely an enhancement but a cornerstone technology, essential for building the truly cognitive and autonomous radio access networks that will define the 6G era.

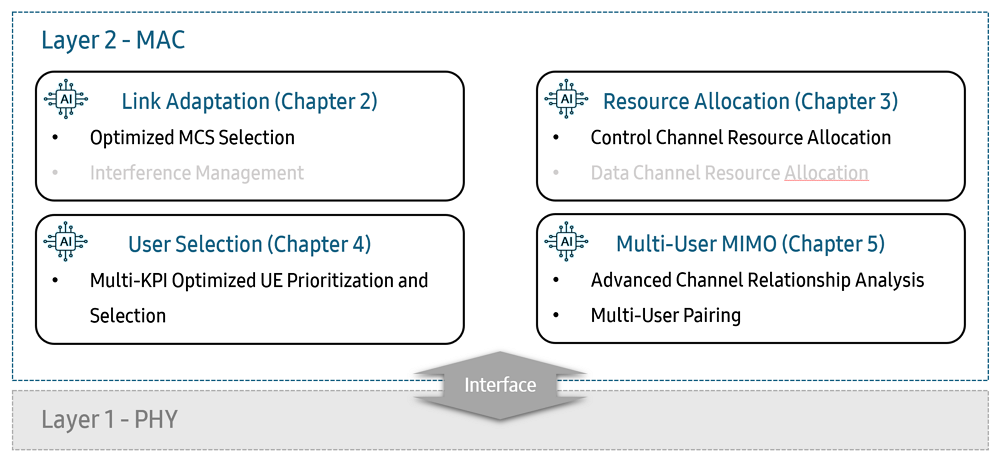

In this blog, we treat scheduling not as a single decision but as an optimization problem over multiple, deeply coupled KPIs. To achieve true system-level optimality, four key functions of an L2 scheduler must be orchestrated in harmony: Link Adaptation, Resource Allocation, User Selection, and Multi-user Multiple-Input Multiple-Output (MU-MIMO) (see Figure 1). In each of these domains, classical methods reach a performance ceiling, whereas real-time AI, particularly Deep Learning (DL) or Reinforcement Learning (RL), can navigate the high-dimensional problem space to unlock unprecedented efficiency. In the following sections, we examine each function's role and its benefits for communication KPIs. Note that some crucial topics, such as inter-cell interference management and AI-based data channel resource allocation, are being generalized and will be presented in future work.

Figure 1. L2 Scheduler’s Key Functions and Overall View

2. Link Adaptation: From Reactive to Predictive Control

Link Adaptation is the process of selecting the most suitable Modulation and Coding Scheme (MCS) for a user's channel conditions, maximizing the data rate while keeping the block error rate (BLER) below a predefined target. The conventional approach is reactive: it determines the MCS from the most recently reported Channel Quality Indicator (CQI), so it is perpetually a step behind, as the channel may have already changed by the time of transmission. The result is inefficient transmission: an MCS that is too high triggers additional Hybrid Automatic Repeat Request (HARQ) retransmissions, while an MCS that is too low leaves capacity unused.
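To make the selection rule concrete, here is a minimal sketch of BLER-constrained MCS selection: pick the highest MCS whose predicted block error rate (BLER) at the given SINR stays at or below the target (10% here, a common eMBB choice). The MCS table and the logistic BLER curve are hypothetical stand-ins for link-level simulation tables; they are not the 3GPP MCS tables and not our implementation.

```python
import math

# Hypothetical MCS table: (spectral efficiency in bit/s/Hz, SINR in dB where BLER = 50%).
# Illustrative values only, not taken from 3GPP TS 38.214.
MCS_TABLE = [
    (0.25, -5.0),
    (0.50, -2.0),
    (1.00, 1.0),
    (2.00, 6.0),
    (3.00, 10.0),
    (4.50, 15.0),
    (5.50, 19.0),
]


def bler(sinr_db: float, mcs: int, slope: float = 1.5) -> float:
    """Hypothetical logistic BLER curve: BLER falls as SINR exceeds the MCS midpoint."""
    _, midpoint = MCS_TABLE[mcs]
    return 1.0 / (1.0 + math.exp(slope * (sinr_db - midpoint)))


def select_mcs(sinr_db: float, bler_target: float = 0.1) -> int:
    """Return the highest MCS whose predicted BLER stays at or below the target."""
    best = 0  # fall back to the most robust MCS if nothing meets the target
    for mcs in range(len(MCS_TABLE)):
        if bler(sinr_db, mcs) <= bler_target:
            best = mcs
    return best
```

With these assumed curves, `select_mcs(12.0)` returns 4. Feeding this function a stale SINR derived from an old CQI report is exactly the reactive weakness described above: if the channel has degraded by transmission time, the chosen MCS overshoots.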

AI enables a paradigm shift to predictive link adaptation. By analyzing time-series data of a user's channel quality, an AI model can accurately forecast the likely channel state for subsequent transmissions. This allows the scheduler to select a more aggressive yet precise MCS, pushing the data rate closer to the true channel capacity. By minimizing prediction errors, the AI can reduce retransmission overhead and approach optimal performance.
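The source does not describe the forecasting model, so the sketch below is only a stand-in to show the reactive-versus-predictive distinction: the reactive rule reuses the last report, while the predictive rule fits a least-squares linear trend to the most recent SINR reports and extrapolates it over the feedback delay. The `window` and `horizon` parameters are hypothetical; an AI predictor would learn far richer temporal patterns than a straight line.

```python
from typing import Sequence


def reactive_estimate(history: Sequence[float]) -> float:
    """Conventional approach: reuse the most recent channel-quality report."""
    return history[-1]


def predictive_estimate(history: Sequence[float], window: int = 4, horizon: int = 1) -> float:
    """Fit a least-squares line to the last `window` samples and extrapolate `horizon` steps."""
    ys = list(history[-window:])
    n = len(ys)
    if n < 2:
        return ys[-1]  # not enough data for a trend
    xs = range(n)
    x_mean = (n - 1) / 2
    y_mean = sum(ys) / n
    sxx = sum((x - x_mean) ** 2 for x in xs)
    sxy = sum((x - x_mean) * (y - y_mean) for x, y in zip(xs, ys))
    slope = sxy / sxx
    intercept = y_mean - slope * x_mean
    return intercept + slope * (n - 1 + horizon)
```

For a channel fading steadily through SINR reports of 15, 13, 11, and 9 dB, `predictive_estimate` returns 7.0 while `reactive_estimate` returns 9.0, so an MCS chosen from the reactive value would overshoot if the fade continues.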

2.1 AI-based Optimal MCS Selection

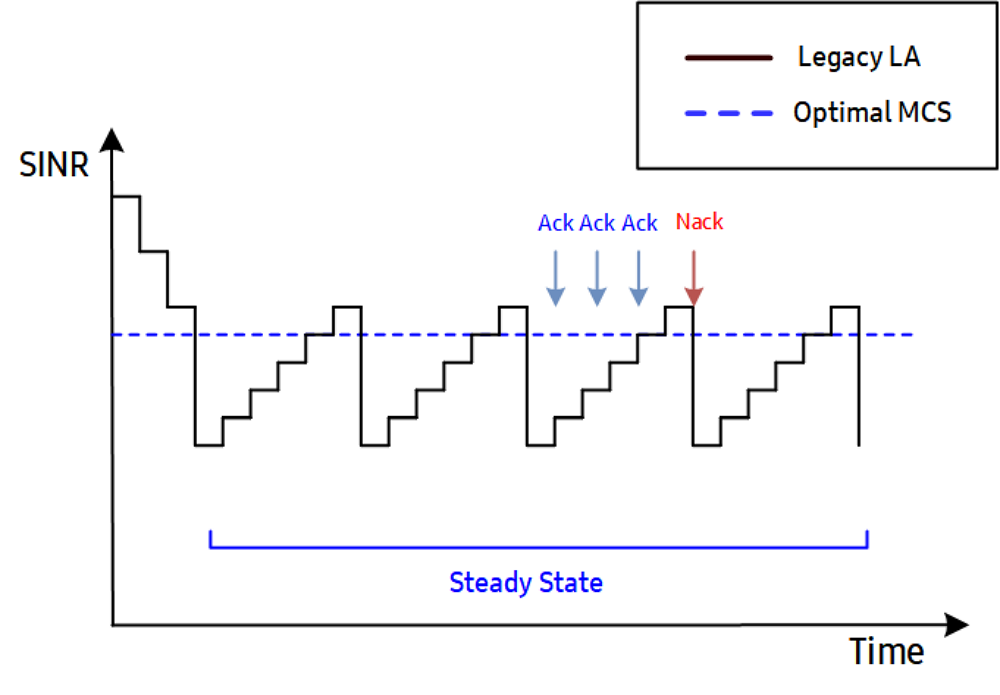

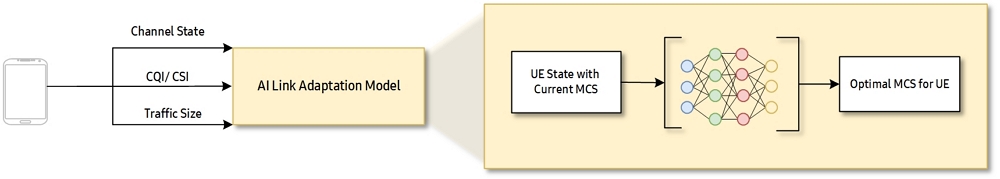

In existing systems, the Outer Loop Link Adaptation (OLLA) algorithm is primarily used to improve transmission efficiency. The estimated signal-to-interference-plus-noise ratio (SINR) is continuously adjusted by an OLLA offset based on the positive and negative acknowledgements (ACK/NACK) sent by the UE [1]. However, because OLLA relies solely on past feedback, its accuracy drops in fast-fading channels, such as those with high-speed mobility. Moreover, even in the stable channel state shown in Figure 2, MCS fluctuations are unavoidable, which degrades overall spectral efficiency. To overcome these limitations, as illustrated in Figure 3, we use AI to predict the optimal MCS based on each user's channel status and traffic characteristics.

Figure 2. AI Link Adaptation Motivation

Figure 3. AI’s Role for Optimized MCS Selection
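For reference, here is a minimal sketch of the widely used OLLA update described above. The step size and offset clamps are illustrative assumptions, not production values; the ratio between the down and up steps is chosen so that the offset stops drifting when the observed BLER equals the target.

```python
class Olla:
    """Outer Loop Link Adaptation: nudges the SINR estimate using ACK/NACK feedback."""

    def __init__(self, bler_target: float = 0.1, step_up_db: float = 0.01,
                 min_offset_db: float = -10.0, max_offset_db: float = 10.0) -> None:
        self.step_up = step_up_db
        # Stationary when (1 - target) * step_up == target * step_down.
        self.step_down = step_up_db * (1.0 - bler_target) / bler_target
        self.offset = 0.0
        self.min_offset, self.max_offset = min_offset_db, max_offset_db

    def update(self, ack: bool) -> None:
        """Raise the offset slightly on ACK, lower it sharply on NACK, then clamp."""
        self.offset += self.step_up if ack else -self.step_down
        self.offset = max(self.min_offset, min(self.max_offset, self.offset))

    def effective_sinr(self, estimated_sinr_db: float) -> float:
        """SINR used for MCS selection: the reported estimate plus the learned offset."""
        return estimated_sinr_db + self.offset
```

With a 10% target, nine ACKs followed by one NACK bring the offset back to zero. The sketch also makes the limitation plain: the offset moves only after feedback arrives, so it always describes the channel as it was, not as it will be.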

The AI model for link adaptation offers several key advantages. Firstly, it significantly increases spectral efficiency by minimizing retransmissions, ensuring more efficient use of available bandwidth. Secondly, it enhances user experience by improving data rates and providing a more consistent and reliable connection, even during sudden channel changes. These benefits are particularly valuable in dynamic environments, such as high-speed mobility scenarios, where traditional algorithms struggle to maintain performance.

In preliminary tests, AI-driven link adaptation improved downlink throughput by 5-10% on average compared with conventional link adaptation. We conducted the experiments in a laboratory environment with 5 real devices (Galaxy S25) operating under medium-low signal strength and non-line-of-sight (NLOS) conditions. The BS ran a commercial virtualized RAN stack with 100 MHz bandwidth per cell and used massive MIMO with 64 antennas for transmitting and receiving signals. This performance gain highlights the potential of AI to optimize network performance and deliver tangible benefits in real-world applications.

3. Resource Allocation: Solving the High-Dimensional Puzzle

Once candidate users are selected for transmission, the scheduler performs air resource allocation, assigning specific time-frequency Resource Blocks (RBs) to each. Resource allocation is a classic non-convex optimization problem in which the allocation to one user directly affects all others through potential interference. Traditional algorithms rely on simplified models or iterative methods that cannot guarantee an optimal solution in real time.

An AI agent, however, can learn the intricate, non-linear relationships between RB assignments and overall cell throughput. It learns to "see" the entire allocation matrix at once, placing users in spectral locations that not only suit their channel conditions but also proactively minimize inter-user interference. It effectively learns a solution to a computationally intractable assignment problem, resulting in a more densely packed and efficient use of the spectrum and thus achieving near-optimal spectral efficiency.
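To see why simple heuristics leave throughput on the table, consider a deliberately simplified toy: three users, three RBs, one RB per user, and a hypothetical rate table. This toy drops the interference coupling, so it is a plain assignment problem rather than the full non-convex problem described above; even so, a greedy pass that gives each RB to the best remaining user misses the optimum, and exhaustive search over all mappings grows factorially with the number of users.

```python
from itertools import permutations
from typing import List, Sequence, Tuple

# Hypothetical achievable rates: RATES[user][rb]. Interference between users is ignored.
RATES = [
    [9, 8, 1],
    [8, 1, 1],
    [1, 1, 5],
]


def greedy_allocation(rates: Sequence[Sequence[float]]) -> Tuple[List[int], float]:
    """Walk RBs in order; give each RB to the not-yet-served user with the highest rate on it."""
    n_users, n_rbs = len(rates), len(rates[0])
    served, owner, total = set(), [-1] * n_rbs, 0.0
    for rb in range(n_rbs):
        candidates = [u for u in range(n_users) if u not in served]
        if not candidates:
            break
        best = max(candidates, key=lambda u: rates[u][rb])
        served.add(best)
        owner[rb] = best
        total += rates[best][rb]
    return owner, total


def exhaustive_allocation(rates: Sequence[Sequence[float]]) -> Tuple[List[int], float]:
    """Try every one-to-one user-to-RB mapping (square case); the cost grows as n!."""
    n = len(rates)
    best_owner, best_total = [], float("-inf")
    for perm in permutations(range(n)):
        total = sum(rates[user][rb] for rb, user in enumerate(perm))
        if total > best_total:
            best_owner, best_total = list(perm), total
    return best_owner, best_total
```

Greedy gives RB 0 to user 0 (rate 9) and ends with a total of 15, whereas the exhaustive search finds the mapping `[1, 0, 2]` with a total of 21 by giving RB 0 to user 1 instead. A learned allocator aims to capture this kind of look-ahead without paying the exhaustive cost.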

3.1. AI-based Control Channel Resource Allocation

In a wireless communication network, the Base Station (BS) is responsible for dynamically scheduling User Equipments (UEs) to ensure efficient utilization of radio resources. Scheduling information is carried by downlink control information (DCI) on the Physical Downlink Control Channel (PDCCH). In the current 5G standard, the PDCCH uses the Control Channel Element (CCE) as its basic resource unit, and the number of CCEs allocated to each DCI transmission (the aggregation level) varies with the PDCCH channel quality from the BS to the UE. Since control channel resources in a slot are limited, efficient resource management is crucial for transmitting multiple DCIs simultaneously.

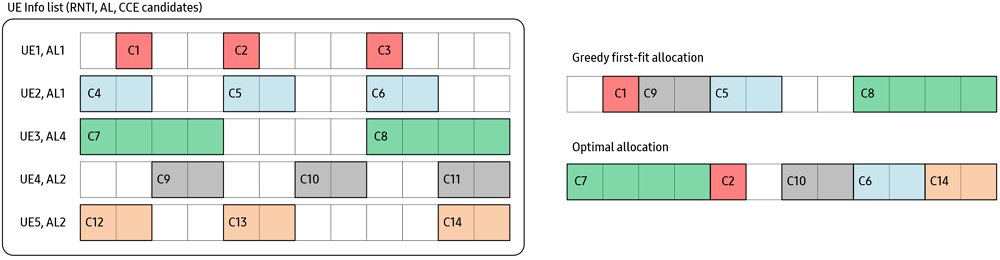

Figure 4. Example of greedy CCE allocation and optimal CCE allocation

The challenge lies in mapping CCEs to multiple UEs in a way that maximizes scheduling opportunities. Conventional allocation often leads to inefficiency: even with CCEs remaining, not all UEs can be scheduled, because each UE needs a certain number of CCEs to decode its DCI reliably given its channel condition, and the CCE allocation rules restrict where those CCEs can be placed. This results in underutilization of valuable control resources and degraded overall system throughput. Figure 4 shows an example of greedy and optimal CCE allocation results, where the efficiency of CCE allocation varies with the allocation algorithm even for the same set of candidate UEs.
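The greedy-versus-optimal gap in Figure 4 can be reproduced on a tiny hypothetical slot. Each UE needs a block of L CCEs (its aggregation level) and may start only at the positions in its search space. The candidate lists below are invented; real NR search spaces follow the hashing rules of 3GPP TS 38.213. Greedy serves UEs in priority order and takes the first free candidate; the optimal search is a small branch-and-bound over all placements, which is exponential in the worst case.

```python
from typing import Dict, List, Sequence, Tuple

# (aggregation level L, candidate starting CCE indices in the UE's search space).
UeRequest = Tuple[int, Sequence[int]]


def fits(occupied: List[bool], start: int, level: int) -> bool:
    return start + level <= len(occupied) and not any(occupied[start:start + level])


def mark(occupied: List[bool], start: int, level: int, value: bool) -> None:
    occupied[start:start + level] = [value] * level


def greedy_cce(n_cce: int, ues: Sequence[UeRequest]) -> Dict[int, int]:
    """Serve UEs in priority order; each takes the first free candidate in its search space."""
    occupied = [False] * n_cce
    result: Dict[int, int] = {}
    for ue, (level, starts) in enumerate(ues):
        for start in starts:
            if fits(occupied, start, level):
                mark(occupied, start, level, True)
                result[ue] = start
                break
    return result


def optimal_cce(n_cce: int, ues: Sequence[UeRequest]) -> Dict[int, int]:
    """Branch-and-bound search for the allocation that schedules the most UEs."""
    occupied = [False] * n_cce
    current: Dict[int, int] = {}
    best: Dict[int, int] = {}

    def search(ue: int) -> None:
        nonlocal best
        if len(current) + (len(ues) - ue) <= len(best):
            return  # even scheduling every remaining UE cannot beat the incumbent
        if ue == len(ues):
            best = dict(current)
            return
        level, starts = ues[ue]
        for start in starts:
            if fits(occupied, start, level):
                mark(occupied, start, level, True)
                current[ue] = start
                search(ue + 1)
                del current[ue]
                mark(occupied, start, level, False)
        search(ue + 1)  # branch where this UE is left unscheduled

    search(0)
    return best


# Hypothetical slot with 8 CCEs and two UEs at aggregation level 4.
UES: List[UeRequest] = [(4, [0, 4]), (4, [0])]
```

Here `greedy_cce(8, UES)` returns `{0: 0}`: UE 0 grabs CCEs 0-3, UE 1's only candidate is blocked, and CCEs 4-7 stay empty. `optimal_cce(8, UES)` returns `{0: 4, 1: 0}` and schedules both UEs.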

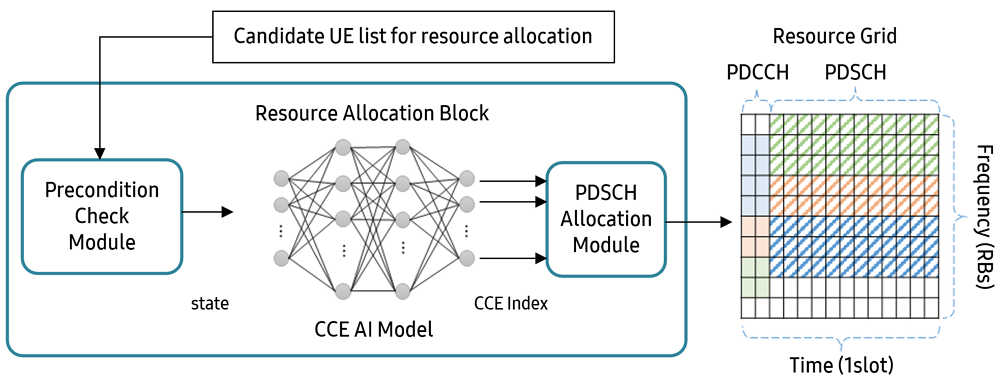

To address this challenge, we have developed an AI-based CCE allocation algorithm that learns the optimal mapping strategy (Figure 5). The model is built on a reinforcement learning framework that allocates CCEs to each UE while considering the allocation status of other UEs. To ensure real-time operation within a slot, we minimized inference latency through a lightweight model design and hardware acceleration.

Figure 5. AI-based CCE allocation model and inferencing
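The source does not specify the model's state, action, or reward design. As a hedged illustration of allocating CCEs "while considering the allocation status of other UEs", the toy below casts one slot's allocation as a sequential decision problem: the state is the index of the next UE plus the current CCE occupancy, an action is one of that UE's free candidate positions or skipping it, and the reward is +1 per scheduled UE. Tabular Q-learning stands in for the lightweight neural model and is only workable for tiny instances; the 8-CCE instance with two aggregation-level-4 UEs and their candidate positions is invented.

```python
import random
from collections import defaultdict
from typing import DefaultDict, Dict, List, Optional, Sequence, Tuple

N_CCE = 8
# Hypothetical instance: (aggregation level, candidate start CCEs) per UE, in scheduling order.
UES: Sequence[Tuple[int, Sequence[int]]] = [(4, [0, 4]), (4, [0])]

State = Tuple[int, Tuple[bool, ...]]  # (index of the UE to place, CCE occupancy)
Action = Optional[int]                # start CCE, or None to leave the UE unscheduled
QTable = DefaultDict[Tuple[State, Action], float]


def legal_actions(state: State) -> List[Action]:
    ue, occ = state
    level, starts = UES[ue]
    free: List[Action] = [s for s in starts if s + level <= N_CCE and not any(occ[s:s + level])]
    return free + [None]


def step(state: State, action: Action) -> Tuple[State, float]:
    ue, occ = state
    if action is None:
        return (ue + 1, occ), 0.0
    level = UES[ue][0]
    new_occ = tuple(o or action <= i < action + level for i, o in enumerate(occ))
    return (ue + 1, new_occ), 1.0  # reward: one more DCI placed


def train(episodes: int = 2000, alpha: float = 0.5, epsilon: float = 0.2, seed: int = 0) -> QTable:
    """Tabular, undiscounted Q-learning; one episode allocates one slot."""
    rng = random.Random(seed)
    q: QTable = defaultdict(float)
    for _ in range(episodes):
        state: State = (0, (False,) * N_CCE)
        while state[0] < len(UES):
            actions = legal_actions(state)
            if rng.random() < epsilon:
                action = rng.choice(actions)  # explore
            else:
                action = max(actions, key=lambda a: q[(state, a)])  # exploit
            nxt, reward = step(state, action)
            done = nxt[0] == len(UES)
            future = 0.0 if done else max(q[(nxt, a)] for a in legal_actions(nxt))
            q[(state, action)] += alpha * (reward + future - q[(state, action)])
            state = nxt
    return q


def rollout(q: QTable) -> Dict[int, int]:
    """Follow the learned greedy policy and return {ue: start_cce}."""
    state: State = (0, (False,) * N_CCE)
    allocation: Dict[int, int] = {}
    while state[0] < len(UES):
        action = max(legal_actions(state), key=lambda a: q[(state, a)])
        if action is not None:
            allocation[state[0]] = action
        state, _ = step(state, action)
    return allocation
```

On this instance the learned policy places the first UE at CCE 4 so that the second UE can use CCE 0, an allocation that a first-fit greedy pass in the same order misses.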

To evaluate the AI-based CCE allocation algorithm, we conducted experiments in a laboratory environment with 12 real UEs (Galaxy S25) operating under medium-low signal strength and non-line-of-sight (NLOS) conditions. The BS ran a commercial virtualized RAN stack with 100 MHz bandwidth per cell and used massive MIMO with 64 antennas for transmitting and receiving signals. The results showed that the AI-based CCE allocation algorithm improved the PDCCH allocation success ratio and downlink throughput by 1.9% and 1.5%, respectively.

4. User Selection: Beyond Greedy Metrics

User selection involves choosing the subset of users to be scheduled in a Transmission Time Interval (TTI). Heuristic user selection typically relies on simplified, greedy metrics, such as instantaneous channel quality or a fairness-throughput tradeoff (e.g., proportional fairness). These methods can be effective, but they do not consider the QoS requirements of different services or the long-term impact of prioritizing one user over another.
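As a baseline for the discussion that follows, here is a minimal sketch of PF user selection as it is commonly described (see [2]): rank users by the ratio of instantaneous achievable rate r_i(t) to exponentially averaged throughput R_i(t), then update the averages. The averaging time constant `tc` is an assumed parameter.

```python
from typing import List, Sequence


def pf_select(inst_rates: Sequence[float], avg_tput: Sequence[float], k: int) -> List[int]:
    """Return the k users with the largest PF metric r_i(t) / R_i(t)."""
    metric = [r / max(avg, 1e-9) for r, avg in zip(inst_rates, avg_tput)]  # guard R_i = 0
    return sorted(range(len(metric)), key=lambda i: metric[i], reverse=True)[:k]


def update_average(avg_tput: Sequence[float], inst_rates: Sequence[float],
                   selected: Sequence[int], tc: float = 100.0) -> List[float]:
    """EWMA update R_i <- (1 - 1/tc) R_i + (1/tc) r_i, with r_i = 0 for unscheduled users."""
    beta = 1.0 / tc
    chosen = set(selected)
    return [(1 - beta) * avg + beta * (rate if i in chosen else 0.0)
            for i, (avg, rate) in enumerate(zip(avg_tput, inst_rates))]
```

With instantaneous rates `[10, 4, 6]` and averages `[10, 1, 3]`, `pf_select(..., k=1)` picks user 1 even though it has the lowest instantaneous rate, because it has been served least. Note that nothing in the metric knows about buffer status, latency budgets, or service type, which is the gap described above.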

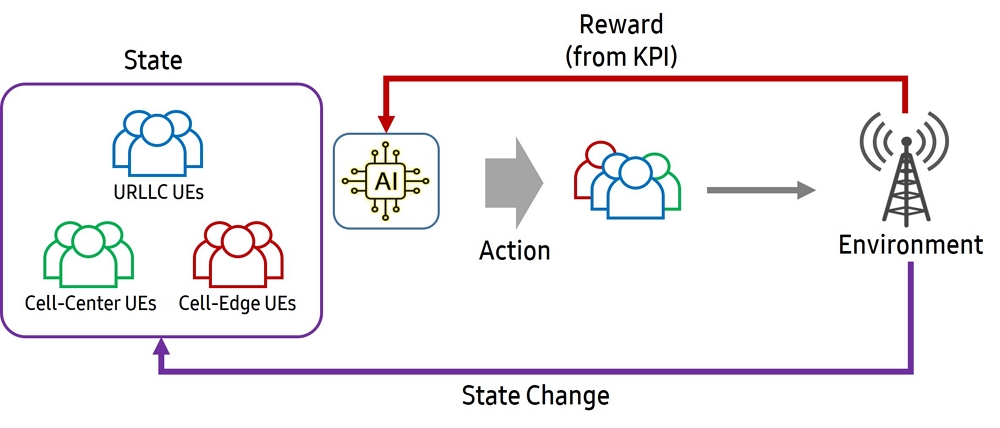

An AI-powered scheduler turns this into a holistic optimization problem. By processing a rich state space, including user buffer status, historical channel data, latency requirements, and service priority, a Deep Reinforcement Learning (DRL) agent learns a policy that maximizes a long-term, network-wide reward. For example, it can learn to prioritize a URLLC user with a near-empty buffer to prevent a service failure, even if that user's channel quality is momentarily poor, because this decision contributes more to overall system utility than serving a high-throughput user with a large buffer. This is how AI moves beyond simple fairness toward true QoS-aware optimality.

4.1. Multi-KPI Optimized UE Prioritization and Selection

The PF scheduler, a representative and widely known example, balances cell throughput and fairness using a metric that combines each user's channel condition and transmission history [2]. However, it struggles to meet complex Key Performance Indicator (KPI) requirements because it cannot effectively account for other KPIs, such as Internet Protocol (IP) throughput (3GPP TS 28.554), a key indicator of user experience. Figure 6 illustrates this limitation.

Figure 6. Multi-KPI Achievability in RAN KPIs

One way to address this limitation is to combine the existing PF scheduler’s metric with an additional metric that considers other KPIs. However, as the number of metrics increases to satisfy various KPI requirements, determining the appropriate weight for each metric becomes increasingly complex, and it becomes more difficult to predict the scheduling outcome in advance.

Figure 7. Reinforcement Learning based AI User Selection

DRL can be used to overcome this problem. The scheduler takes the main KPIs, observed as outcomes of its scheduling actions over a certain period, as the reward and gradually learns the optimal scheduling policy through this process. Furthermore, since tradeoffs exist among KPIs, defining the reward as a weighted sum of the KPIs allows the scheduler to be customized and trained according to the performance goals set by the network service provider. Figure 7 illustrates the learning framework of the RL-based scheduler.
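A weighted-sum KPI reward can be sketched in a few lines. The KPI names, the normalization by operator-chosen targets, and both weight profiles are hypothetical; the source states only that the reward is a weighted sum of KPIs. The example shows how the operator's weights decide which of two scheduling outcomes the agent is pushed toward.

```python
from typing import Mapping


def kpi_reward(kpis: Mapping[str, float], targets: Mapping[str, float],
               weights: Mapping[str, float]) -> float:
    """Weighted sum of KPIs, each divided by a target so that the terms are comparable."""
    return sum(w * kpis[name] / targets[name] for name, w in weights.items())


TARGETS = {"cell_tput_mbps": 1000.0, "ip_tput_mbps": 50.0}
# Two hypothetical outcomes of one observation period.
OUTCOME_A = {"cell_tput_mbps": 800.0, "ip_tput_mbps": 20.0}
OUTCOME_B = {"cell_tput_mbps": 700.0, "ip_tput_mbps": 40.0}
# Two hypothetical operator profiles.
CAPACITY_FIRST = {"cell_tput_mbps": 1.0, "ip_tput_mbps": 0.1}
EXPERIENCE_FIRST = {"cell_tput_mbps": 0.5, "ip_tput_mbps": 1.0}
```

Under `CAPACITY_FIRST`, outcome A scores higher (0.84 versus 0.78); under `EXPERIENCE_FIRST`, outcome B wins (1.15 versus 0.80). A KPI where lower is better, such as latency, would need a negative weight or an inverted normalization in this scheme.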

We first took a stepwise approach to verify the potential of AI-based scheduling before tackling the full complexity of multi-KPI optimization, training the scheduler model to improve a single KPI: IP throughput. The model successfully learned to prioritize users for scheduling, improving user-perceived throughput by approximately 150% compared with the PF baseline. We conducted these experiments on a commercial virtualized RAN stack with 100 MHz bandwidth per cell, using a UE emulator configured with 90 UEs at mixed locations (strong, medium, and weak signal strength). This result demonstrates that AI-based scheduling can learn and reinforce behavior that improves specific KPIs without explicit rule-based adjustments.

This approach enables the scheduler to discover an optimal policy based on actual network conditions and user characteristics, rather than relying on a predefined, fixed combination of metrics. Consequently, it can adapt flexibly to diverse traffic patterns and dynamic wireless environments, thereby achieving the target KPIs.

5. Multi-user MIMO: Taming Combinatorial Complexity

MU-MIMO is one of the key technologies of 5G systems, significantly enhancing spectral efficiency by simultaneously serving multiple spatially separated users on the same time-frequency resource [3-4]. The BS performs MU-MIMO in the following steps:

Step 1. Channel acquisition: The BS acquires the channel vectors between itself and the users. In a TDD system, the BS acquires uplink (UL) channel information through the sounding reference signal (SRS) and, based on channel reciprocity, uses it as downlink (DL) channel information for MU-MIMO operations.

Step 2. Channel relationship analysis: The BS calculates the spatial relationships between users based on the acquired channel information. This relationship information serves as a key indicator of the channel orthogonality between users and the efficiency of MU-MIMO pairing. Calculating channel relationships involves a well-known tradeoff between computational complexity and accuracy.

Step 3. MU pairing: Based on the channel relationships, the BS performs MU scheduling to optimize a performance metric such as PF. It selects user groups that maximize spatial multiplexing gain while minimizing inter-user interference, by choosing users with low mutual spatial correlation.

Step 4. Beamforming: The BS generates beams for each selected user using techniques such as Singular Value Decomposition (SVD), Zero-Forcing (ZF), Block Diagonalization (BD), and Regularized Zero-Forcing (RZF) to minimize interference between users.
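Steps 2 to 4 above can be sketched end to end in plain Python. This is not our commercial algorithm: the normalized correlation metric, the 0.3 threshold, the four-layer cap, and the toy channels are assumptions, and the grouping rule is a simplified pairwise variant of greedy semi-orthogonal user selection in the spirit of [3]. Step 4 uses the textbook zero-forcing precoder W = H^H (H H^H)^{-1} without power normalization; a real system would use an optimized linear algebra library.

```python
import math
from typing import List, Sequence

Matrix = List[List[complex]]


def correlation(h_i: Sequence[complex], h_j: Sequence[complex]) -> float:
    """Step 2: |h_i^H h_j| / (||h_i|| ||h_j||); 0 for orthogonal, 1 for collinear channels."""
    inner = sum(a.conjugate() * b for a, b in zip(h_i, h_j))
    norms = math.sqrt(sum(abs(a) ** 2 for a in h_i) * sum(abs(b) ** 2 for b in h_j))
    return abs(inner) / norms


def greedy_mu_group(channels: Sequence[Sequence[complex]], priority: Sequence[float],
                    max_layers: int = 4, max_corr: float = 0.3) -> List[int]:
    """Step 3: visit users by descending priority (e.g., PF metric); admit a user only if
    its correlation with every admitted user is below max_corr."""
    group: List[int] = []
    for u in sorted(range(len(channels)), key=lambda i: priority[i], reverse=True):
        if len(group) == max_layers:
            break
        if all(correlation(channels[u], channels[g]) < max_corr for g in group):
            group.append(u)
    return group


def hermitian(a: Matrix) -> Matrix:
    return [[a[r][c].conjugate() for r in range(len(a))] for c in range(len(a[0]))]


def matmul(a: Matrix, b: Matrix) -> Matrix:
    return [[sum(a[i][k] * b[k][j] for k in range(len(b))) for j in range(len(b[0]))]
            for i in range(len(a))]


def inverse(a: Matrix) -> Matrix:
    """Gauss-Jordan elimination with partial pivoting (small matrices only)."""
    n = len(a)
    aug = [list(row) + [complex(i == j) for j in range(n)] for i, row in enumerate(a)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(aug[r][col]))
        aug[col], aug[pivot] = aug[pivot], aug[col]
        p = aug[col][col]
        aug[col] = [x / p for x in aug[col]]
        for r in range(n):
            if r != col:
                f = aug[r][col]
                aug[r] = [x - f * y for x, y in zip(aug[r], aug[col])]
    return [row[n:] for row in aug]


def zero_forcing(h: Matrix) -> Matrix:
    """Step 4: W = H^H (H H^H)^{-1} for the K x M matrix of grouped user channels (K <= M)."""
    h_h = hermitian(h)
    return matmul(h_h, inverse(matmul(h, h_h)))


# Toy 4-antenna channels for four users (hypothetical).
CHANNELS = [
    [1 + 0j, 0j, 0j, 0j],
    [0.9 + 0j, 0.1j, 0j, 0j],  # nearly collinear with user 0
    [0j, 1 + 0j, 0j, 0j],
    [0j, 0j, 1 + 0j, 1j],
]
group = greedy_mu_group(CHANNELS, priority=[3.0, 2.5, 2.0, 1.0])
precoder = zero_forcing([list(CHANNELS[u]) for u in group])
```

On the toy channels, user 1 is rejected because it is almost collinear with user 0, so the group is `[0, 2, 3]`. Multiplying the grouped channel matrix by the precoder gives the identity matrix, meaning each user's stream arrives free of interference from the other streams.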

However, the primary challenge of MU-MIMO lies in the user grouping problem: selecting users with sufficiently orthogonal channels to minimize inter-user interference. This is an NP-hard combinatorial problem, and traditional methods rely on heuristics that are inherently imperfect and computationally expensive for identifying compatible user groups. Commercial systems therefore often employ sub-optimal strategies that balance computational demands against acceptable performance [5].
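To see why exhaustive search over user groups is ruled out in real time, count the candidate groups for N users when up to K of them may be co-scheduled. The choice K = 4 is an illustrative assumption; N = 12 matches the per-cell UE count used in our simulations, and N = 24 shows the effect of doubling it:

$$
\sum_{k=1}^{4}\binom{12}{k} = 12 + 66 + 220 + 495 = 793,
\qquad
\sum_{k=1}^{4}\binom{24}{k} = 24 + 276 + 2024 + 10626 = 12950 .
$$

Doubling the number of users multiplies the search space by roughly 16, every candidate group needs its own precoder and rate evaluation, and a frequency-selective scheduler may have to repeat the search per resource block group in every slot.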

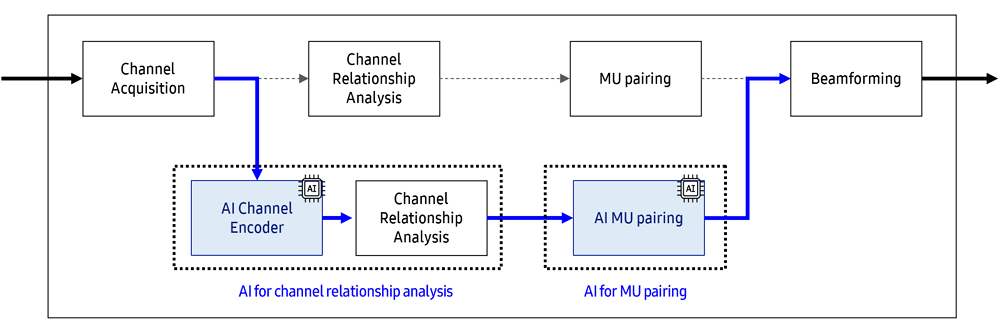

AI is leveraged to reduce operational complexity while achieving performance improvements from two perspectives: assisting channel relationship analysis and replacing traditional MU pairing approaches, as shown in Figure 8.

Figure 8. Leveraging AI for MU-MIMO

5.1. AI Encoder-based Advanced Channel Relationship Analysis

An AI Channel Encoder transforms received channel vectors from SRS into latent vectors required for MU scheduling. The encoder extracts essential features from channel vectors, converting complex two-dimensional channel vectors into real one-dimensional latent space representations for efficient channel relationship analysis. In the encoding process, SRS channel estimation results are converted into real-valued latent vectors, similar to extracting features from images, where underlying channel structures are identified and captured. These vectors are then analyzed to determine mutual relationships, which are incorporated into each vector to capture interdependencies between users, thereby enhancing MU pairing accuracy. The proposed AI-Channel-Encoder provides several benefits:

Reduced Complexity: By selecting an appropriate loss function, channel relationships are calculated using simplified distance metrics instead of computationally intensive complex-valued operations. For example, if the BS has 64 antennas and the length of the latent vector is 32, computational complexity is reduced by 87.5%.

Enhanced Performance: Unlike conventional methods that reduce SRS dimensionality, which often yields imprecise channel relationships, latent vectors enable more accurate channel relationship analysis by retaining the essential SRS information in compressed form. Based on system-level simulations of 12 cells (100 MHz bandwidth per cell, massive MIMO with 64 antennas at the BS) with 12 UEs per cell, we demonstrated that the AI-Channel-Encoder achieved a 5% gain in DL throughput compared with conventional methods.

Improved Scalability: The lightweight design efficiently manages a large number of users, making it suitable for high-density scenarios.
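The 87.5% figure in the Reduced Complexity item can be reproduced by counting real multiplications per user pair, under two assumptions of ours: a complex multiplication costs four real multiplications, and the latent-space metric is a squared Euclidean distance needing one multiplication per element (additions are ignored in both cases):

$$
\begin{aligned}
\text{complex inner product } h_i^{H} h_j &: \; 64 \times 4 = 256 \text{ real multiplications},\\
\text{latent distance } \lVert z_i - z_j \rVert^{2} &: \; 32 \times 1 = 32 \text{ real multiplications},\\
\text{reduction} &: \; 1 - \tfrac{32}{256} = 0.875 = 87.5\%.
\end{aligned}
$$

Since the BS evaluates N(N-1)/2 user pairs, this per-pair saving applies to every pair and matters most in the high-density scenarios mentioned above.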

5.2. AI-based Multi-User Pairing

Selecting the appropriate users for MU-MIMO transmission has a critical impact on system performance. The problem is NP-hard, so finding the optimal solution is computationally infeasible within real-time constraints. Commercial systems employ low-complexity sub-optimal algorithms for real-time operation but often fail to fully exploit the performance potential of MU-MIMO [5]. AI-MU-Pairing aims to use machine learning to explore the complex solution space more efficiently than commercial algorithms, through two approaches:

Supervised Learning: Supervised learning requires labels representing optimal or near-optimal MU pairs, which are generated using high-complexity algorithms that are impractical for commercial deployment. Once trained, the AI model replicates these results with significantly reduced computational complexity. The primary challenge lies in generating high-quality labels with limited computational resources, as an exhaustive search remains infeasible.

Reinforcement Learning: Reinforcement learning is particularly well suited to problems where optimal solutions are hard to find, making it a good fit for the MU-pairing problem. It removes the need for labeling algorithms and their associated complexity, offering the potential for near-optimal performance through efficient exploration of the search space. The main challenge is designing a practical reward function, since RL performance depends heavily on it.
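To illustrate the supervised approach, the sketch below generates one training label by exhaustive search: for a channel snapshot, it scores every single user and every pair with a zero-forcing sum rate and returns the best group. The source says only that labels come from high-complexity algorithms; this particular search, the restriction to groups of at most two users, the equal power split, and the SNR value are our assumptions. For two users, ZF reduces each user's effective gain from ||h_i||^2 to ||h_i||^2 (1 - rho^2), where rho is their normalized channel correlation.

```python
import math
from itertools import combinations
from typing import Sequence, Tuple

Channel = Sequence[complex]


def gain(h: Channel) -> float:
    return sum(abs(x) ** 2 for x in h)


def rho_sq(h_i: Channel, h_j: Channel) -> float:
    """Squared normalized correlation |h_i^H h_j|^2 / (||h_i||^2 ||h_j||^2)."""
    inner = sum(a.conjugate() * b for a, b in zip(h_i, h_j))
    return abs(inner) ** 2 / (gain(h_i) * gain(h_j))


def zf_sum_rate(channels: Sequence[Channel], group: Tuple[int, ...], snr: float) -> float:
    """Sum rate in bit/s/Hz with an equal power split and ZF; closed form for 1 or 2 users."""
    if len(group) == 1:
        return math.log2(1 + snr * gain(channels[group[0]]))
    i, j = group
    loss = 1 - rho_sq(channels[i], channels[j])  # ZF penalty from channel overlap
    p = snr / 2
    return (math.log2(1 + p * gain(channels[i]) * loss)
            + math.log2(1 + p * gain(channels[j]) * loss))


def best_group_label(channels: Sequence[Channel], snr: float = 10.0) -> Tuple[int, ...]:
    """Exhaustive search over all single users and pairs; the argmax is the training label."""
    users = range(len(channels))
    candidates = [(u,) for u in users] + list(combinations(users, 2))
    return max(candidates, key=lambda g: zf_sum_rate(channels, g, snr))


# Hypothetical 2-antenna channel snapshot for three users.
CHANNELS = [
    [1 + 0j, 0j],
    [0.95 + 0j, 0.3 + 0j],  # strongly correlated with user 0
    [0j, 1 + 0j],
]
```

Here `best_group_label(CHANNELS)` returns `(0, 2)`, the orthogonal pair. Even restricted to pairs, the candidate count grows quadratically with the number of users, and allowing larger groups makes it grow combinatorially, which is why generating high-quality labels is the bottleneck noted above.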

The vision of AI-MU-Pairing is to achieve improved performance under real-time constraints, offering a practical balance between computational feasibility and solution quality that traditional approaches struggle to achieve. To this end, we are exploring both supervised learning and reinforcement learning approaches to address this complex optimization challenge effectively. Based on system-level simulations of 12 cells (100 MHz bandwidth per cell, massive MIMO with 64 antennas at the BS) with 12 UEs per cell, our preliminary results indicated an average DL throughput improvement of over 10% in specific cases.

6. Conclusion

In summary, conventional heuristic-based L2 schedulers are insufficient for managing the combinatorial complexity inherent in 5G and future 6G networks. These legacy systems cannot adequately address the coupled, multi-objective optimization problem spanning Link Adaptation, User Selection, Resource Allocation, and Multi-user MIMO, which is essential for heterogeneous service multiplexing. This blog has explored AI-native L2 schedulers as the enabling technology to transcend these limitations. Deep learning-based AI transforms the scheduler from a reactive one into a proactive one that optimizes long-term, network-wide objectives rather than myopic metrics, enabling predictive and holistic resource management. The development of an AI-native L2 scheduler is, therefore, a foundational technology for realizing the competitive edge in performance and service guarantees envisioned for the 6G era.

References

[1] E. Peralta, G. Pocovi, L. Kuru, K. Jayasinghe, and M. Valkama, “Outer loop link adaptation enhancements for ultra reliable low latency communications in 5G,” in Proc. IEEE 95th Veh. Technol. Conf., Jun. 2022, pp. 1-7.

[2] Y. Barayan and I. Kostanic, “Performance evaluation of proportional fairness scheduling in LTE,” in Proc. World Congr. Eng. Comput. Sci., vol. 2, 2013, pp. 712–717.

[3] T. Yoo and A. Goldsmith, “On the optimality of multiantenna broadcast scheduling using zero-forcing beamforming,” IEEE J. Sel. Areas Commun., vol. 24, no. 3, pp. 528–541, Mar. 2006.

[4] T. L. Marzetta, “Massive MIMO: An introduction,” Bell Labs Technol. J., vol. 20, pp. 11–22, Mar. 2015.

[5] E. Castañeda, A. Silva, A. Gameiro, and M. Kountouris, “An overview on resource allocation techniques for multi-user MIMO systems,” IEEE Commun. Surveys Tuts., vol. 19, no. 1, pp. 239–284, Feb. 2017.