Communications

From AI Concept to Real World: AI-powered Modem for Next Samsung Cellular Networks


Samsung Research is preparing for future wireless technologies through convergence research on AI and communications, in which AI-native approaches drive the design and operation of the entire RAN (Radio Access Network) and CN (Core Network). In the Communications-AI Convergence blog series, we plan to introduce various research topics in this area:

- #2. Modem II
- #3. Protocol/Networking & Operation
- #4. Radio Resource Scheduler

This blog covers AI applications to channel estimation, channel prediction, and signal validation, which have significant impacts on spectral efficiency and transmission performance.

1. Introduction of AI-powered Cellular Network

In recent years, artificial intelligence (AI) has emerged as a promising tool for advancing the performance of wireless communication systems. Numerous studies have outlined the necessity of AI-native architectures in future 5G Advanced and 6G networks, highlighting AI’s ability to address long-standing issues in efficiency, reliability, and sustainability [1, 2]. Samsung has taken early steps to explore this potential by launching pilot projects centered on applying AI at the cell site. This blog presents one of the first testbeds for cell site AI applications, designed to fill the gap in real-time AI deployments that yield tangible benefits.

Our discussion focuses on two main areas. First, we introduce Samsung’s virtualized RAN (vRAN) infrastructure, which is equipped with an AI engine for AI evaluation. Second, we implement an AI-based L1 function, channel estimation (CE), within the vRAN and evaluate its performance. Although AI continues to attract attention across the wireless domain, its practical integration at L1/L2 still raises questions regarding feasibility and measurable gains in real-world networks. Through this blog, we propose a field-driven approach to accelerate AI adoption in RAN, and showcase a complete workflow from model design to deployment in a commercial system. The AI engine is embedded in a commercial virtual distributed unit (vDU), and its effectiveness is validated through end-to-end (E2E) over-the-air (OTA) testing.

At MWC 2025, Samsung showcased a virtualized network architecture enhanced with AI capabilities [3]. As part of this initiative, our testbed demonstrates the feasibility of deploying AI-driven L1 functions at the cell site using a vDU equipped with an AI engine. This setup shows that AI models can be seamlessly integrated into the network elements’ processing pipeline, enabling direct validation of their performance and practical benefits within the live network infrastructure.

Figure 1. Samsung’s virtualized network deployment with AI processing

Figure 1 illustrates how AI processing is integrated across the virtualized network, from centralized to edge and cell-site levels, each playing a critical role in realizing intelligent RAN behavior. At the cell site, closest to end users, there is a growing demand for AI solutions that can improve air-link performance on millisecond timescales, enabled by accurate channel condition estimation. Centralized architectures, however, often struggle to meet these real-time requirements, particularly when AI inference is involved. To address this, Samsung adopts a distributed network design in which AI acceleration hardware is deployed not only at central data centers but also at the edge and cell-site levels. Once a virtualized platform is established on a given network element, it can flexibly host AI-driven functionalities, whether for service optimization or internal RAN data processing.

2. AI-powered Samsung Network for vRAN

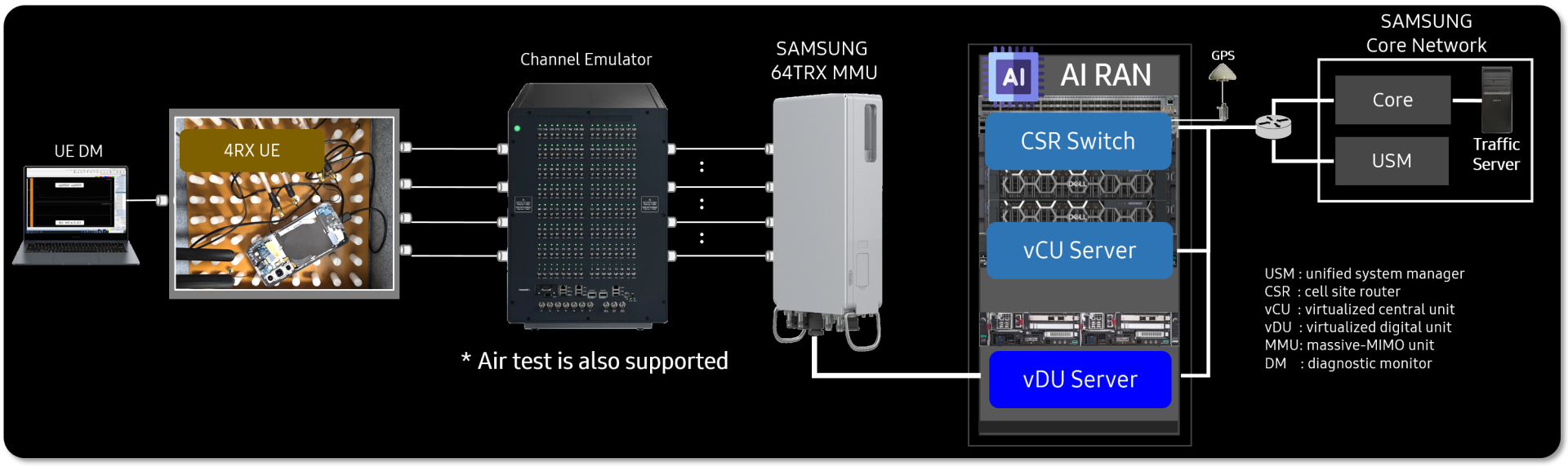

Figure 2. AI-powered Samsung network diagram for AI RAN

Figure 2 presents Samsung’s cell site AI RAN testbed, built on commercial network infrastructure and augmented with an AI engine to enable AI-based L1/L2 processing. In this setup, AI workloads are offloaded to the AI processor, allowing the AI-CE function to run in real time. This on-site architecture offers a realistic testbed for evaluating AI-driven features under real-world network conditions. To assess its performance, we conduct E2E evaluations using traffic between a 64-TRX MMU and S24 user equipment (UE). The framework supports streamlined deployment, testing, and optimization of AI models across both physical and virtualized environments, helping accelerate innovation throughout the RAN stack.

3. AI-based Downlink Enhancement

With the architectural foundation in place, we turn to a concrete example of AI deployed at the cell site: improving real-time uplink CE and downlink beamforming using Sounding Reference Signals (SRSs). The SRS serves as a key uplink reference that lets the base station gather the channel information needed for downlink precoding and beamforming, so it directly affects overall system performance and reliability. However, conventional CE techniques often struggle in realistic scenarios due to noise, interference, and the fast-changing nature of wireless channels. To address these challenges, we apply AI methods to improve the accuracy and robustness of SRS-CE.

Our main approach centers on AI-based denoising of the instantaneous SRS signal to recover a cleaner and more reliable representation of the channel. This improves the precision of downlink beamforming, which in turn contributes to increased throughput. While additional AI functions like channel prediction can complement this, our focus in this blog is on the enhancement of real-time CE through AI-driven denoising.

3-1. AI-based SRS Channel Estimation

Figure 3. An illustration of AI-SRS-CE solution

Denoising plays a pivotal role in SRS-CE, as noise can severely reduce the accuracy of the estimated channel and negatively affect beamforming performance. Interestingly, the problem of denoising SRS signals shares many similarities with image denoising—an area where AI has shown remarkable success [4]. In multiple-input multiple-output (MIMO)-based wireless systems, channel responses are often impaired by multiple noise sources, including thermal noise, interference, and multipath effects. Extracting accurate channel information from such noisy SRS input is conceptually similar to reconstructing clean images from degraded ones. This parallel serves as a strong rationale for applying AI-powered denoising methods—originally developed for image restoration—to the SRS-CE domain.

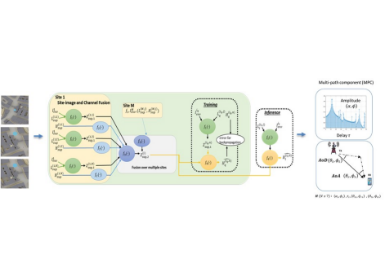

As shown in Figure 3, our AI-SRS-CE architecture is composed of three key blocks: a pre-processing module, an AI-based channel estimator, and a post-processing module. The pre- and post-processing components are responsible for addressing RF distortions and mitigating interference, while the AI estimator functions as the denoising mechanism. This structure supports more accurate reconstruction of the wireless channel and contributes to improved downlink performance in practical scenarios.

To bring this denoising-based framework into real-world use, we train an AI model tailored to capture the distinct properties of wireless signals. Specifically, we apply the domain insight that wireless propagation typically involves only a few dominant paths, which leads to sparse representations of the channel in the space-delay domain. This sparsity allows the AI model to concentrate on significant signal features while effectively filtering out noise and irrelevant artifacts.
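The sparsity argument can be illustrated with a small NumPy sketch. Everything in it is an assumption made for illustration, not the model or data used in the testbed: a hypothetical uniform linear array, three on-grid paths, and a plain 2-D DFT (FFT over antennas, inverse FFT over subcarriers) standing in for the transform to the space-delay domain.

```python
import numpy as np

def synth_channel(n_ant=64, n_sc=256, n_paths=3, seed=0):
    """Hypothetical multipath channel over (antenna, subcarrier); paths sit on the DFT grid."""
    rng = np.random.default_rng(seed)
    ant = np.arange(n_ant)[:, None]   # antenna index, column vector
    sc = np.arange(n_sc)[None, :]     # subcarrier index, row vector
    h = np.zeros((n_ant, n_sc), dtype=complex)
    for _ in range(n_paths):
        gain = (rng.standard_normal() + 1j * rng.standard_normal()) / np.sqrt(2)
        angle_bin = rng.integers(0, n_ant)       # spatial frequency of the path
        delay_bin = rng.integers(0, n_sc // 8)   # path delay in samples
        h += (gain
              * np.exp(2j * np.pi * angle_bin * ant / n_ant)
              * np.exp(-2j * np.pi * delay_bin * sc / n_sc))
    return h

def to_space_delay(h):
    """FFT over antennas and inverse FFT over subcarriers (both energy-preserving)."""
    return np.fft.ifft(np.fft.fft(h, axis=0, norm="ortho"), axis=1, norm="ortho")

def energy_share_top_k(x, k):
    """Fraction of total energy carried by the k strongest entries of x."""
    p = np.sort(np.abs(x).ravel() ** 2)[::-1]
    return p[:k].sum() / p.sum()

h = synth_channel()
g = to_space_delay(h)
print(f"top-3 energy share, antenna-frequency domain: {energy_share_top_k(h, 3):.4f}")
print(f"top-3 energy share, space-delay domain:       {energy_share_top_k(g, 3):.4f}")
```

In the antenna-frequency domain the energy is spread over all entries, while after the transform it collapses onto a handful of taps. White noise stays spread out under the same transform, which is why a denoiser can separate the two more easily in the sparse domain. Real channels have off-grid paths and leak into neighbouring taps, so the contrast is less extreme than in this idealized case.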

To ensure robustness across diverse channel conditions, we adopt a dual-loss training strategy: the loss function encourages precision in high-SNR regimes using normalized root mean squared error (NRMSE), while promoting stability in low-SNR cases using normalized mean squared error (NMSE). This combination balances learning across signal quality variations and prevents the model from overfitting to either extreme.
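As a rough illustration of the two metrics, the sketch below defines NMSE and NRMSE and blends them with a fixed weight. The blending rule and the weight `alpha` are hypothetical; how the two terms are actually combined or switched by SNR is not stated here.

```python
import numpy as np

def nmse(h_est, h_true):
    """Normalized mean squared error: error energy over true-channel energy."""
    err = np.sum(np.abs(h_est - h_true) ** 2)
    return err / np.sum(np.abs(h_true) ** 2)

def nrmse(h_est, h_true):
    """Normalized root mean squared error: square root of the NMSE."""
    return np.sqrt(nmse(h_est, h_true))

def dual_loss(h_est, h_true, alpha=0.5):
    """Hypothetical blend of the two terms; alpha is an illustrative weight."""
    return alpha * nrmse(h_est, h_true) + (1.0 - alpha) * nmse(h_est, h_true)

h_true = np.ones(4, dtype=complex)
h_est = 1.1 * h_true   # a 10% amplitude error on every coefficient
print(nmse(h_est, h_true), nrmse(h_est, h_true), dual_loss(h_est, h_true))
```

One way to read the pairing: since NRMSE is the square root of NMSE, it is the larger of the two whenever the normalized error is below 1, so it keeps contributing a meaningful training signal when the error is already small (typical at high SNR). NMSE dominates when the error is large (typical at low SNR).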

After training, the AI model is optimized and converted into a lightweight software module capable of executing SRS-CE in real time. This module is integrated into the vDU, where it works in tandem with the massive MIMO unit (MMU) to facilitate downlink precoding. Figure 4 outlines the key operations carried out by the vDU and MMU in the downlink chain, ranging from AI-powered CE to zero-forcing precoding for the S24 UE.
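To make the link between CE accuracy and zero-forcing precoding concrete, here is a minimal NumPy sketch. It assumes a single subcarrier, four single-antenna layers, 64 transmit antennas, and a downlink channel taken directly from the uplink estimate; the dimensions, the noise levels, and the helper names `zf_precoder` and `leakage_db` are illustrative, not part of the vDU/MMU software.

```python
import numpy as np

def zf_precoder(h_dl):
    """Zero-forcing precoder for a (n_layers, n_tx) channel, scaled to unit total power."""
    w = np.linalg.pinv(h_dl)        # right pseudo-inverse: h_dl @ w is the identity
    return w / np.linalg.norm(w)    # Frobenius-norm power normalization

def leakage_db(h_true, h_est):
    """Inter-layer leakage relative to wanted power when the precoder uses h_est."""
    eff = h_true @ zf_precoder(h_est)        # effective channel seen by the layers
    off = eff - np.diag(np.diag(eff))        # off-diagonal terms are interference
    wanted = np.sum(np.abs(np.diag(eff)) ** 2)
    return 10 * np.log10(np.sum(np.abs(off) ** 2) / wanted)

rng = np.random.default_rng(0)
n_layers, n_tx = 4, 64
shape = (n_layers, n_tx)
h_true = (rng.standard_normal(shape) + 1j * rng.standard_normal(shape)) / np.sqrt(2)
noise = (rng.standard_normal(shape) + 1j * rng.standard_normal(shape)) / np.sqrt(2)

# Two hypothetical estimation-error levels: a noisier and a cleaner channel estimate.
for sigma in (0.3, 0.03):
    print(f"estimate noise std {sigma}: leakage {leakage_db(h_true, h_true + sigma * noise):.1f} dB")
```

With a perfect estimate the effective channel is diagonal and the layers do not interfere. Any residual estimation error shows up as inter-layer leakage, which is the mechanism by which a cleaner SRS-CE can translate into higher downlink throughput.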

Figure 4. AI-SRS-CE application & DL beamforming

3-2. AI-based SRS Channel Estimation Performance Evaluation

After integrating the AI-based channel estimator into the software stack, we conduct a performance evaluation using Samsung’s commercial-grade lab testbed, as illustrated in Figure 2. The lab assessment includes both conducted testing and NMSE analysis using captured SRS signals. The testbed operates on a C-band allocation within the campus, with a bandwidth of 100 MHz for the experiments.

To assess the effectiveness of the AI-SRS-CE method, we measure NMSE performance using conducted tests based on CDL-C and CDL-D channel profiles. Using channel samples collected at the receiver, we compare the CE accuracy between link-level simulation (LLS) outcomes and real-time measurements. As shown in Figure 5, the AI-SRS-CE implemented in the testbed from Figure 2 delivers up to 12 dB of NMSE improvement in the CDL-D scenario and up to 10 dB in CDL-C. These results align closely with simulation trends, confirming the consistency of the AI model across both simulated and measured conditions.
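For readers less used to dB figures, the gains can be converted to linear ratios. The notation below is introduced only for this illustration: $\hat{\mathbf{H}}$ is the estimated channel, $\mathbf{H}$ the true channel, and $G$ the NMSE gain in dB relative to the reference estimator, assumed here to be the conventional SRS-CE.

$$
\mathrm{NMSE}_{\mathrm{dB}} = 10\log_{10}\frac{\mathbb{E}\,\lVert \hat{\mathbf{H}} - \mathbf{H} \rVert_F^2}{\mathbb{E}\,\lVert \mathbf{H} \rVert_F^2},
\qquad
\frac{\mathrm{NMSE}_{\mathrm{ref}}}{\mathrm{NMSE}_{\mathrm{AI}}} = 10^{G/10}
$$

$$
G = 10\ \mathrm{dB} \;\Rightarrow\; 10^{1.0} = 10,
\qquad
G = 12\ \mathrm{dB} \;\Rightarrow\; 10^{1.2} \approx 15.8
$$

In other words, a 10 dB gain corresponds to a tenfold reduction in normalized estimation-error power, and a 12 dB gain to a reduction of roughly sixteenfold.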

Figure 5. NMSE improvement from AI-SRS-CE (lab signal samples & comparison with LLS simulation)

4. AI-based Uplink Enhancement

Figure 6. Application of AI-SRS-CE for UL pre-combining

In addition to downlink enhancement, AI-SRS-CE can also be applied to uplink signal processing, specifically to enable more efficient uplink pre-combining at the cell site. As illustrated in Figure 6, based on the denoised SRS signal, the MMU can perform RX port adaptation and uplink pre-combining before forwarding, so that the full-port (i.e., burdensome) signal need not be sent to the vDU. This approach significantly reduces the number of active RX ports and the data volume sent over the fronthaul, which is particularly important in massive MIMO systems where high antenna counts can otherwise create substantial transport and processing overhead. By executing intelligent pre-combining near the edge, the network offloads computational burden from the vDU and enables scalable, AI-assisted uplink processing.
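The fronthaul saving can be sketched in a few lines of NumPy. The combining rule used here, projecting onto the dominant left singular vectors of the channel estimate, is an assumption chosen for illustration, as are the dimensions and the helper name `precombiner`; how the MMU actually derives its pre-combining weights is not described here.

```python
import numpy as np

def precombiner(h_est, n_out):
    """Return an (n_out, n_rx) combining matrix from an (n_rx, n_layers) channel estimate.

    Rows are the conjugated dominant left singular vectors of h_est.
    """
    n_rx, n_layers = h_est.shape
    if n_out > min(n_rx, n_layers):
        raise ValueError("n_out cannot exceed the rank bound of the channel estimate")
    u, _, _ = np.linalg.svd(h_est, full_matrices=False)
    return u[:, :n_out].conj().T

rng = np.random.default_rng(1)
n_rx, n_layers, n_sym = 64, 4, 14   # hypothetical port, layer, and symbol counts

h = (rng.standard_normal((n_rx, n_layers)) + 1j * rng.standard_normal((n_rx, n_layers))) / np.sqrt(2)
x = (rng.standard_normal((n_layers, n_sym)) + 1j * rng.standard_normal((n_layers, n_sym))) / np.sqrt(2)

y_full = h @ x                       # what all 64 RX ports observe (noise omitted)
w = precombiner(h, n_out=n_layers)
y_small = w @ y_full                 # what would be sent over the fronthaul

reduction = y_full.size / y_small.size
print(f"fronthaul samples: {y_full.size} -> {y_small.size} ({reduction:.0f}x fewer)")
print(f"signal energy kept: {np.linalg.norm(y_small) ** 2 / np.linalg.norm(y_full) ** 2:.6f}")
```

In this noiseless toy case the combined streams keep all of the signal energy while carrying a small fraction of the samples. In practice the quality of the combining weights depends on the channel estimate, which is where a denoised SRS-CE would help.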

5. AI Channel Estimation to Real World

To assess the real-world effectiveness of AI-SRS-CE, we extend our evaluation beyond controlled lab environments and perform outdoor OTA field measurements using Samsung’s commercial-grade testbed. OTA measurements offer a more direct reflection of system behavior and facilitate the continuous refinement of the AI model through multiple test trials. Since SRS-CE directly impacts downlink beamforming quality, its accuracy plays a vital role in determining user throughput. Thus far, AI-SRS-CE has outperformed traditional SRS-CE under both line-of-sight (LoS) and non-line-of-sight (NLoS) channel conditions, with a particularly substantial 23% increase in throughput under NLoS conditions in low-SNR regions.

6. Conclusions

To move toward AI-native RAN, real deployment and validation of AI features in the network are essential, not just simulations or isolated demos. This blog explored one such effort: applying AI to real-time CE at the cell site. Through the development and testing of AI-SRS-CE, we showed measurable gains in both lab and field settings, confirming that AI can bring practical benefits even at the physical layer. It is a small but concrete step in making AI-native RAN a reality. In addition to downlink processing, AI-based uplink enhancements such as pre-combining have also been explored. We expect further validation through over-the-air tests to help accelerate their integration into real-world deployments, bringing the AI-native RAN vision closer to commercialization.

References

[1] Y. Li, Y. Hu, K. Min, H. Park, H. Yang, T. Wang, J. Sung, J.-Y. Seol, and C. J. Zhang, “Artificial intelligence augmentation for channel state information in 5G and 6G,” IEEE Wireless Communications, vol. 30, no. 1, pp. 104-110, Feb. 2023.

[2] M. Soltani, V. Pourahmadi, A. Mirzaei, and H. Sheikhzadeh, “Deep learning-based channel estimation,” IEEE Commun. Lett., vol. 23, no. 4, pp. 652-655, Apr. 2019.

[3] Samsung Networks YouTube, “Unleash the full power of AI and automation with Samsung's AI-powered Network,” MWC 2025. https://www.youtube.com/watch?v=_To_XytB6nw

[4] L. Li, H. Chen, H.-H. Chang, and L. Liu, “Deep residual learning meets OFDM channel estimation,” IEEE Wireless Commun. Lett., vol. 9, no. 5, pp. 615-618, May 2020.