
5G Media Standards: Ultimate Communication beyond Media Streaming

Moving towards Immersive Media

The high data speed and extremely low-delay connection of 5G enable various interactive immersive media services, and this hyper-realistic media is one of the killer services of 5G. Immersive media, including AR (Augmented Reality) and MR/XR (Mixed Reality/eXtended Reality), provides a truly unique experience to consumers in both their lifestyle and their media consumption behaviour. From now on, immersive media will be augmented into our real environments, and new device types such as AR glasses will be at the forefront of future media consumption.

The three major QoE parameters of XR services are Immersion, Presence and Interaction delay. Immersion can be expressed through resolution, such as 4K or 8K, and through 3D backgrounds and objects that imitate real environments. Presence is measured using MTP (Motion-to-Photon) time, defined as the time between the user’s head movement and the corresponding update of the display output on an HMD (head-mounted display). If the MTP time is too long, the mismatch between the user's movement and what the user perceives feels unnatural and can cause discomfort similar to VR sickness. Interaction delay is the time between a user’s action and the result being reflected in the content; for example, if the user moves an apple, the user sees the apple rolling only after the Interaction delay has elapsed.

During the last century, the main theme of media was Immersion, reflected in the consumption of higher-resolution media on bigger screens. In the last decade, there has been much active research on Presence and Interaction delay for XR, both from a media processing perspective and in the hardware development of VR HMDs and AR glasses. 5G makes it possible to deliver on these two parameters: the MTP time requirement is less than 20 ms in the case of VR (and roughly the same for AR), and 5G is the only infrastructure that can satisfy such a value while offering wide coverage.
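To make the MTP requirement concrete, the following is a minimal sketch of a latency budget check. Only the 20 ms limit comes from the text above; the stage names and their millisecond values are illustrative assumptions, not measurements from any device or network.

```python
from dataclasses import dataclass
from typing import List

# VR motion-to-photon requirement quoted in the text: less than 20 ms.
MTP_BUDGET_MS = 20.0


@dataclass
class StageLatency:
    """One stage of a hypothetical motion-to-photon pipeline."""
    name: str
    millis: float


def motion_to_photon_ms(stages: List[StageLatency]) -> float:
    """Total MTP time is the sum of the latencies of all pipeline stages."""
    return sum(stage.millis for stage in stages)


def within_mtp_budget(stages: List[StageLatency],
                      budget_ms: float = MTP_BUDGET_MS) -> bool:
    """The requirement is strict: the total must be less than the budget."""
    return motion_to_photon_ms(stages) < budget_ms


# Assumed values for an on-device pipeline (illustrative only).
on_device = [
    StageLatency("sensor sampling", 2.0),
    StageLatency("pose estimation", 3.0),
    StageLatency("rendering", 8.0),
    StageLatency("display scan-out", 5.0),
]

# The same pipeline with an assumed 10 ms network round trip added.
with_network = on_device + [StageLatency("network round trip", 10.0)]

print(motion_to_photon_ms(on_device), within_mtp_budget(on_device))
print(motion_to_photon_ms(with_network), within_mtp_budget(with_network))
```

With these assumed numbers, the on-device pipeline fits the budget and the variant with a 10 ms round trip does not, which illustrates why any network contribution to the loop has to be very small.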

Evolution of 3GPP Standards for Mobile Media

To satisfy these requirements, 3GPP SA4, the media service working group of 3GPP, is establishing a roadmap for XR, which will be the main theme of Rel-18.

5G media standardization status in 3GPP SA4

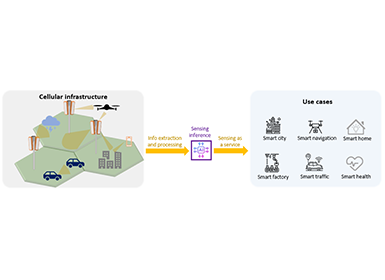

To better follow the trends of mobile media, it is helpful to understand the recent history of 3GPP SA4 and its plans for XR in the upcoming Rel-18. The figure above presents the major work items of SA4 from LTE through 5G to date. After 5G NR (New Radio), SA4 defined a 5G streaming specification, derived from Rel-15, that integrates the 5G architecture. In parallel, SA4 started to define XR by collecting use cases and identifying requirements. During Rel-17, in 2020, a new study on an AR/MR framework was initiated and led by Samsung. This work will continue in Rel-18 through related normative work, such as AR/MR call and streaming standardization. In addition, to deliver the three major QoE parameters of XR services introduced above through the network, MEC (Mobile Edge Computing) will be essential for low delay and fast, intensive media processing; it will also be useful for split rendering, given the relatively limited power of user devices. SA4 has therefore also started to consider architecture enhancements to support MEC. The figure below shows the end-to-end architecture of AR/MR services using MEC.

General end-to-end architecture of AR/MR services

Leading 3GPP Immersive Media Standards

Samsung has been leading the relevant 3GPP standards to realize this vision of future technologies and has held the vice-chair position in 3GPP SA4 since August 2021. In particular, in June 2020, Samsung initiated a new feasibility study in 3GPP SA4 Release-17 for 5G Glass-type AR/MR Devices, named FS_5GSTAR. As the rapporteur of this study, we have been collaborating closely with many industry players and have identified the key potential features for these new glass-type devices, including:

- Key use cases and service scenarios
- Device reference architectures and service procedures
- KPIs and QoS/QoE factors

The AR/MR device contains various functionalities to support a variety of different AR/MR experiences, and the study identifies some common functional entities as shown in the figure below.

Common functional architecture within 5G AR/MR device

The AR Runtime provides a set of functions that interface with a platform to perform commonly required operations, such as accessing controller/peripheral states, getting current and/or predicted tracking positions, and submitting rendered frames. The Scene Manager contains a set of functions that supports the application in arranging the logical and spatial representation of a multisensorial scene based on support from the AR Runtime. The Media Access Functions enable access to media data to be communicated through the 5G system. In addition, the AR/MR device provides a set of peripherals to support physical connections to the environment and a software application.
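The three AR Runtime operations named above (reading controller state, getting a predicted tracking position, and submitting a rendered frame) can be sketched as one pass of a render loop. This is a toy illustration only: `FakeARRuntime`, `Pose`, `Frame`, `render_one_frame` and the linear head-motion model are hypothetical names and assumptions, not interfaces defined by 3GPP TR 26.998 or by any real runtime.

```python
from dataclasses import dataclass, field
from typing import Dict, List, Tuple


@dataclass
class Pose:
    position: Tuple[float, float, float]             # metres
    orientation: Tuple[float, float, float, float]   # quaternion (x, y, z, w)


@dataclass
class Frame:
    pose: Pose     # pose the frame was rendered for
    pixels: bytes  # placeholder for the rendered image


@dataclass
class FakeARRuntime:
    """Hypothetical in-memory stand-in for an AR Runtime."""
    submitted: List[Frame] = field(default_factory=list)

    def get_controller_state(self) -> Dict[str, bool]:
        # Access controller/peripheral state (fixed value in this toy).
        return {"trigger_pressed": False}

    def get_predicted_pose(self, display_time_ms: float) -> Pose:
        # Toy prediction: the head drifts along x at 0.001 m per ms.
        x = 0.001 * display_time_ms
        return Pose((x, 0.0, 0.0), (0.0, 0.0, 0.0, 1.0))

    def submit_frame(self, frame: Frame) -> None:
        # Hand the rendered frame back to the runtime for display.
        self.submitted.append(frame)


def render_one_frame(runtime: FakeARRuntime, display_time_ms: float) -> Frame:
    """One pass: read inputs, get the predicted pose, render, submit."""
    state = runtime.get_controller_state()
    pose = runtime.get_predicted_pose(display_time_ms)
    # Stand-in for rendering: four bytes whose value depends on the input.
    pixels = (b"\xff" if state["trigger_pressed"] else b"\x00") * 4
    frame = Frame(pose, pixels)
    runtime.submit_frame(frame)
    return frame


runtime = FakeARRuntime()
frame = render_one_frame(runtime, display_time_ms=16.0)
print(len(runtime.submitted), frame.pose.position)
```

Rendering against a pose predicted for the display time, rather than the pose at the moment of sampling, is one common way to keep the perceived motion-to-photon time low.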

As an outcome of FS_5GSTAR, the technical report 3GPP TR 26.998 is expected to be published in November 2021. It also provides recommendations for potential normative work on Release-18 standards in 3GPP, including immersive AR/MR media codecs and profiles, integration into the 5G media streaming architecture, and relevant interfaces with the 5G edge. This work is expected to trigger a series of Release-18 technical standards for integrating glass-type AR/MR devices into 5G networks.

Beyond Immersive Experiences: 6G Media Standards

The keyword for 6G media standards is likely to be “Immersive Communication”.

The whole world has been brought to a standstill by COVID-19. From meeting friends and family, to work meetings with colleagues, to joining yoga classes, everyone has had to meet through online communication applications like Zoom. Many of us do not feel that this kind of video conferencing is “real” communication. One of the main features of 6G media would be the “Kingsman’s meeting room”. Through a pair of AR glasses, we can see people sitting around the table and talking to each other. Their faces look realistic, and their reactions and gestures can be seen in real time. The AR object representing each person in the meeting is the same size as the actual person, and it is seamlessly augmented into the meeting room, chairs and table. Other participants can also see and talk to each other with a similar experience. Unlike in a Zoom meeting, we won’t experience the eye fatigue of looking at and focusing on every person individually, one by one.

After Rel-18, on the way towards 6G, we can expect the Immersive media specifications in SA4, such as those for AR call, streaming, formats and MEC, to be extended. For better media quality, AI/ML can be used in a network-assisted manner, and it will also provide ambience effects for Immersive media services. Furthermore, new experiences can be expected from holograms, a new media format providing free-space immersive experiences in 6G. Such immersive environments can bring our experience close to that of a real face-to-face meeting, and this is what we call “Immersive Communication”.

We look forward to Immersive Communication being enabled in 6G, allowing people to feel that they are together, that they are in the place they want to be, and that they are really meeting the people they want to meet, even during this global pandemic, since that is the ultimate role of "Communication".