
Network GDT: GenAI-Based Digital Twin for Automated Network Performance Evaluation

1. Introduction

Telecommunication networks are evolving rapidly, moving beyond 5G (B5G) into a world of hyper-connectivity, intelligent automation, and immersive services. With billions of connected devices and surging data traffic, operators must constantly introduce new AI/ML-based features to keep networks stable and efficient.

But here lies the challenge: how can new features be evaluated reliably before they are deployed in live networks? Traditional methods rely heavily on manual testing or direct field trials. These approaches are slow, costly, and risky: they can cause service disruptions, SLA violations, and rising operational expenses.

This is where Network GDT (Generative AI-based Digital Twin) comes in: an automated, scalable evaluation platform that combines Generative AI with Digital Twin technology to validate AI/ML solutions for future networks before they reach live deployment.

2. Problem Statement

Traditional performance evaluation methods in telecom face several bottlenecks:

3. Motivation

As the industry shifts to B5G, the need for intelligent automation has never been greater. The O-RAN Alliance has already paved the way by embedding AI/ML into RAN operations. However, operators still lack a scalable, automated platform that can:

This motivated the development of Network GDT, a next-generation framework that unites Generative AI with dynamic digital twins for proactive performance evaluation.

4. Proposed Solution: Network GDT

To solve these challenges, we propose Network GDT, a platform that integrates conditional Generative Adversarial Networks (cGANs) with a novel Digital Twin Augmenting Condition (DTAC) mechanism.
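The article does not give the cGAN architecture or describe how DTAC builds its conditions, so the sketch below only illustrates the generic conditional-GAN idea under stated assumptions: both the generator G(z | c) and the discriminator D(x | c) receive the condition vector c concatenated to their normal inputs. The layer sizes, the one-hot condition (a placeholder standing in for whatever DTAC supplies), and the interpretation of outputs as KPI vectors are all hypothetical. Only the forward pass and the standard loss values are shown; there is no optimizer or training loop.

```python
import numpy as np

rng = np.random.default_rng(0)

# All sizes below are illustrative assumptions, not values from Network GDT.
NOISE_DIM = 8   # latent noise z
COND_DIM = 3    # condition vector c (placeholder for a DTAC-style condition)
DATA_DIM = 4    # KPIs per generated sample
HIDDEN = 16


def init_layer(n_in, n_out):
    """Small random weights and zero bias for one dense layer."""
    return rng.normal(0.0, 0.1, size=(n_in, n_out)), np.zeros(n_out)


def dense(x, layer):
    w, b = layer
    return x @ w + b


# Generator G(z | c) sees [z, c]; discriminator D(x | c) sees [x, c].
G1, G2 = init_layer(NOISE_DIM + COND_DIM, HIDDEN), init_layer(HIDDEN, DATA_DIM)
D1, D2 = init_layer(DATA_DIM + COND_DIM, HIDDEN), init_layer(HIDDEN, 1)


def generator(z, c):
    h = np.maximum(0.0, dense(np.concatenate([z, c], axis=1), G1))  # ReLU
    return dense(h, G2)  # synthetic KPI vector, linear output


def discriminator(x, c):
    h = np.maximum(0.0, dense(np.concatenate([x, c], axis=1), D1))  # ReLU
    return 1.0 / (1.0 + np.exp(-dense(h, D2)))  # P(x is real | c)


def cgan_losses(x_real, c, eps=1e-8):
    """Discriminator loss and non-saturating generator loss for one batch."""
    z = rng.normal(size=(x_real.shape[0], NOISE_DIM))
    x_fake = generator(z, c)
    d_real, d_fake = discriminator(x_real, c), discriminator(x_fake, c)
    d_loss = -np.mean(np.log(d_real + eps) + np.log(1.0 - d_fake + eps))
    g_loss = -np.mean(np.log(d_fake + eps))
    return d_loss, g_loss


if __name__ == "__main__":
    batch = 5
    c = np.eye(COND_DIM)[rng.integers(0, COND_DIM, size=batch)]  # one-hot conditions
    x_real = rng.normal(size=(batch, DATA_DIM))  # stand-in for real KPI samples
    print(generator(rng.normal(size=(batch, NOISE_DIM)), c).shape)  # (5, 4)
    print(cgan_losses(x_real, c))
```

Because the condition is part of both inputs, the discriminator judges whether a sample is realistic *for that condition*, which pushes the generator to produce condition-specific data rather than generic samples.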

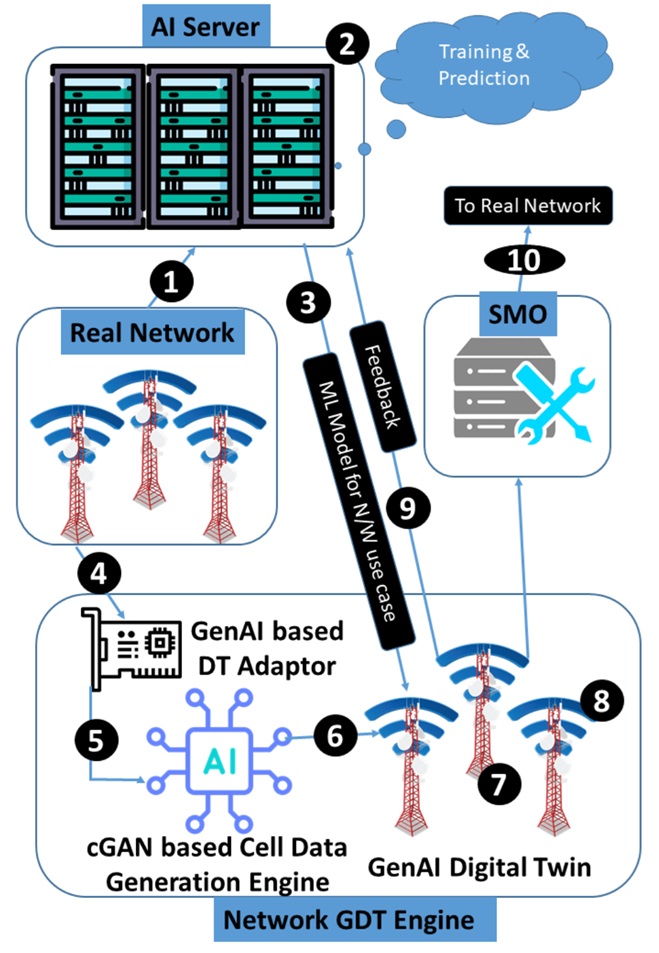

Figure 1. System model for evaluating AI models using Network GDT. The figure illustrates the stages of the Network GDT solution.

A. System Model

The platform works as a bridge between AI model development and real-world deployment.

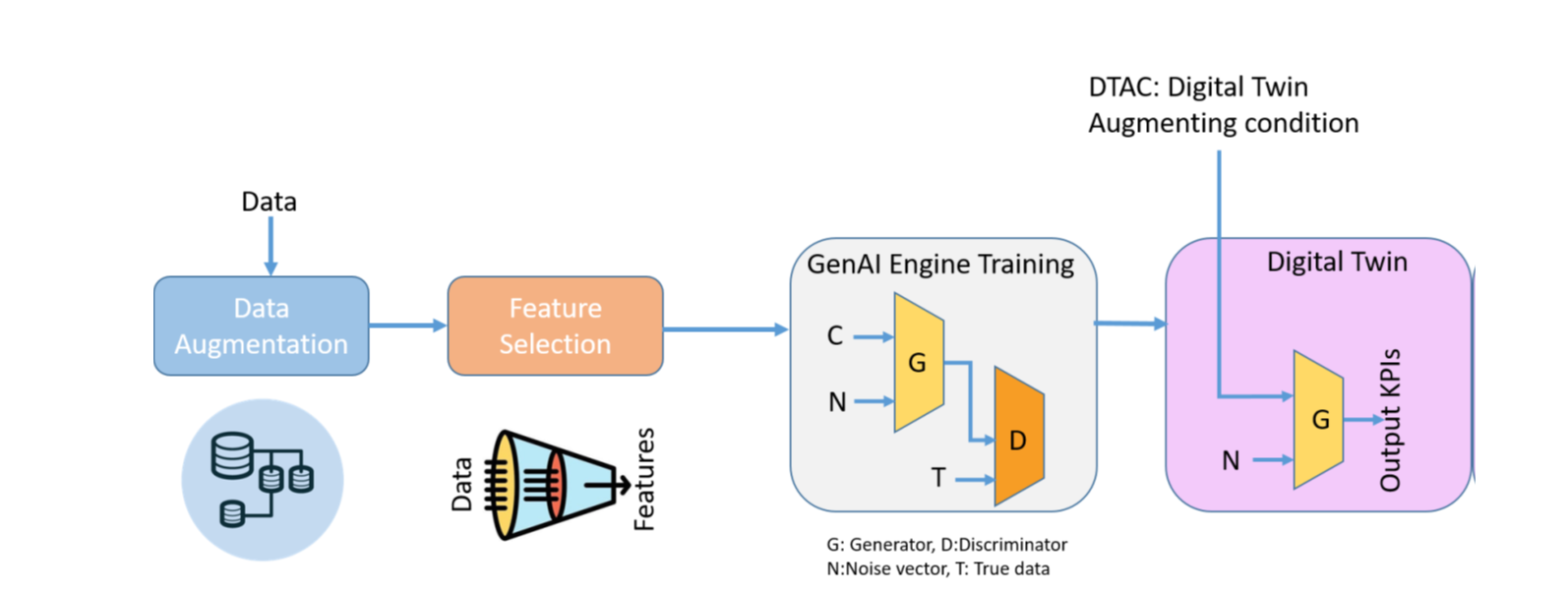

B. GenAI-Powered Digital Twin

The cGAN engine lies at the heart of the system:

This ensures adaptability, so the digital twin can remain relevant as networks evolve without starting training from scratch.

C. Training Workflow

Figure 2. Network GDT block diagram. The diagram outlines the key components of the Network GDT process: data augmentation, feature selection, GenAI training, and the final generation of the dynamic digital twin (GDT).
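The first two stages of the workflow, data augmentation and feature selection, can be sketched as follows. The article does not say which techniques Network GDT uses, so this is a minimal sketch that swaps in two common choices: jitter augmentation (adding Gaussian noise scaled to each KPI's spread) and a correlation filter that keeps the k features most correlated with a target KPI. The function names, KPI names, and synthetic data are hypothetical; the GenAI training and twin-generation stages are omitted.

```python
import numpy as np


def jitter_augment(data, n_copies=2, noise_frac=0.01, seed=0):
    """Append n_copies noisy copies of a (samples, columns) KPI table.

    Noise is Gaussian with std = noise_frac * per-column std, so each KPI is
    perturbed in proportion to its own spread. The original rows come first.
    """
    rng = np.random.default_rng(seed)
    col_std = data.std(axis=0)
    copies = [data]
    for _ in range(n_copies):
        copies.append(data + rng.normal(size=data.shape) * noise_frac * col_std)
    return np.vstack(copies)


def select_by_correlation(x, y, names, k):
    """Keep the k columns of x with the largest |Pearson correlation| to y."""
    scores = []
    for j in range(x.shape[1]):
        col = x[:, j]
        # A constant column has undefined correlation; treat it as uninformative.
        scores.append(0.0 if col.std() == 0 else abs(np.corrcoef(col, y)[0, 1]))
    top = np.argsort(scores)[::-1][:k]
    return x[:, top], [names[i] for i in top]


if __name__ == "__main__":
    rng = np.random.default_rng(1)
    n = 200
    prb = rng.uniform(0, 100, n)              # hypothetical PRB utilisation (%)
    users = 0.5 * prb + rng.normal(0, 2, n)   # correlated with PRB load
    rsrp = rng.normal(-90, 5, n)              # independent of throughput here
    thr = 2.0 * prb + rng.normal(0, 5, n)     # target KPI: throughput
    table = np.column_stack([prb, users, rsrp, thr])
    aug = jitter_augment(table)
    x_sel, kept = select_by_correlation(aug[:, :3], aug[:, 3],
                                        ["prb_util", "active_users", "rsrp"], k=2)
    print(aug.shape, x_sel.shape, sorted(kept))
```

A correlation filter is cheap and easy to audit, but it only captures linear, one-feature-at-a-time relationships; a production pipeline might prefer model-based or mutual-information selection.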

D. Dual-Phase Evaluation Strategy

Network GDT ensures safe and reliable deployment through two phases:

This strategy minimizes downtime, optimizes performance, and reduces risks.

5. Experimental Setup

Dataset:

Training:

6. Results

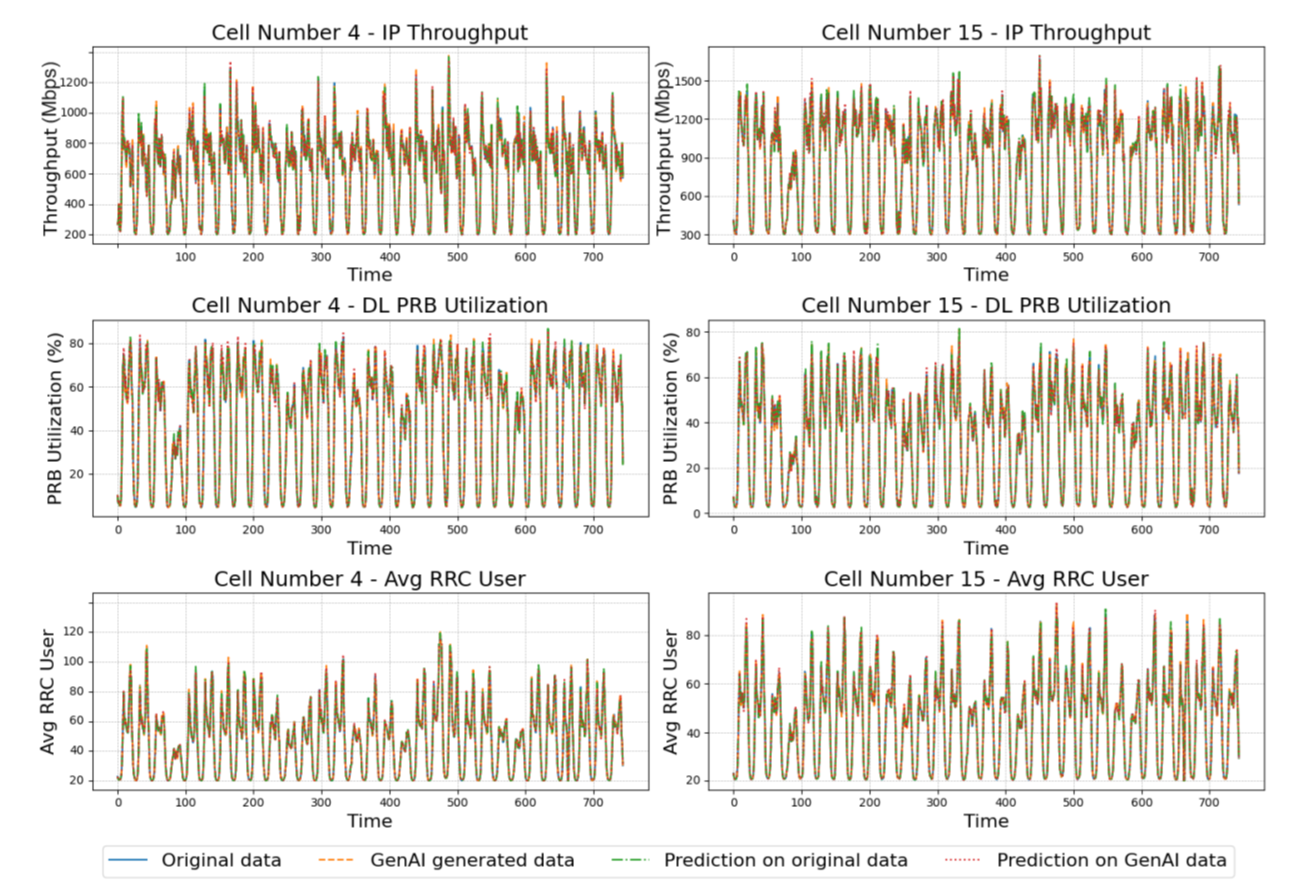

Figure 3. Comparison of Original Data, GenAI Engine Generated Data, Predictions on Original Data, and Predictions on GenAI Engine Generated Data

The predictions on GDT-generated data closely track those on the original data, which suggests that evaluating AI models on GDT-generated data is a reliable proxy for their behaviour in real deployments.
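The article does not state which metric underlies the comparison in Figure 3. One simple way to quantify it, shown here as a sketch under that assumption, is to compute a model's mean absolute error (MAE) on the original data and on the GDT-generated data and check that the relative gap stays below a tolerance. The function names, the `tolerance` value, and the toy numbers are hypothetical.

```python
from statistics import mean


def mae(y_true, y_pred):
    """Mean absolute error between two equal-length, non-empty sequences."""
    if not y_true or len(y_true) != len(y_pred):
        raise ValueError("inputs must be non-empty and of equal length")
    return mean(abs(t - p) for t, p in zip(y_true, y_pred))


def fidelity_report(y_orig, pred_orig, y_gen, pred_gen, tolerance=0.1):
    """Compare a model's MAE on original data with its MAE on GDT-generated data.

    `tolerance` is a hypothetical acceptance threshold on the relative MAE gap;
    the article does not specify a metric or threshold.
    """
    mae_orig, mae_gen = mae(y_orig, pred_orig), mae(y_gen, pred_gen)
    if mae_orig == 0:
        rel_gap = 0.0 if mae_gen == 0 else float("inf")
    else:
        rel_gap = abs(mae_gen - mae_orig) / mae_orig
    return {
        "mae_original": mae_orig,
        "mae_generated": mae_gen,
        "relative_gap": rel_gap,
        "consistent": rel_gap <= tolerance,
    }


if __name__ == "__main__":
    # Toy throughput values (Mbps) and model predictions, invented for illustration.
    report = fidelity_report(
        y_orig=[10, 12, 14, 13], pred_orig=[11, 12, 13, 13],
        y_gen=[9, 12, 15, 14], pred_gen=[10, 12, 14, 14],
    )
    print(report["consistent"])  # True
```

A relative gap is used rather than an absolute one so the same tolerance can be applied to KPIs with very different scales.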

7. Conclusion

The proposed Network GDT platform transforms how telecom operators evaluate new features. By combining Generative AI with Digital Twins, it delivers:

As B5G networks expand, solutions like Network GDT will be key to enabling fast, safe, and intelligent innovation in telecom, making networks smarter, safer, and more efficient before new features ever go live.
