
From Cradle to Cane: A Two-Pass Framework for High-Fidelity Lifespan Face Aging

1 Introduction

Existing face aging methods struggle with an inherent Age-ID trade-off: they prioritize either realistic aging transformations at the cost of identity preservation or vice versa, particularly across large age spans. This imbalance stems from unified frameworks that attempt to model the entire lifespan. To address this limitation, we propose Cradle2Cane, a two-stage diffusion framework built upon the fast-inference SDXL-Turbo model. The first stage targets precise age control via textual prompts describing age and gender attributes and employs an Adaptive Noise Injection (AdaNI) mechanism. AdaNI scales the injected noise with the magnitude of the target age transformation, favoring identity retention for small changes while enabling stronger edits for large age gaps. The second stage explicitly reinforces identity consistency. It conditions the model on dual identity-aware embeddings (IDEmb), namely SVR-ArcFace and Rotate-CLIP, applied to a minimally perturbed version of the first stage's output, preserving the achieved aging characteristics while enhancing identity fidelity. Both stages are jointly trained end-to-end. Comprehensive evaluations on CelebA-HQ using the Face++ and Qwen-VL protocols demonstrate that Cradle2Cane achieves a better balance in the Age-ID trade-off than diverse GAN and diffusion baselines, while maintaining inference speeds comparable to GANs. Furthermore, by leveraging the generative capacity of text-to-image diffusion, Cradle2Cane performs better on challenging in-the-wild images, broadening its practical applicability.

2 Method

2.1 Overall Architecture

To address the challenge of controllable and identity-preserving face aging, we propose a two-pass framework, Cradle2Cane, built upon the efficient SDXL-Turbo model. In the first pass, we apply adaptive noise injection (AdaNI) to the input face image $x_a$ and, guided by an age-specific embedding, generate an intermediate image $\widehat{x_b}$ that reflects the target age $b$. This step aims to synthesize realistic aging effects while maintaining essential identity traits. However, for large age gaps, $\widehat{x_b}$ may exhibit partial identity drift due to the strong age transformation. To compensate, the second pass focuses on identity consistency: a lower magnitude of noise is injected into $\widehat{x_b}$, and identity-aware embedding (IDEmb) conditioning is applied using features extracted from the original input image $x_a$. This yields the final output face image $x_b$, which exhibits both faithful aging effects and high identity preservation.

Figure 1. Our method Cradle2Cane consists of two passes: the first pass employs adaptive noise injection (AdaNI) to enhance age accuracy, while the second pass incorporates identity-aware embeddings (IDEmb), including SVR-ArcFace and Rotate-CLIP embeddings, to improve identity consistency. When training the MLPs and UNet-LoRA modules, we jointly optimize an identity loss between the source and target face images, as well as age and quality losses on the target images.
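To make the data flow of the two passes concrete, the following is a minimal Python sketch under stated assumptions. The `Components` bundle, `add_noise`, the variance-preserving noising with $\bar{\alpha} = 1 - s$, and every callable in it are hypothetical stand-ins, not the authors' code or the SDXL-Turbo API; the dummy components at the end only exercise the control flow.

```python
from dataclasses import dataclass
from typing import Callable

import numpy as np

Array = np.ndarray


def add_noise(latent: Array, strength: float, rng: np.random.Generator) -> Array:
    # Assumed variance-preserving forward process with alpha_bar = 1 - strength:
    # strength 0 leaves the latent untouched, strength 1 replaces it with pure noise.
    eps = rng.standard_normal(latent.shape)
    return np.sqrt(1.0 - strength) * latent + np.sqrt(strength) * eps


@dataclass
class Components:
    # Hypothetical stand-ins for the SDXL-Turbo parts; these names are not from the paper.
    encode: Callable[[Array], Array]                 # VAE encoder: image -> latent
    decode: Callable[[Array], Array]                 # VAE decoder: latent -> image
    denoise: Callable[[Array, float, Array], Array]  # few-step UNet: (noisy latent, strength, condition) -> latent
    age_embed: Callable[[int], Array]                # target-age prompt -> text embedding
    id_embed: Callable[[Array, int, int], Array]     # (source image, a, b) -> IDEmb


def two_pass_aging(x_a: Array, a: int, b: int, m: Components,
                   strength_for_gap: Callable[[int], float],
                   refine_strength: float, rng: np.random.Generator):
    # Pass 1 (AdaNI): noise strength depends on the age gap |b - a|,
    # and denoising is conditioned on the target-age embedding.
    s1 = strength_for_gap(abs(b - a))
    x_b_hat = m.decode(m.denoise(add_noise(m.encode(x_a), s1, rng), s1, m.age_embed(b)))

    # Pass 2 (IDEmb): weaker noise on the intermediate image, conditioned on
    # identity features extracted from the source image x_a.
    x_b = m.decode(m.denoise(add_noise(m.encode(x_b_hat), refine_strength, rng),
                             refine_strength, m.id_embed(x_a, a, b)))
    return x_b_hat, x_b


# Dummy components that only exercise the control flow.
dummy = Components(
    encode=lambda img: img,
    decode=lambda z: z,
    denoise=lambda z, s, cond: z,
    age_embed=lambda age: np.full(4, float(age)),
    id_embed=lambda img, a, b: img.mean(axis=0),
)
rng = np.random.default_rng(0)
x_b_hat, x_b = two_pass_aging(np.zeros((4, 4)), 25, 70, dummy,
                              strength_for_gap=lambda gap: 0.7 if gap > 20 else 0.4,
                              refine_strength=0.2, rng=rng)
print(x_b_hat.shape, x_b.shape)
```

Passing `strength_for_gap` as a function keeps the AdaNI schedule separate from the pipeline. Pass 2 here conditions only on IDEmb, which is a simplification: the text does not say whether the age prompt is also retained in the second pass.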

2.2 1st Pass: Adaptive Noise Injection (AdaNI) for Age Accuracy

To address the Age-ID trade-off in face aging, we first observe empirically that the magnitude of facial modification correlates with the size of the age gap: large shifts require pronounced structural changes, whereas small ones need only minor adjustments. Building on prior evidence that the amount of injected noise controls editing flexibility, we systematically analyzed three noise levels ($z_1$: low, $z_2$: medium, $z_3$: high) on 100 face images under age shifts from 1 to 60 years. Low noise ($z_1$) enhanced identity preservation but failed to reach the target age for large gaps, while high noise ($z_3$) improved age realism but compromised identity fidelity, confirming a clear trade-off governed by noise intensity. Motivated by this, we propose an adaptive noise injection (AdaNI) strategy that modulates the noise level according to the target age shift. Specifically, we encode predefined age prompts with the CLIP text encoder for cross-attention conditioning and categorize age shifts into three tiers, with boundaries at 5 and 20 years derived from the quantitative analysis: during the initial noise injection we assign $z_1$ to shifts of at most 5 years, $z_2$ to shifts of 6 to 20 years, and $z_3$ to shifts of more than 20 years. After the diffusion model produces the denoised latent code, VAE decoding reconstructs an intermediate aged face with high age accuracy but residual identity degradation, particularly for large age transitions. AdaNI thus balances age realism and identity preservation, but a supplementary identity-refinement step remains necessary for full consistency.
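The tier rule above can be written as a small lookup. The sketch below assumes integer ages, a 4-channel 64×64 latent, and hypothetical noise strengths for $z_1$, $z_2$, $z_3$ (the text does not report their values), with variance-preserving noising as an assumed parameterization; it is illustrative, not the paper's implementation.

```python
import numpy as np

# Hypothetical strengths for the three tiers; the text does not report the actual values.
TIER_STRENGTH = {"z1": 0.3, "z2": 0.5, "z3": 0.7}


def noise_tier(source_age: int, target_age: int) -> str:
    """Map the age shift to a noise tier: <=5 years -> z1, 6-20 -> z2, >20 -> z3."""
    gap = abs(target_age - source_age)
    if gap <= 5:
        return "z1"
    if gap <= 20:
        return "z2"
    return "z3"


def adani_inject(latent: np.ndarray, source_age: int, target_age: int,
                 rng: np.random.Generator) -> tuple[np.ndarray, float]:
    """Noise the input latent with the tier-dependent strength (assumed VP schedule)."""
    s = TIER_STRENGTH[noise_tier(source_age, target_age)]
    eps = rng.standard_normal(latent.shape)
    return np.sqrt(1.0 - s) * latent + np.sqrt(s) * eps, s


print([noise_tier(30, b) for b in (33, 45, 80)])  # ['z1', 'z2', 'z3']
noisy, s = adani_inject(np.zeros((4, 64, 64)), 30, 80, np.random.default_rng(0))
print(noisy.shape, s)  # (4, 64, 64) 0.7
```

Using `abs` makes the rule symmetric, so rejuvenation (target younger than source) falls into the same tiers as aging by the same number of years.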

2.3 2nd Pass: Identity-Aware Embedding (IDEmb) for Identity Preservation

To further improve identity preservation, we extract identity-aware embeddings (IDEmb) from the source face $x_a$ using both ArcFace and CLIP encoders, which are standard feature extractors for measuring and guiding identity. A central challenge is the inherent entanglement of age and identity within these embeddings: both ArcFace and CLIP features tend to encode age-related cues alongside identity information. To overcome this limitation, we propose two embedding modules, SVR-ArcFace and Rotate-CLIP, which explicitly suppress age-related components within their respective embedding spaces and thereby disentangle identity from age.

2.3.1 SVR-ArcFace

Given a source face image $x_a$, we generate a set of $n$ aged face images $\{x_b^i\}_{i=1}^{n}$ by injecting different noise levels in the first stage. These images share the identity of $x_a$ but exhibit different age characteristics. Prior works suggest that applying Singular Value Decomposition (SVD) followed by singular value reweighting (SVR) can enhance shared features while suppressing divergent ones, such as age. Inspired by this, we propose a singular value reweighting technique to refine identity features from the ArcFace embeddings. We refer to this method as SVR-ArcFace.
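The section does not specify the reweighting function, so the following NumPy sketch is only one plausible instance of SVR under our own assumptions: the $n$ ArcFace embeddings (assumed 512-dimensional) are stacked as rows, the top `keep` singular values are scaled by `boost` and the rest are damped by `damp`, and the reweighted rows are averaged into a single unit-norm identity vector $\widehat{u_a}$. The function name, parameters, and defaults are hypothetical.

```python
import numpy as np


def svr_identity(embeddings: np.ndarray, keep: int = 1,
                 boost: float = 1.0, damp: float = 0.1) -> np.ndarray:
    """Fuse n ArcFace embeddings (rows) of one person at different ages into one identity vector.

    Hypothetical SVR: singular values of the top `keep` components are scaled by `boost`,
    the remaining ones by `damp`, then the reweighted rows are averaged.
    """
    E = embeddings / np.linalg.norm(embeddings, axis=1, keepdims=True)  # L2-normalize each row
    U, S, Vt = np.linalg.svd(E, full_matrices=False)                   # E = U @ diag(S) @ Vt
    weights = np.where(np.arange(S.size) < keep, boost, damp)
    E_rw = (U * (S * weights)) @ Vt                                     # U @ diag(S * weights) @ Vt
    u = E_rw.mean(axis=0)
    return u / np.linalg.norm(u)


# Synthetic check: a shared "identity" direction plus small per-image variation.
rng = np.random.default_rng(0)
identity = np.zeros(512)
identity[0] = 1.0
aged = identity + 0.02 * rng.standard_normal((5, 512))
u_hat = svr_identity(aged)
print(float(u_hat @ identity))  # cosine similarity to the shared direction
```

The dominant singular direction captures what all rows have in common, while the smaller singular values correspond to directions along which the rows differ (for example, age-specific changes); damping them is what "suppressing divergent features" amounts to in this sketch.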

2.3.2 Rotate-CLIP

Given a source face image $x_a$ with source age $a$ and target age $b$, we extract the CLIP image embedding $i_a = I_{CLIP}(x_a)$, along with the text embeddings $t_a = T_{CLIP}(a)$ and $t_b = T_{CLIP}(b)$, using the pretrained CLIP image encoder $I_{CLIP}(\cdot)$ and text encoder $T_{CLIP}(\cdot)$. Our goal is to shift the age-related component of $i_a$ toward the target age domain in CLIP space, leveraging CLIP's joint visual-textual alignment. A common approach is to compute the age shift vector as the difference of the text embeddings:

$\Delta = t_b - t_a$

However, this simple subtraction may introduce semantic inconsistencies due to CLIP’s coarse age representations. To address this, we propose a rotational projection using spherical linear interpolation (slerp), which more smoothly captures semantic transitions between ages:

$\Delta^* = \mathrm{slerp}(t_b, t_a, \lambda)$

where $\lambda \in [0, 1]$ controls the interpolation. The Rotate-CLIP embedding is then defined as:

$\widehat{i_a} = i_a + \Delta^*$

which shifts $i_a$ toward the target age direction while preserving other identity-related information. The refined identity embeddings $\widehat{u_a}$ and $\widehat{i_a}$, obtained from SVR-ArcFace and Rotate-CLIP respectively, are projected through two MLPs to match the text-embedding feature dimension and then concatenated to form IDEmb before being injected into the cross-attention module of SDXL-Turbo:

$\widetilde{u_a} = \mathrm{MLP}_u(\widehat{u_a}), \quad \widetilde{i_a} = \mathrm{MLP}_i(\widehat{i_a}), \quad \mathrm{IDEmb} = \mathrm{concat}(\widetilde{u_a}, \widetilde{i_a})$
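The Rotate-CLIP step and the IDEmb projection can be sketched in NumPy as follows. `slerp` uses the standard spherical-interpolation formula on L2-normalized inputs (the text does not state whether the embeddings are normalized first; we assume so), and `rotate_clip` follows the equations above literally. The random placeholder embeddings, the toy ReLU MLPs, the dimensions (512 for ArcFace, 768 for the CLIP joint space, 2048 for the SDXL text context), and stacking the two projected vectors as two tokens are all illustrative assumptions.

```python
import numpy as np


def slerp(p0: np.ndarray, p1: np.ndarray, lam: float, eps: float = 1e-6) -> np.ndarray:
    """Spherical linear interpolation: returns direction p0 at lam=0 and p1 at lam=1."""
    p0 = p0 / np.linalg.norm(p0)
    p1 = p1 / np.linalg.norm(p1)
    theta = np.arccos(np.clip(p0 @ p1, -1.0, 1.0))  # angle between the two directions
    if theta < eps:  # nearly parallel: slerp degenerates to linear interpolation
        return (1.0 - lam) * p0 + lam * p1
    return (np.sin((1.0 - lam) * theta) * p0 + np.sin(lam * theta) * p1) / np.sin(theta)


def rotate_clip(i_a: np.ndarray, t_a: np.ndarray, t_b: np.ndarray, lam: float) -> np.ndarray:
    delta_star = slerp(t_b, t_a, lam)  # Delta* = slerp(t_b, t_a, lambda), as in the text
    return i_a + delta_star            # i_hat_a = i_a + Delta*


def make_mlp(d_in: int, d_hidden: int, d_out: int, rng: np.random.Generator):
    """Toy 2-layer ReLU MLP with random weights (stand-in for the trained MLP_u / MLP_i)."""
    W1 = rng.standard_normal((d_in, d_hidden)) / np.sqrt(d_in)
    W2 = rng.standard_normal((d_hidden, d_out)) / np.sqrt(d_hidden)
    return lambda x: np.maximum(x @ W1, 0.0) @ W2


rng = np.random.default_rng(0)
D_ARC, D_CLIP, D_CTX = 512, 768, 2048  # assumed dims: ArcFace, CLIP joint space, SDXL text context
u_hat = rng.standard_normal(D_ARC)     # placeholder for the SVR-ArcFace output
i_a, t_a, t_b = (rng.standard_normal(D_CLIP) for _ in range(3))  # placeholder CLIP embeddings
i_hat = rotate_clip(i_a, t_a, t_b, lam=0.5)

mlp_u = make_mlp(D_ARC, 1024, D_CTX, rng)
mlp_i = make_mlp(D_CLIP, 1024, D_CTX, rng)
# Concatenate along the token axis -> a (2, D_CTX) sequence for cross-attention (assumed layout)
id_emb = np.stack([mlp_u(u_hat), mlp_i(i_hat)])
print(id_emb.shape)  # (2, 2048)
```

Unlike the plain difference $t_b - t_a$, `slerp` always returns a vector on the unit sphere between the two prompt directions, so its magnitude does not depend on how far apart the two age prompts happen to be in CLIP space.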

3 Results

3.1 Quantitative Comparison

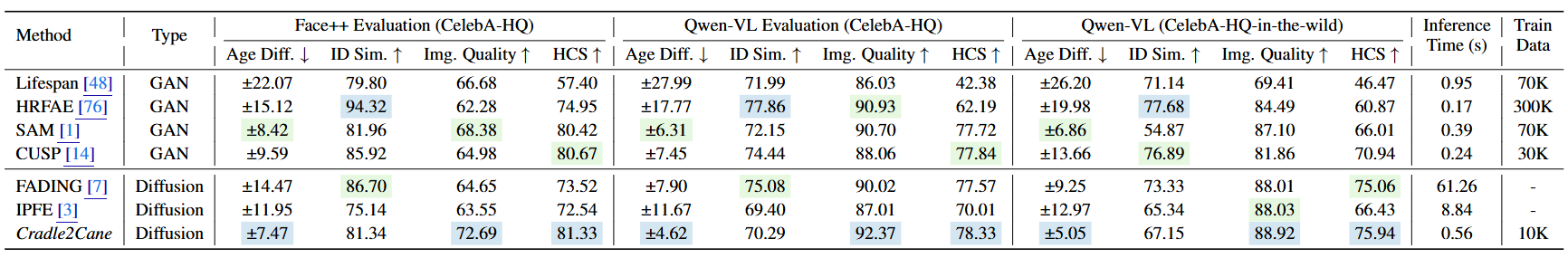

As shown in Table 1, Cradle2Cane outperforms existing face aging methods under both the Face++ and Qwen-VL evaluations on the CelebA-HQ and CelebA-HQ (in-the-wild) datasets. It achieves the lowest age estimation error, the highest image quality scores, and the best HCS values, while maintaining competitive identity preservation. Notably, Cradle2Cane achieves these results with a relatively small training set (10K) and a fast inference time (0.56 s). These results underscore the effectiveness and efficiency of our framework in balancing aging realism, identity consistency, and visual quality across diverse evaluation protocols.

Table 1. Quantitative comparison with existing face aging methods using both the Face++ and Qwen-VL evaluation protocols on the CelebA-HQ and CelebA-HQ (in-the-wild) test datasets. We report age accuracy, identity preservation, image quality, and the Harmonic Consistency Score (HCS). Best results are marked in blue and second-best in green.
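This section does not define HCS. If, as its name suggests, it is a harmonic mean of a normalized age-accuracy score and an identity-similarity score (our assumption, not the paper's stated definition), the sketch below shows why such a score suits the Age-ID trade-off: it rewards balanced performance and penalizes a method that excels on one axis only. The function name and the [0, 1] normalization are hypothetical.

```python
def harmonic_consistency(age_score: float, id_score: float) -> float:
    """Assumed HCS: harmonic mean of an age score and an identity score, both in [0, 1]."""
    if age_score <= 0.0 or id_score <= 0.0:
        return 0.0  # limit of the harmonic mean when either component is 0
    return 2.0 * age_score * id_score / (age_score + id_score)


balanced = harmonic_consistency(0.8, 0.8)  # about 0.8
lopsided = harmonic_consistency(1.0, 0.6)  # about 0.75, although the arithmetic mean is also 0.8
print(balanced, lopsided)
```

Both pairs have the same arithmetic mean, but the harmonic mean ranks the balanced pair higher, which is the behavior one would want from a single score summarizing the Age-ID trade-off.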

3.2 Qualitative Comparison

Figure 2 presents a visual comparison of face aging results between Cradle2Cane and recent GAN- and diffusion-based baselines. Our approach produces more realistic aging transitions with consistent identity preservation across all age ranges. In contrast, prior methods often exhibit texture artifacts, unrealistic aging, or identity shifts, particularly at extreme ages. Our method produces natural skin aging, hair graying, and structural changes, reflecting better modeling of facial aging patterns. These results highlight the visual fidelity and robustness of our framework.

Figure 2. Qualitative comparison with existing face aging methods across lifespan ages. Our method Cradle2Cane even reproduces natural hair changes, which previous methods fail to capture.

4 Conclusion

In this work, we tackle the fundamental challenge of achieving both age accuracy and robust identity preservation in face aging, a problem we term the Age-ID trade-off. While existing methods often prioritize one objective at the expense of the other, Cradle2Cane adopts a two-pass design that explicitly decouples these goals. Leveraging the flexibility of few-step text-to-image diffusion models, we introduce an adaptive noise injection (AdaNI) mechanism for fine-grained age control in the first pass, and reinforce identity consistency through dual identity-aware embeddings (IDEmb) in the second pass. Our method is trained end-to-end, enabling high-fidelity, controllable age transformation across the full lifespan while significantly improving inference speed and visual realism. Extensive evaluations on CelebA-HQ confirm that Cradle2Cane achieves new state-of-the-art performance in terms of both age accuracy and identity preservation. In addition, Cradle2Cane generalizes well to real-world scenarios, effectively handling in-the-wild face images, a setting where existing methods often fail.