Enabling Intelligent RAN Framework in O-RAN

Background

The O-RAN Software Community (O-RAN SC) [1] is a collaborative effort between the O-RAN Alliance and the Linux Foundation to support the reference software implementation of the architectures and specifications created by the O-RAN Alliance Working Groups. Samsung Research has steadily increased its efforts within the O-RAN SC since 2019 by contributing to the RAN Intelligent Controller (RIC) and RICAPP projects [4]. Earlier this year, Samsung Research became a member of the Technical Oversight Committee (TOC), the top decision-making group of O-RAN SC [5]. As a TOC member, we proposed a project to develop an AI/ML framework (AIMLFW) that integrates seamlessly into the O-RAN architecture. The project was motivated by the study presented in the technical report by O-RAN Alliance Working Group 2 (WG2), which deals with the Non-Real-Time (NRT) RIC [2]. The proposal was formally accepted in August 2022, and the first version is planned to be part of the “G” release of O-RAN SC. The main goal of the AIMLFW project is to create an end-to-end framework that manages and performs AI/ML-related tasks, including training, model deployment, inference, and model management.

O-RAN AI/ML Workflow and AIMLFW Project

Figure 1. ML components and terminologies in O-RAN Alliance specification [2]

O-RAN Alliance WG2 has broadly defined the required functions, components, and terms related to the AI/ML workflow, as depicted in Fig. 1. In the technical report [2], the general AI/ML lifecycle procedure and interface framework include the following key phases and functions.

- ML model and inference host capability query / discovery

- ML model generation / training / selection

- ML model deployment and inference

- ML model performance monitoring

- ML model retraining / update / reselection and termination
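The phases listed above can be read as a simple lifecycle. The following is a minimal sketch only: the phase names paraphrase the list, while the transition table and the `advance` helper are assumptions made for illustration and are not prescribed by the technical report [2].

```python
from enum import Enum


class Phase(Enum):
    DISCOVERY = "capability query / discovery"
    TRAINING = "model generation / training / selection"
    DEPLOYMENT = "model deployment and inference"
    MONITORING = "model performance monitoring"
    UPDATE = "model retraining / update / reselection"
    TERMINATED = "termination"


# Assumed transitions (illustrative only; not taken from the O-RAN report).
NEXT = {
    Phase.DISCOVERY: {Phase.TRAINING},
    Phase.TRAINING: {Phase.DEPLOYMENT},
    Phase.DEPLOYMENT: {Phase.MONITORING},
    Phase.MONITORING: {Phase.MONITORING, Phase.UPDATE, Phase.TERMINATED},
    Phase.UPDATE: {Phase.DEPLOYMENT, Phase.TERMINATED},
    Phase.TERMINATED: set(),
}


def advance(current: Phase, target: Phase) -> Phase:
    """Return the target phase, or raise if the move is not in the assumed table."""
    if target not in NEXT[current]:
        raise ValueError(f"illegal transition: {current.name} -> {target.name}")
    return target
```

In this sketch, monitoring can loop back through an update into a fresh deployment, which mirrors the idea that a deployed model is retrained or reselected when its performance degrades.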

The functions listed above had to be considered when designing the architecture for the AIMLFW project. The deployment of the various AIMLFW functionalities will vary with the potential use cases. The technical report [2] suggests some typical deployment scenarios, as listed below, that can be considered for the AI/ML architecture and framework in the O-RAN architecture.

Deployment Scenarios:

While the O-RAN Alliance WG2 defines the AI/ML workflow and deployment scenarios, no specification yet defines in detail the interfaces involved in the different phases of the workflow. Samsung Research has already developed a proof-of-concept AI/ML framework that performs training, model management, and inference for various RAN control optimization use cases [11], [12]. With this reference architecture, Samsung will contribute a basic AI/ML framework to the “G” release of O-RAN SC. Samsung believes that this framework will lay a foundation on which many developers can build in the future. The AIMLFW project can also act as a bridge, providing meaningful implementation insights to the AI/ML workflow-related specification teams within the O-RAN Alliance.

Goals and Plans

O-RAN SC is currently preparing the G release, its seventh official software release, targeted for December 2022. The AIMLFW project is planned to be part of the G release and will introduce the basic modules and functions necessary for the O-RAN AI/ML workflow.

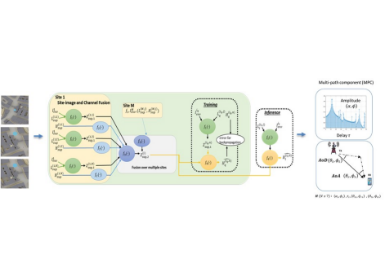

Figure 2. AIMLFW architecture

The proposed architecture, shown in Fig. 2, consists of an AI Training Host Platform for executing model training pipelines, an AI Inference Host Platform for providing inference results from a trained model, and AI Management Functions. The Training Host Platform requires a Training Platform Service (TPS) for model training, and we used Kubeflow Pipelines [6] for this purpose in the initial version. The Kubeflow project aims to make deployments of ML workflows on Kubernetes simple, portable, and scalable. A Kubeflow pipeline defines the workflow of modules related to ML model training that must be performed one after another, from data preprocessing to model validation. When the pipeline is deployed and executed, these steps run in the predetermined order on the specified data, which enables model training wherever desired. The Data Broker and the Feature Store in the Training Host Platform are required to deliver the data necessary for training to the pipeline. The model storage is required to store the trained model after the pipeline is executed.
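To make the idea of an ordered training pipeline concrete, here is a minimal sketch in plain Python. It deliberately does not use the Kubeflow Pipelines SDK; the step functions, the toy one-parameter model, the `model_store` dictionary standing in for the model storage, and the name `example-model` are all hypothetical and chosen only for illustration.

```python
from typing import Any, Callable, Dict, List, Tuple

Context = Dict[str, Any]
Step = Tuple[str, Callable[[Context], Context]]


def preprocess(ctx: Context) -> Context:
    # Drop samples with a missing feature or label.
    rows = [(x, y) for x, y in ctx["raw"] if x is not None and y is not None]
    return {**ctx, "rows": rows}


def train(ctx: Context) -> Context:
    # Toy model: least-squares fit of y = slope * x through the origin.
    num = sum(x * y for x, y in ctx["rows"])
    den = sum(x * x for x, _ in ctx["rows"])
    return {**ctx, "model": {"slope": num / den}}


def validate(ctx: Context) -> Context:
    # Mean squared error of the fitted model on the cleaned rows.
    slope = ctx["model"]["slope"]
    mse = sum((y - slope * x) ** 2 for x, y in ctx["rows"]) / len(ctx["rows"])
    return {**ctx, "mse": mse}


def store(ctx: Context) -> Context:
    # Stand-in for the model storage: keep the trained model under a name.
    ctx["model_store"]["example-model"] = ctx["model"]
    return ctx


STEPS: List[Step] = [
    ("preprocess", preprocess),
    ("train", train),
    ("validate", validate),
    ("store", store),
]


def run_pipeline(steps: List[Step], ctx: Context) -> Context:
    """Run each step in order, passing the context from one step to the next."""
    history = []
    for name, fn in steps:
        ctx = fn(ctx)
        history.append(name)
    return {**ctx, "history": history}
```

In a real deployment each step would be a containerized pipeline component and the input would arrive through the Data Broker and Feature Store; the sketch only shows the fixed ordering from preprocessing to validation and the final hand-off to model storage.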

Figure 3. Examples of image-based and file-based ML model deployment

In the initial version of AIMLFW for the G release, model serving is performed manually because our architecture uses file-based ML model deployment. Fig. 3 shows examples of the ML model deployment options introduced in the WG2 technical report [2]. There are two types of model serving methods: image-based and file-based. Compared to general Apps, ML Apps that follow the file-based approach require an additional serving platform to deploy the ML models and perform inference. To process a large volume of inference requests in a short time, inference services must be easily scalable and must balance the load of incoming requests efficiently. Therefore, we used KServe [7] as the Inference Platform Service (IPS) to serve models on the RIC platform. KServe is also an open-source project built on Kubernetes, and it can deploy an ML model as an ‘inference service’ through a Kubernetes CRD (Custom Resource Definition) [8]. KServe supports auto-scaling of inference services and load balancing of incoming data across distributed ML Apps using Istio [9] and Knative [10].
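As an illustration of file-based deployment through the KServe CRD, the sketch below builds an `InferenceService` manifest as a Python dictionary and prints it as JSON. The field layout follows KServe's `serving.kserve.io/v1beta1` API; the service name, storage URI, model format, and replica bounds are hypothetical placeholders, and this is not the manifest used by AIMLFW.

```python
import json
from typing import Any, Dict


def inference_service(
    name: str,
    storage_uri: str,
    model_format: str = "tensorflow",
    min_replicas: int = 1,
    max_replicas: int = 3,
) -> Dict[str, Any]:
    """Build a KServe InferenceService manifest for a model stored as files."""
    return {
        "apiVersion": "serving.kserve.io/v1beta1",
        "kind": "InferenceService",
        "metadata": {"name": name},
        "spec": {
            "predictor": {
                # Bounds within which the serving platform may auto-scale.
                "minReplicas": min_replicas,
                "maxReplicas": max_replicas,
                "model": {
                    "modelFormat": {"name": model_format},
                    # Location of the trained model files (placeholder URI).
                    "storageUri": storage_uri,
                },
            }
        },
    }


if __name__ == "__main__":
    manifest = inference_service("example-model", "s3://example-bucket/models/example")
    print(json.dumps(manifest, indent=2))
```

The point of the file-based approach is visible here: the manifest only references where the model files live, and the serving platform supplies the runtime, so no model-specific container image has to be built.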

To make use of these KServe features, we adopted file-based deployment of ML Apps instead of packaging the ML model as a container image. This architecture requires Assist Apps to implement an end-to-end solution for AI/ML use cases, as shown in Fig. 2. The Assist Apps help the ML Apps communicate with other Apps or platform modules to retrieve the data required for inference, and they provide the decision logic and actions that the use case requires apart from AI/ML inference. As part of AIMLFW, adapters are provided to avoid dependency on any specific open source project and to support the scalability of our framework.
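The division of labour between an Assist App, an adapter, and the serving platform might look roughly like the sketch below. The class names, the threshold rule, and the action strings are hypothetical; only the request shape (`POST /v1/models/<name>:predict` with an `instances` list, answered by a `predictions` list) follows KServe's V1 inference protocol. The HTTP call is injected as a function so that the example stays self-contained.

```python
from typing import Any, Callable, Dict, List, Protocol


class InferenceAdapter(Protocol):
    """What the Assist App needs from any serving backend."""

    def predict(self, instances: List[List[float]]) -> List[float]: ...


class KServeV1Adapter:
    """Adapter that hides KServe's V1 REST protocol behind predict()."""

    def __init__(
        self,
        base_url: str,
        model_name: str,
        post: Callable[[str, Dict[str, Any]], Dict[str, Any]],
    ) -> None:
        self.base_url = base_url
        self.model_name = model_name
        self.post = post  # injected HTTP POST: (url, json_body) -> json_reply

    def predict(self, instances: List[List[float]]) -> List[float]:
        url = f"{self.base_url}/v1/models/{self.model_name}:predict"
        reply = self.post(url, {"instances": instances})
        return reply["predictions"]


class AssistApp:
    """Fetches features, asks the ML App for a score, and decides on an action."""

    def __init__(
        self,
        fetch_features: Callable[[], List[float]],
        adapter: InferenceAdapter,
        threshold: float,
    ) -> None:
        self.fetch_features = fetch_features
        self.adapter = adapter
        self.threshold = threshold

    def step(self) -> str:
        features = self.fetch_features()
        score = self.adapter.predict([features])[0]
        # Decision logic lives in the Assist App, not in the served model.
        return "apply_control_action" if score > self.threshold else "no_action"
```

Because the Assist App depends only on the `InferenceAdapter` interface, swapping the serving platform would mean writing another adapter rather than changing the use-case logic, which is the kind of decoupling the adapters are meant to provide.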

The architecture of the AIML framework is at an initial stage and will be continuously reviewed and enhanced as the AIMLFW project progresses. Some parts missing from the initial version, such as interworking with the DMS (Deployment Management Services) in O-Cloud and interactions with other O-RAN components, will be filled in through our activities in the open source community as the AI/ML-related specifications in the O-RAN Alliance get defined.

We believe that AI/ML will be a key component of the future intelligent RAN. Given that, we are very excited about the ongoing AIMLFW project and hope that our current efforts will be a great seed for lush trees in the future.

Link to the paper

https://ieeexplore.ieee.org/document/9367572

https://ieeexplore.ieee.org/document/9681936

References

[2] AI/ML Framework - Confluence (o-ran-sc.org)

[5] https://github.com/kserve/kserve

[6] https://kubernetes.io/docs/concepts/extend-kubernetes/api-extension/custom-resources/

[9] H. Lee et al., “Hosting AI/ML Workflows on O-RAN RIC Platform”, 2020 IEEE Globecom Workshop, 2020.