
Towards LLM-Based Automatic Boundary Detection for Human-Machine Mixed Text

1. Introduction

Large Language Models (LLMs) have advanced significantly, particularly since the debut of ChatGPT, and can now produce coherent, natural-sounding text across a wide range of applications. However, the proliferation of generated text has raised concerns about the potential misuse of these models. One major issue is the tendency of LLMs to produce hallucinated content, resulting in text that is factually inaccurate, misleading, or nonsensical. Another is the inappropriate use of LLMs for text generation purposes. Accurately distinguishing between human-authored and machine-generated text is therefore crucial to addressing these challenges effectively.

This study addresses the challenge of token-level boundary detection in mixed texts, where the text sequence starts with a human-written segment followed by a machine-generated portion. The objective is to accurately determine the transition point between the human-written and LLM-generated sections. To achieve this, we frame the task as a token classification problem and apply LLMs to it. Through experiments with LLMs that excel at capturing long-range dependencies, we demonstrate the effectiveness of our approach. Notably, by leveraging an ensemble of multiple LLMs, we achieved first place in Task 8 of the SemEval’24 competition.

2. Method

The task is defined as follows: for a hybrid text <w1, w2, ..., wn> of length n that includes both human-written and machine-generated segments, the objective is to determine the index k such that the first k words are written by humans and the remaining words are generated by large language models (LLMs). The evaluation metric for this task is Mean Absolute Error (MAE), which measures the absolute distance between the predicted word and the actual word at which the switch between human and machine occurs.
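As a minimal sketch of the metric, assuming one predicted and one true boundary index per text (the function name and the sample values are illustrative, not taken from the competition data):

```python
def mean_absolute_error(predicted, actual):
    """Average absolute distance between predicted and true boundary indices."""
    if len(predicted) != len(actual):
        raise ValueError("predicted and actual must have the same length")
    if not predicted:
        raise ValueError("at least one example is required")
    return sum(abs(p - a) for p, a in zip(predicted, actual)) / len(predicted)


# Illustrative values only: three texts with word-level boundary indices.
predicted_boundaries = [10, 25, 7]
true_boundaries = [12, 25, 4]
mae = mean_absolute_error(predicted_boundaries, true_boundaries)
```

A prediction that is off by two words and one that is off by three words contribute 2 and 3 to the sum, so the metric directly rewards predictions that land close to the true transition point.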

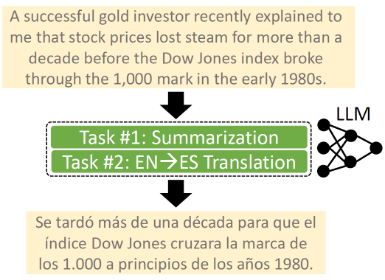

Figure 1. Framework of this paper

We explore LLMs’ capability to detect boundaries for human-machine mixed texts. The framework of this paper is shown in Figure 1. Given the labeled dataset containing boundary indices, we first map these indices to assign each token a label denoting whether it originates from human writing or LLM generation. Subsequently, we harness the capabilities of LLMs by fine-tuning them for the task of classifying each token’s label. To enhance performance further, we employ an ensemble strategy that consolidates predictions from multiple LLMs. Additionally, we explore various factors that impact the effectiveness of LLMs in boundary detection.
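The index-to-label mapping can be sketched as follows. This is an illustration under stated assumptions rather than the exact preprocessing used here: `word_ids` is a hypothetical list giving, for each subword token, the index of the word it belongs to (with `None` for special tokens), label 0 denotes human and 1 denotes machine, and -100 is assumed as the "ignore" label for special tokens.

```python
IGNORE_LABEL = -100  # assumption: label value skipped by the training loss


def token_labels(word_ids, k):
    """Label each token 0 (human) if its word index is below k, else 1 (machine)."""
    labels = []
    for word_index in word_ids:
        if word_index is None:
            labels.append(IGNORE_LABEL)  # special token, not part of the text
        else:
            labels.append(0 if word_index < k else 1)
    return labels


def boundary_from_labels(word_ids, labels):
    """Recover the word-level boundary: the first word with a machine-labeled token."""
    for word_index, label in zip(word_ids, labels):
        if word_index is not None and label == 1:
            return word_index
    # No machine token found: treat the whole text as human-written.
    return max(w for w in word_ids if w is not None) + 1


# Hypothetical tokenization of a five-word text (words 1 and 3 split in two).
word_ids = [None, 0, 1, 1, 2, 3, 3, 4, None]
labels = token_labels(word_ids, k=3)
recovered_k = boundary_from_labels(word_ids, labels)
```

The second function shows the inverse step needed at inference time, when per-token predictions have to be converted back into a single boundary index.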

2.1 LLMs Supporting Long-range Dependencies

Our objective is to detect boundaries in long text sequences, which requires LLMs capable of handling long-range dependencies. In this study, we investigate the performance of Longformer, XLNet, and BigBird on boundary detection. Longformer combines global attention with local window-based attention, which enables the model to capture both short-range and long-range dependencies effectively. XLNet introduces a training objective called permutation language modeling, which considers all possible permutations of the input tokens during training. This allows XLNet to capture bidirectional context more effectively and mitigates the limitations of autoregressive models. BigBird introduces a sparse attention mechanism that allows the model to scale to longer sequences while maintaining computational efficiency.
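To make the combination of local and global attention concrete, the following simplified sketch builds a boolean attention mask. It is an illustration only: the function name, the window size, and the choice of global positions are assumptions, and real implementations compute this pattern far more efficiently than a dense matrix.

```python
def sparse_attention_mask(seq_len, window, global_positions):
    """mask[i][j] is True when token i may attend to token j."""
    global_set = set(global_positions)
    mask = []
    for i in range(seq_len):
        row = []
        for j in range(seq_len):
            is_local = abs(i - j) <= window                 # sliding window
            is_global = i in global_set or j in global_set  # symmetric global attention
            row.append(is_local or is_global)
        mask.append(row)
    return mask


# Eight tokens, one neighbor on each side, and token 0 attending globally.
mask = sparse_attention_mask(seq_len=8, window=1, global_positions=[0])
allowed_pairs = sum(sum(row) for row in mask)
```

With a fixed window, the number of allowed pairs grows roughly linearly with sequence length instead of quadratically, which is what makes long inputs tractable.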

2.2 Exploration of Potential Factors

Beyond the direct use of LLMs, we investigate factors that may influence their capability in the boundary detection task.

While LLMs demonstrate remarkable proficiency in comprehending semantics and generating coherent text, the addition of supplementary layers on top of LLMs has the potential to yield further improvements for downstream tasks. Therefore, we evaluate the impact of additional layers, such as LSTM and CRF, when integrated with LLMs, to ascertain their potential contributions to enhancing performance in boundary detection tasks.

Token classification involves assigning specific categories to individual tokens within a text sequence based on their semantic content. Typically, evaluation metrics gauge the average accuracy of category assignments across all tokens. However, in the context of boundary detection, the labels of tokens situated at or in proximity to the boundary hold greater significance. To bridge this gap, we introduce loss functions capable of assessing segment accuracy for both the human-written and machine-generated segments, such as the dice loss function. These loss functions, commonly utilized in image segmentation tasks, are anticipated to enhance performance in boundary detection tasks.
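A soft Dice loss over a token sequence can be sketched as below. This is one common formulation, not necessarily the exact variant evaluated here: averaging the Dice score over the human and machine segments, the smoothing constant `eps`, and the function names are all assumptions.

```python
def soft_dice(probs, targets, eps=1e-6):
    """Soft Dice coefficient: 2 * overlap / (predicted mass + target mass)."""
    intersection = sum(p * t for p, t in zip(probs, targets))
    total = sum(probs) + sum(targets)
    return (2 * intersection + eps) / (total + eps)


def two_segment_dice_loss(machine_probs, labels):
    """1 minus the mean Dice score of the machine segment and the human segment."""
    machine_dice = soft_dice(machine_probs, labels)
    human_dice = soft_dice([1 - p for p in machine_probs],
                           [1 - y for y in labels])
    return 1 - 0.5 * (machine_dice + human_dice)


labels = [0, 0, 0, 1, 1, 1]                    # true boundary at token 3
exact = [0.0, 0.0, 0.0, 1.0, 1.0, 1.0]         # boundary predicted correctly
late = [0.0, 0.0, 0.0, 0.0, 0.0, 1.0]          # boundary predicted two tokens late
loss_exact = two_segment_dice_loss(exact, labels)
loss_late = two_segment_dice_loss(late, labels)
```

Because the score depends on segment overlap rather than on a per-token average, a misplaced boundary is penalized in proportion to how much of each segment it misassigns.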

Within the competition, a total of 4,154 cases are provided for this task. The remaining sub-tasks revolve around human-machine text classification. A natural idea is to pretrain on the text classification data and then fine-tune on the boundary detection data to enhance the model’s overall generalization capability. Two distinct pretraining approaches are employed. In Pretrain 1, human-written texts and machine-generated texts are concatenated to form a new boundary detection dataset at the sentence level. Within this new dataset, boundaries are located at the point where the human-written and machine-generated sentences join. In Pretrain 2, a binary text classification model consisting of an LLM with a linear layer on top is trained first. The weights of the LLM are then used as the starting point for fine-tuning on the boundary detection task.
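The construction of a Pretrain 1 example can be sketched as follows, assuming whitespace tokenization into words and one human text paired with one machine text. How texts are paired or sampled is not specified above, so the helper name and the sample sentences are purely illustrative.

```python
def make_mixed_example(human_text, machine_text):
    """Concatenate a human text and a machine text; the boundary is the join point."""
    human_words = human_text.split()
    machine_words = machine_text.split()
    return {
        "text": " ".join(human_words + machine_words),
        "boundary": len(human_words),  # index of the first machine-generated word
    }


# Illustrative sentences, not drawn from the competition data.
example = make_mixed_example(
    "The cat sat on the mat.",
    "It then considered its options carefully.",
)
first_machine_word = example["text"].split()[example["boundary"]]
```

Each synthetic example has the same shape as a real boundary detection case, so the same token classification pipeline can be reused for pretraining.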

3. Results

3.1 Performance of different LLMs

We investigate three LLMs renowned for their ability to handle long-range dependencies: Longformer, XLNet, and BigBird. We exclusively employ the large versions of these models. Moreover, as a benchmark for the competition, we utilize Longformer-base. The performance metrics of these four models are outlined in Table 1.

Table 1. Performance of varied LLMs

Table 2. Performance of XLNet ensemble

We observe that Longformer-large outperforms Longformer-base, owing to its increased parameter count. Among the four models, XLNet achieves the best performance, with an MAE of 2.44. This represents a reduction of 31.84% compared to Longformer-large and a substantial 58.71% decrease compared to BigBird-large. One potential explanation is that considering all possible permutations of the input tokens during training helps XLNet capture bidirectional context more effectively.

The winning approach is an ensemble of two XLNet models trained with different random seeds. It combines the output logits of the individual XLNet models through a simple voting process. As shown in Table 2, the voting strategy reduces the MAE from 2.44 to 2.22.
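One way such logit-level voting could work is sketched below. The exact voting rule is not detailed above, so this sketch assumes that per-token logits are averaged across models and that the boundary is the first token whose machine logit exceeds its human logit; the logit values are made up for illustration.

```python
def average_logits(per_model_logits):
    """per_model_logits[m][t] is [human_logit, machine_logit] for model m, token t."""
    n_models = len(per_model_logits)
    n_tokens = len(per_model_logits[0])
    averaged = []
    for t in range(n_tokens):
        human = sum(model[t][0] for model in per_model_logits) / n_models
        machine = sum(model[t][1] for model in per_model_logits) / n_models
        averaged.append([human, machine])
    return averaged


def predict_boundary(logits):
    """Index of the first token classified as machine-generated."""
    for t, (human, machine) in enumerate(logits):
        if machine > human:
            return t
    return len(logits)  # no machine token predicted


# Made-up logits from two models that disagree about token 2.
model_a = [[2.0, -1.0], [1.5, 0.0], [0.2, 0.4], [-1.0, 2.0]]
model_b = [[2.5, -2.0], [1.0, -0.5], [0.9, 0.1], [-0.5, 1.5]]
ensemble_boundary = predict_boundary(average_logits([model_a, model_b]))
```

Here the first model is only weakly confident that token 2 is machine-generated, while the second is more confident that it is human-written, so averaging resolves the disagreement in favor of the more confident model.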

3.2 Performance of LLMs with extra layers

We select Longformer-large as our baseline model and examine the impact of adding LSTM, BiLSTM, and CRF layers on boundary detection. The experimental results are detailed in Table 3. Integrating LSTM and BiLSTM layers with Longformer leads to significant improvements, reducing the MAE by 10.61% and 23.74%, respectively. Conversely, adding a CRF layer to Longformer-large yields unsatisfactory results. One plausible explanation is the lack of clear dependencies between the two labels (0 and 1).

Table 3. Performance of adding extra layers

Table 4. Performance of different segment loss functions

3.3 Performance with segment loss functions

We investigate the impact on boundary detection of segment loss functions commonly used in image segmentation. The selected loss functions are BCE-dice loss, Jaccard loss, Focal loss, Combo loss, and Tversky loss. Additionally, we introduce a novel loss function, BCE-MAE, obtained by simply adding BCE and MAE.

We utilize Longformer-large as the baseline, which by default adopts the binary cross-entropy loss. We explore the impact of adjusting the loss functions and present the results in Table 4. Among these variations, BCE-dice loss, Combo loss, and the BCE-MAE loss demonstrate superior performance compared to the default BCE loss.

Introducing the Dice loss balances segmentation accuracy against token-wise classification accuracy, which yields the anticipated performance improvements. Compared to the BCE baseline, the MAE decreases by 12.29% and 13.69% for the two Dice-based losses (BCE-dice and Combo), respectively. The BCE-MAE loss incorporates an MAE term during training, aligning the objective with the evaluation metric used in the competition. As anticipated, the MAE metric decreases by 16.48%.
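A sum of BCE and MAE can be sketched at the token level as follows. This assumes that the MAE term is the mean absolute difference between the predicted machine probability and the 0/1 label of each token, which is one plausible reading; the clipping constant and function names are likewise assumptions.

```python
import math


def bce(probs, labels, eps=1e-7):
    """Mean binary cross-entropy between predicted probabilities and 0/1 labels."""
    total = 0.0
    for p, y in zip(probs, labels):
        p = min(max(p, eps), 1 - eps)  # clip to avoid log(0)
        total += -(y * math.log(p) + (1 - y) * math.log(1 - p))
    return total / len(probs)


def token_mae(probs, labels):
    """Mean absolute difference between predicted probabilities and labels."""
    return sum(abs(p - y) for p, y in zip(probs, labels)) / len(probs)


def bce_mae_loss(probs, labels):
    """Unweighted sum of the two terms."""
    return bce(probs, labels) + token_mae(probs, labels)


labels = [0, 1]
confident_loss = bce_mae_loss([0.1, 0.9], labels)
uncertain_loss = bce_mae_loss([0.5, 0.5], labels)
```

The MAE term grows linearly with the prediction error, whereas BCE grows logarithmically, so the sum keeps a steady gradient on tokens that are only mildly wrong.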

3.4 Performance with pretraining

For both pretraining approaches, we evaluate three settings: using Longformer-large directly for pretraining and fine-tuning, and adding an LSTM or a BiLSTM layer on top of it. Table 5 presents the results.

The results of the two pretraining approaches indicate that pretraining on either sentence-level boundary detection or the binary human-machine text classification task can enhance LLMs’ capability to detect token-wise boundaries in mixed texts.

Table 5. Performance of Longformer-large with pretraining

4. Conclusion

This paper introduces an LLM-based methodology for detecting token-wise boundaries in human-machine mixed texts. By investigating how to apply LLMs to this task, we achieved the best performance in the SemEval’24 competition with an ensemble of XLNet models. Furthermore, we explore factors that could affect the boundary detection capabilities of LLMs. Our findings indicate that (1) loss functions that consider segmentation intersection can effectively handle tokens surrounding boundaries; (2) supplemental layers such as LSTM and BiLSTM contribute additional performance gains; and (3) pretraining on analogous tasks helps reduce the MAE. This paper establishes a state-of-the-art benchmark for future research on the newly released dataset. Future work will aim to further advance the capabilities of LLMs in detecting boundaries within mixed texts.