
AI-Driven Modem Innovations for Shaping the Future RAN

Samsung Research is preparing for future wireless technologies through convergence research on AI and communications, in which AI-native approaches drive the design and operation of the entire RAN (Radio Access Network) and CN (Core Network). In the Communications-AI Convergence blog series, we plan to introduce the following research topics: #1. Modem I, #2. Modem II, #3. Protocol/Networking & Operation, and #4. Radio Resource Scheduler. This blog covers AI applications to channel estimation, channel prediction, and signal validation, which have a significant impact on spectral efficiency and transmission performance.

1. Introduction

Wireless communication systems have evolved rapidly to meet the increasing demands for high data rates, low latency, and reliable connectivity. As systems transition to 5G and beyond, advanced multi-antenna and beamforming technologies have become essential to enhance spectral efficiency and user experience. A key enabler of these technologies is accurate channel estimation and prediction, which provide the transmitter and receiver with essential information about the wireless propagation environment. However, conventional model-based channel estimation approaches often suffer from performance degradation under practical conditions, such as noise, interference, and dynamic channel variations.

Recently, artificial intelligence (AI) has emerged as a promising solution to overcome the limitations of traditional channel estimation techniques [1]. AI-based methods have demonstrated the ability to learn complex channel behaviors and improve the estimation accuracy of channel state information. In this blog, we present our novel AI-based approaches to improving the accuracy of channel prediction for the downlink (DL) and channel estimation for the uplink (UL).

2. AI-based Downlink Enhancement

Sounding Reference Signals (SRSs) provide base stations with crucial uplink information for downlink precoding and beamforming, directly influencing system throughput and reliability. However, conventional prediction techniques are challenged by noise, interference, and the dynamic nature of wireless channels. To address these limitations, AI-based prediction of channel characteristics enables the estimation of future channel states during intervals without SRS transmissions. This approach improves beamforming accuracy, optimizes resource utilization, and significantly increases downlink throughput, making AI-driven techniques highly promising for next-generation wireless networks.

AI Prediction of Channel Characteristics

Wireless communication systems often face the challenge of predicting channel characteristics at the time of scheduling, because the channel estimates obtained through SRS are already outdated by then. Channel characteristics, including delay spread, angular spread, Doppler spread, power delay profile, and Rician K-factor, are fundamental physical-layer properties that influence wireless signal transmission and reception. Accurate prediction of these characteristics is therefore crucial for optimizing performance and improving spatial efficiency through high beamforming performance.
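As a concrete reference for one of these characteristics, the RMS delay spread is the power-weighted standard deviation of the path delays in a power delay profile. The snippet below is a minimal sketch of that standard definition; the function name and the two-path example values are illustrative assumptions and are not taken from the framework described in this blog.

```python
import numpy as np

def rms_delay_spread(delays_s, powers_lin):
    """Power-weighted standard deviation of path delays, in seconds."""
    delays = np.asarray(delays_s, dtype=float)
    powers = np.asarray(powers_lin, dtype=float)
    weights = powers / powers.sum()              # normalize the profile to unit power
    mean_delay = np.sum(weights * delays)        # first moment (mean excess delay)
    second_moment = np.sum(weights * delays**2)  # second moment
    return float(np.sqrt(max(second_moment - mean_delay**2, 0.0)))

# Hypothetical two-path profile: equal power at 0 ns and 100 ns.
ds = rms_delay_spread([0.0, 100e-9], [1.0, 1.0])
print(f"RMS delay spread: {ds * 1e9:.1f} ns")
```

For two equal-power paths the result is half the delay separation, which makes the function easy to sanity-check by hand.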

The challenge of estimating radio channel characteristics has been addressed in many previous studies. Existing line-of-sight (LOS) estimation techniques often compare skewness, kurtosis, and K-factor against predefined thresholds, and this threshold dependency limits their adaptability across diverse environments. Similarly, delay spread (DS) estimation methods, which rely on channel correlation and path selection, often struggle with robustness under noisy conditions. Moreover, all of these studies are based on the current channel and do not predict future channel characteristics.
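To make the threshold dependency concrete, here is a minimal sketch of one conventional rule of this kind: a moment-based Rician K-factor estimate compared against a fixed threshold. The threshold value, the function names, and the synthetic fading samples are all illustrative assumptions; skewness and kurtosis tests would follow the same pattern.

```python
import numpy as np

def estimate_k_factor(h):
    """Moment-based Rician K-factor estimate (linear scale) from complex samples."""
    g = np.abs(np.asarray(h)) ** 2              # instantaneous power gain
    g_mean, g_var = g.mean(), g.var()
    a = np.sqrt(max(g_mean**2 - g_var, 0.0))    # estimated power of the fixed (LOS) part
    scattered = g_mean - a                      # estimated power of the scattered part
    return float(a / scattered) if scattered > 0 else float("inf")

def is_los(h, k_threshold=1.0):
    """Fixed-threshold decision; k_threshold is an arbitrary illustrative value."""
    return estimate_k_factor(h) > k_threshold

def rician_samples(k, n, rng):
    """Unit-power Rician fading samples with linear K-factor k (synthetic data)."""
    los = np.sqrt(k / (k + 1.0))
    sigma = np.sqrt(1.0 / (2.0 * (k + 1.0)))
    return los + sigma * (rng.standard_normal(n) + 1j * rng.standard_normal(n))

rng = np.random.default_rng(0)
h_los = rician_samples(5.0, 20000, rng)    # strong direct path
h_nlos = rician_samples(0.0, 20000, rng)   # pure Rayleigh fading
print(is_los(h_los), is_los(h_nlos))
```

The decision flips wherever the estimate crosses `k_threshold`, so a value tuned for one environment can misclassify channels in another, which is the limitation described above.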

To overcome these challenges, we propose a novel AI-based wireless channel characteristics prediction framework (AI-WCP). By leveraging the interactions among multiple channel characteristics over time, AI-WCP offers superior prediction performance compared to conventional methods that estimate each characteristic separately. This approach enhances flexibility and robustness by effectively exploiting the interdependence among channel characteristics to achieve more accurate and reliable predictions.

Figure 1. AI-WCP architecture

Figure 1 illustrates the architecture of AI-WCP, consisting of two main components: multiple encoders and a neural network. The encoders extract latent representations for each channel characteristic, while the neural network simultaneously predicts all characteristics by utilizing attention mechanisms to capture their relationships. Among the various characteristics, only LOS and DS prediction accuracy is evaluated for UMi (Urban Micro) and UMa (Urban Macro) channels, as shown in Figure 2. The results demonstrate that AI-WCP achieves approximately 10% higher accuracy on average for both LOS and DS compared to existing methods.
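The encoder-plus-attention structure can be pictured with a toy forward pass. The sketch below is not the AI-WCP implementation: the layer types, latent width, history length, set of characteristics, and random untrained weights are all assumptions chosen only to show how per-characteristic latent vectors can be mixed by attention before each characteristic is predicted.

```python
import numpy as np

rng = np.random.default_rng(0)
D = 16  # latent width (assumed)

def softmax(x, axis=-1):
    x = x - x.max(axis=axis, keepdims=True)
    e = np.exp(x)
    return e / e.sum(axis=axis, keepdims=True)

class Encoder:
    """One linear + tanh encoder per channel characteristic (hypothetical)."""
    def __init__(self, in_dim):
        self.w = rng.standard_normal((in_dim, D)) / np.sqrt(in_dim)

    def __call__(self, x):
        return np.tanh(x @ self.w)

def self_attention(tokens, wq, wk, wv):
    """Single-head scaled dot-product attention across characteristic tokens."""
    q, k, v = tokens @ wq, tokens @ wk, tokens @ wv
    attn = softmax(q @ k.T / np.sqrt(D))   # (n_char, n_char) mixing weights
    return attn @ v, attn

# Hypothetical input: 8 past observations for each of four characteristics.
names = ["los", "delay_spread", "doppler_spread", "k_factor"]
encoders = {n: Encoder(8) for n in names}
wq, wk, wv = (rng.standard_normal((D, D)) / np.sqrt(D) for _ in range(3))
heads = {n: rng.standard_normal(D) / np.sqrt(D) for n in names}

history = {n: rng.standard_normal(8) for n in names}
tokens = np.stack([encoders[n](history[n]) for n in names])   # (4, D) latents
mixed, attn = self_attention(tokens, wq, wk, wv)
predictions = {n: float(mixed[i] @ heads[n]) for i, n in enumerate(names)}
```

Each row of `attn` shows how much one characteristic's prediction draws on the latent vectors of the others, which is the kind of interdependence the joint predictor is meant to exploit.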

Figure 2. Performance of AI-WCP

3. AI-based Uplink Enhancement

In uplink transmissions, the Demodulation Reference Signal (DMRS) plays a vital role as the primary reference signal for channel estimation and data demodulation. However, the uplink faces several challenges, including dynamic channel variations, cell-specific propagation environments, and unpredictable transmission anomalies. To address these issues, AI-based techniques have been actively explored to improve channel estimation, environment-aware modeling, and signal validation. These approaches collectively contribute to robust and efficient uplink communication, which is essential for supporting next-generation wireless systems.

AI Channel State Estimation (CSE)

AI-based channel state estimation serves as a direct solution for improving the accuracy of DMRS-based channel estimation in uplink transmissions. This subsection focuses on how AI techniques enhance the estimation of essential channel characteristics such as delay spread and Doppler spread, which are critical for robust uplink communication.

CSE is a pivotal component of wireless communication systems, as it improves receiver performance and optimizes transmission parameters for reliable and efficient data transfer. Among the various aspects of channel state, delay spread and Doppler spread are particularly noteworthy. Traditional non-AI channel state estimation primarily employs statistical methods and modeling techniques to estimate channel conditions. While these methods offer relatively simple computations and low complexity, they struggle to accurately capture the complexities and uncertainties of real-world environments. For instance, it is challenging to precisely model complex channel characteristics such as multipath fading, and rapidly changing channel states can be difficult to estimate in real time. Additionally, these methods operate under fixed parameters and assumptions, limiting their applicability in diverse environments.
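A typical example of such a statistical method is estimating the maximum Doppler frequency from the correlation between consecutive channel estimates under the classical Jakes model, where the autocorrelation at lag dt is J0(2·pi·fd·dt). The sketch below uses the small-argument approximation J0(x) ≈ 1 - x²/4. The function names, the symbol spacing, and the synthetic sum-of-sinusoids channel are illustrative assumptions, not the estimator used in our system.

```python
import numpy as np

def lag1_correlation(h):
    """Normalized real lag-1 autocorrelation of a complex channel time series."""
    h = np.asarray(h)
    num = np.vdot(h[:-1], h[1:]).real   # sum of conj(h[n]) * h[n+1]
    den = np.sqrt(np.vdot(h[:-1], h[:-1]).real * np.vdot(h[1:], h[1:]).real)
    return float(num / den)

def doppler_from_correlation(rho, dt):
    """Invert rho = 1 - (pi * fd * dt)**2 for the maximum Doppler fd in Hz."""
    return float(np.sqrt(max(1.0 - rho, 0.0)) / (np.pi * dt))

# Synthetic isotropic-scattering channel (assumed parameters).
rng = np.random.default_rng(0)
fd_true, dt, n_paths, n_samples = 100.0, 0.5e-3, 64, 4000
angles = rng.uniform(0.0, 2.0 * np.pi, n_paths)
phases = rng.uniform(0.0, 2.0 * np.pi, n_paths)
t = np.arange(n_samples) * dt
path_doppler = 2.0 * np.pi * fd_true * np.cos(angles)[:, None]
h = np.exp(1j * (path_doppler * t + phases[:, None])).sum(axis=0) / np.sqrt(n_paths)

fd_hat = doppler_from_correlation(lag1_correlation(h), dt)
print(f"true fd = {fd_true:.0f} Hz, estimated fd = {fd_hat:.0f} Hz")
```

The inversion is only valid when the scattering really is isotropic and fd·dt is small. This is a concrete instance of the fixed assumptions mentioned above: a channel dominated by a single direct path would bias the estimate.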

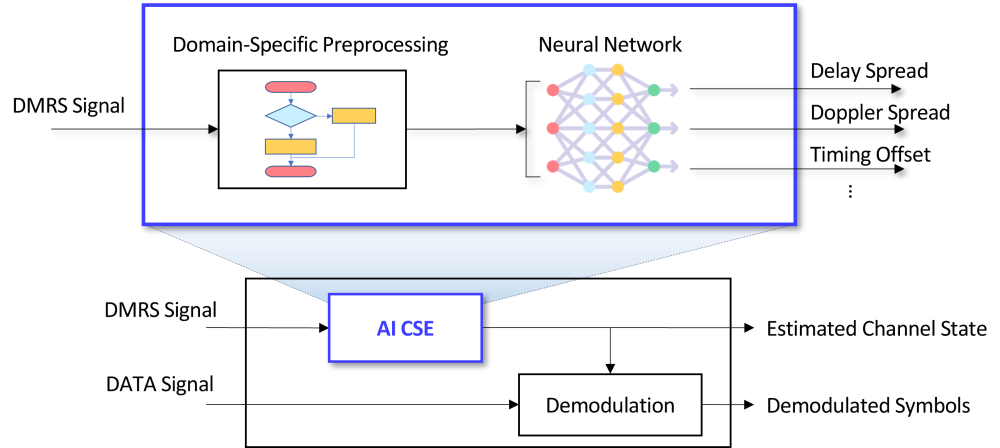

Figure 3. Real-time CSE framework

Figure 3 illustrates our proposed real-time CSE framework. Performing short-term CSE in real time requires a lightweight model. To achieve this, we employ an optimal preprocessing technique that leverages domain knowledge to accurately estimate delay spread and Doppler spread. This preprocessing approach plays a crucial role in enhancing CSE performance. Our proposed real-time CSE technology enables more precise delay spread and Doppler spread estimation, leading to an SNR gain of over 1 dB and a throughput improvement of more than 15%, as shown in Figure 4.

Figure 4. Performance improvement of the AI-based CSE

On-Site Generative Learning Based Distribution-Aware Channel State Estimation

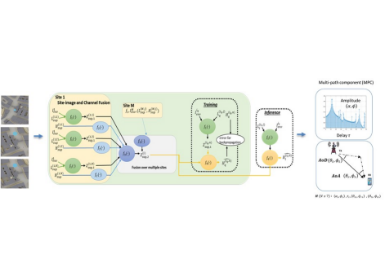

In next-generation mobile communication systems such as 5G-Advanced and 6G, efficient wireless resource management is essential to support ultra-high-speed data transmission and ultra-low-latency communications. To this end, the network needs to accurately analyze and estimate the wireless channel characteristics between the user equipment and the base station within a given cell. Wireless channel characteristics have so far been estimated using signal processing techniques and deep learning-based data analysis techniques. However, existing techniques have limitations in fully capturing the dynamic characteristics of multipath fading, interference, and mobility in complex wireless environments. Additionally, high computational cost and data scarcity make real-time application challenging in many cases. The On-Site Generative AI (OSG-AI) introduced in this blog is a technology for estimating channel characteristics in wireless communication systems. Specifically, it enables more accurate estimation of wireless channel characteristics and system parameter optimization through on-site data collection and generative neural networks that learn the distribution of wireless characteristics.

Figure 5. Diverse cellular wireless environments

The OSG-AI overcomes the limitations of existing methods by leveraging on-site data collection and generative neural networks, enabling more precise channel characteristic estimation. This capability can enhance the performance of next-generation mobile communication networks. Examples of cells with diverse channel characteristics include residential cells with a predominance of low-speed user equipment (UE), highway cells with a high number of high-speed UEs, rural cells with a high degree of line-of-sight, and urban cells with frequent multipath fading due to high building density, as shown in Figure 5.

Figure 6 shows the life cycle of OSG-AI, from data collection to model update and inference, when estimating Doppler, one of the wireless channel characteristics. Before on-site data collection, a generally pre-trained neural network is applied to cover all environments from low speed to high speed. OSG-AI stores three items in the on-site buffer: the latent representation extracted from the received signal that is needed to estimate wireless channel characteristics, the estimated channel characteristic value, and the reliability of that estimate. After the necessary data collection is completed, the collected data is used to train the OSG-AI with a GAN (Generative Adversarial Network) method. After training is completed, the OSG-AI is applied to estimate Doppler with improved estimation performance.
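The collection stage of this life cycle can be pictured as a small per-cell buffer holding the three stored items. The following is a minimal sketch under stated assumptions: the class and field names, the fixed capacity, and the use of the reliability value to filter training samples are hypothetical and not taken from the OSG-AI design.

```python
from collections import deque
from dataclasses import dataclass
from typing import Deque, List, Tuple

@dataclass(frozen=True)
class OnSiteSample:
    latent: Tuple[float, ...]   # latent representation extracted from the received signal
    estimate: float             # estimated characteristic, e.g. Doppler in Hz
    reliability: float          # confidence of the estimate, assumed to lie in [0, 1]

class OnSiteBuffer:
    """Fixed-capacity store of per-cell samples collected before on-site training."""

    def __init__(self, capacity: int):
        self.samples: Deque[OnSiteSample] = deque(maxlen=capacity)

    def add(self, sample: OnSiteSample) -> None:
        self.samples.append(sample)   # the oldest sample is dropped once full

    def ready_for_training(self) -> bool:
        return len(self.samples) == self.samples.maxlen

    def training_set(self, min_reliability: float = 0.0) -> List[OnSiteSample]:
        # Filtering by reliability is an assumption about how the stored value is used.
        return [s for s in self.samples if s.reliability >= min_reliability]

buffer = OnSiteBuffer(capacity=3)
for est, rel in [(480.0, 0.9), (510.0, 0.4), (495.0, 0.8)]:
    buffer.add(OnSiteSample(latent=(0.1, -0.2), estimate=est, reliability=rel))

if buffer.ready_for_training():
    selected = buffer.training_set(min_reliability=0.5)
    print([s.estimate for s in selected])
```

Once the buffer is full, its contents would be handed to the generative training step. That step is outside the scope of this sketch.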

Figure 6. An illustration of On-Site Generative AI learning and inference

The OSG-AI can estimate wireless channel characteristics even in weak fields (low-SNR conditions) by reflecting the distribution of wireless characteristics for each cell through on-site data collection and generative training. Figure 7 compares the performance of signal processing-based non-AI algorithms, pre-trained AI, and OSG-AI at a Doppler of 500 Hz for SNRs of 10 dB and -10 dB.

Figure 7. OSG-AI Doppler estimation performance

OSG-AI, which has learned the distribution of Doppler in the cell through data collection, performs Doppler estimation stably even in weak fields. By learning the statistical distribution of channel characteristics observed at the cell site, OSG-AI can enable performance improvements beyond the limitations of traditional estimation approaches that rely solely on instantaneous received signals.
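One simple way to see why knowledge of the per-cell distribution helps at low SNR is the textbook Gaussian case, where a prior over the cell's Doppler values is combined with a noisy instantaneous estimate. This is only an intuition aid and not the GAN-based OSG-AI procedure; the function name and all numbers below are made-up assumptions.

```python
def fuse_with_cell_prior(prior_mean, prior_var, observed, observed_var):
    """Posterior mean and variance for a Gaussian prior and a Gaussian observation."""
    gain = prior_var / (prior_var + observed_var)   # trust placed in the observation
    post_mean = prior_mean + gain * (observed - prior_mean)
    post_var = (1.0 - gain) * prior_var
    return post_mean, post_var

# Hypothetical cell whose Doppler values cluster around 500 Hz (std 50 Hz).
prior_mean, prior_var = 500.0, 50.0**2

# High SNR: the instantaneous estimate is precise, so it dominates.
high_snr = fuse_with_cell_prior(prior_mean, prior_var, observed=560.0, observed_var=10.0**2)

# Low SNR: the instantaneous estimate is unreliable, so the cell prior dominates.
low_snr = fuse_with_cell_prior(prior_mean, prior_var, observed=900.0, observed_var=400.0**2)

print(f"high SNR: {high_snr[0]:.1f} Hz, low SNR: {low_snr[0]:.1f} Hz")
```

When the observation variance is large, the fused value stays near the cell's typical Doppler instead of following the noisy measurement, which mirrors the stable weak-field behavior described above.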

AI Validity Check of Received Signals

Beyond DMRS-based CSI estimation, uplink reliability also depends on the ability to detect abnormal transmission behaviors and anomalies after signal decoding. Unlike the previous subsections, which focus on estimating channel states, this subsection addresses signal validation based on decoding outcomes, where AI-based classifiers play a crucial role in detecting transmission abnormalities such as discontinuous transmission (DTX) and unintended transmission (UTX).

AI-based classification techniques are integral to decision-making processes in cellular communication systems, particularly in determining the validity of modem operations. Under normal circumstances, the transmitter and receiver establish a well-aligned communication session. However, disruptions caused by conditions such as DTX and UTX often hinder reliable communication.

Failure to identify and address these anomalies can lead to significant errors and inefficiencies in higher-layer processes. Therefore, robust signal validation methods are essential to ensure reliable operations in modern cellular systems.

Cyclic redundancy check (CRC) codes are a standard tool for error detection. However, their application can compromise transmission efficiency, especially for small message blocks. In 4G and 5G cellular systems, small control information blocks are often transmitted without CRC encoding, necessitating alternative methods to validate decoding results effectively.

Channel coding, a foundational component of communication systems, defines valid bit sequences through parity-check equations. Historically, signal validation has relied on soft metrics generated by channel decoders, with the accuracy of decoded results assessed against predefined thresholds. However, this threshold-based approach is impractical in real-world systems, where thresholds must be uniquely determined for a vast array of communication scheduling configurations. Moreover, it lacks the flexibility to adapt to diverse communication scenarios.
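The two ideas in this paragraph, parity-check validity and a thresholded soft metric, can be illustrated with a toy code. The sketch below uses a Hamming(7,4) code purely as a small stand-in (it is not one of the 5G NR small block-length codes), a brute-force max-correlation decoder, and an arbitrary threshold; the names, the metric, and the example signals are assumptions for illustration.

```python
import itertools
import numpy as np

# Systematic Hamming(7,4) code used purely as a small stand-in example.
P = np.array([[1, 1, 0], [1, 0, 1], [0, 1, 1], [1, 1, 1]])
G = np.hstack([np.eye(4, dtype=int), P])      # generator matrix [I | P]
H = np.hstack([P.T, np.eye(3, dtype=int)])    # parity-check matrix [P^T | I]

messages = np.array(list(itertools.product([0, 1], repeat=4)))
codebook = messages @ G % 2                   # all 16 valid codewords

def is_codeword(bits):
    """A word is valid when every parity-check equation sums to zero mod 2."""
    return not np.any(H @ np.asarray(bits) % 2)

def decode_with_metric(received):
    """Max-correlation decoding; the metric is the mean matched amplitude per bit."""
    symbols = 1 - 2 * codebook                # BPSK mapping: 0 -> +1, 1 -> -1
    correlations = symbols @ np.asarray(received, dtype=float)
    best = int(np.argmax(correlations))
    return codebook[best], float(correlations[best] / codebook.shape[1])

THRESHOLD = 0.5  # illustrative only; in practice it depends on the configuration

sent = np.array([1, 0, 1, 1, 0, 1, 0])
rx_signal = (1 - 2 * sent) + np.array([0.2, -0.1, 0.3, -0.2, 0.1, 0.1, -0.3])
rx_noise_only = np.array([0.1, -0.2, 0.05, 0.15, -0.1, 0.2, -0.05])  # DTX-like input

word_a, metric_a = decode_with_metric(rx_signal)
word_b, metric_b = decode_with_metric(rx_noise_only)
print(is_codeword(word_a), metric_a >= THRESHOLD)
print(is_codeword(word_b), metric_b >= THRESHOLD)
```

The decoder returns a valid codeword even when nothing was transmitted, so the parity-check equations alone cannot flag the noise-only case. Only the soft metric separates the two inputs, and the threshold that does so would have to be re-tuned for each code, block length, and operating SNR.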

Figure 8. An illustration of the AI-based approach for solving the signal validation problem

To overcome these limitations, we propose an innovative framework [2] that combines domain knowledge in channel coding with emerging AI/ML methodologies, as shown in Figure 8. This hybrid approach addresses challenges that conventional bounded distance decoding cannot resolve. The solution introduces a novel metric, termed discrepancy, which quantifies the disparity between the decoded result and the received signal. By leveraging this metric, an AI-driven binary classification framework is developed to determine the validity of decoding results. The key features of the proposed framework include:

When applied to small block-length coding schemes in 5G NR that exclude CRC concatenation, the proposed method demonstrates significant improvements in the precision-recall tradeoff for determining the underlying transmission scenario. Our evaluation focuses on the tradeoff between the false positive rate (FPR) and the false negative rate (FNR), derived from confusion matrices for each trained model. The binary classifier outputs a score between 0 and 1, allowing dynamic adjustments to the tradeoff by modifying the score threshold.
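The effect of moving the score threshold can be sketched as follows. The scores and labels are made-up values, and treating "valid transmission" as the positive class is an assumption made for this illustration.

```python
import numpy as np

def fpr_fnr(scores, labels, threshold):
    """False positive and false negative rates at a given score threshold."""
    scores = np.asarray(scores, dtype=float)
    labels = np.asarray(labels, dtype=bool)      # True = valid transmission (assumed)
    predicted = scores >= threshold              # classifier decision
    false_pos = np.sum(predicted & ~labels)      # invalid input accepted as valid
    false_neg = np.sum(~predicted & labels)      # valid input rejected
    fpr = false_pos / max(np.sum(~labels), 1)
    fnr = false_neg / max(np.sum(labels), 1)
    return float(fpr), float(fnr)

# Hypothetical classifier scores for four invalid and four valid receptions.
scores = [0.05, 0.20, 0.40, 0.60, 0.55, 0.70, 0.90, 0.95]
labels = [0, 0, 0, 0, 1, 1, 1, 1]

for threshold in (0.3, 0.5, 0.8):
    fpr, fnr = fpr_fnr(scores, labels, threshold)
    print(f"threshold={threshold:.1f}  FPR={fpr:.2f}  FNR={fnr:.2f}")
```

Raising the threshold lowers the FPR at the cost of a higher FNR, so sweeping it over the interval from 0 to 1 traces out the tradeoff curve for a trained model.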

Figure 9. Performance of AI classifier for signal validation problem

As illustrated in Figure 9, the proposed AI-based binary classification model consistently outperforms conventional gradient boosting models. The results show a tenfold reduction in both FPR and FNR, underscoring the effectiveness of the AI-driven approach in achieving reliable signal validation under diverse communication scenarios.

These results demonstrate the transformative potential of AI-driven classification techniques in addressing long-standing challenges in signal validation. By providing a scalable and flexible solution, this approach paves the way for more reliable and efficient operations in next-generation cellular networks.

4. Conclusion

This article introduced AI-based techniques for enhancing both downlink and uplink performance in wireless communication systems. For downlink transmission, an AI-based channel prediction method was discussed, focusing on supporting scheduling and beamforming. In uplink transmission, AI was applied not only to enhance DMRS-based channel estimation but also to build environment-adaptive channel models and improve signal validation through anomaly detection. Simulation results confirmed that AI-powered solutions offer significant performance gains in terms of estimation accuracy, reliability, and computational efficiency. The discussed methods demonstrate the potential of AI as a key enabler for future wireless communication systems, driving improvements in throughput, reliability, and adaptability.

References

[1] Y. Li, Y. Hu, K. Min, H. Park, H. Yang, T. Wang, J. Sung, J.-Y. Seol, and C. J. Zhang, “Artificial intelligence augmentation for channel state information in 5G and 6G,” IEEE Wireless Communications, vol. 30, no. 1, pp. 104-110, Feb. 2023.

[2] M. Jang, D. Bahn, J. Lee, J. W. Kang, S.-H. Kim, and K. Yang, “Learning of discrepancy to validate decoding results with error-correcting codes,” Proc. IEEE Int. Conf. Commun. (ICC), June 2024.