6G AI/ML for Physical-layer: Part I - General Views

Introduction

Figure 1. AI enabled modem

For decades, wireless communication problems have been described by statistical models. Consequently, the physical layer of wireless communication systems has relied on linear signal processing techniques. Channel estimation methods such as Least Squares (LS) and Linear Minimum Mean Square Error (LMMSE), linear equalizers, and precoding techniques like Zero-Forcing (ZF) have provided a reliable foundation for 3G, 4G, and early 5G systems. Where the underlying statistical assumptions hold, such linear techniques offer low complexity and near-optimum performance.
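To make the LS and LMMSE baselines concrete, here is a minimal sketch for a single flat-fading channel tap. The setup is our assumption for illustration, not something specified in this article: unit-power pilots, a channel drawn as h ~ CN(0, 1), complex Gaussian noise, and known channel and noise variances. The function name `simulate_channel_estimation` is hypothetical.

```python
import random

def simulate_channel_estimation(num_pilots=20000, snr_db=0.0, seed=0):
    """Compare LS and scalar LMMSE estimates of one flat Rayleigh channel tap.

    Assumptions (illustrative only): unit-power pilots, h ~ CN(0, 1),
    noise ~ CN(0, noise_var), one independent channel draw per pilot.
    Returns (ls_mse, lmmse_mse).
    """
    rng = random.Random(seed)
    channel_var = 1.0
    noise_var = 10 ** (-snr_db / 10)  # noise variance for unit signal power
    # Scalar LMMSE shrinks the LS estimate toward the prior mean (zero).
    shrink = channel_var / (channel_var + noise_var)

    def complex_gaussian(var):
        std = (var / 2) ** 0.5
        return complex(rng.gauss(0, std), rng.gauss(0, std))

    ls_err = lmmse_err = 0.0
    for _ in range(num_pilots):
        h = complex_gaussian(channel_var)
        x = 1 + 0j                          # known pilot symbol
        y = h * x + complex_gaussian(noise_var)
        h_ls = y / x                        # least squares estimate
        h_lmmse = shrink * h_ls             # linear MMSE estimate
        ls_err += abs(h - h_ls) ** 2
        lmmse_err += abs(h - h_lmmse) ** 2
    return ls_err / num_pilots, lmmse_err / num_pilots

ls_mse, lmmse_mse = simulate_channel_estimation()
print(f"LS MSE: {ls_mse:.3f}, LMMSE MSE: {lmmse_mse:.3f}")
```

Under these assumptions the LS error equals the noise variance, while the LMMSE error is σ_h²σ_n² / (σ_h² + σ_n²), so at 0 dB the two simulated values should land near 1.0 and 0.5. The LMMSE gain depends entirely on knowing the channel and noise statistics, which is exactly the kind of prior model the rest of this post questions.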

However, the upcoming 6G landscape introduces scenarios that challenge the very assumptions underlying such statistical models:

Why data-driven learning in wireless communication?

The above limitations demonstrate the need for a more flexible, learning-based physical layer. Here, artificial intelligence (AI) and machine learning (ML) solutions are attractive because they do not require a priori statistical models. Instead, AI-based solutions learn the intrinsic features and specific nuances of each context directly from data. In this regard, wireless communication systems offer a unique opportunity for the proliferation of AI/ML solutions, as both the network and user equipment (UEs) naturally collect a large volume of training data through measurements. Traditional solutions may still serve as baselines, but they must now coexist with, or gradually be replaced by, self-intelligent alternatives that are capable of real-time adaptation.

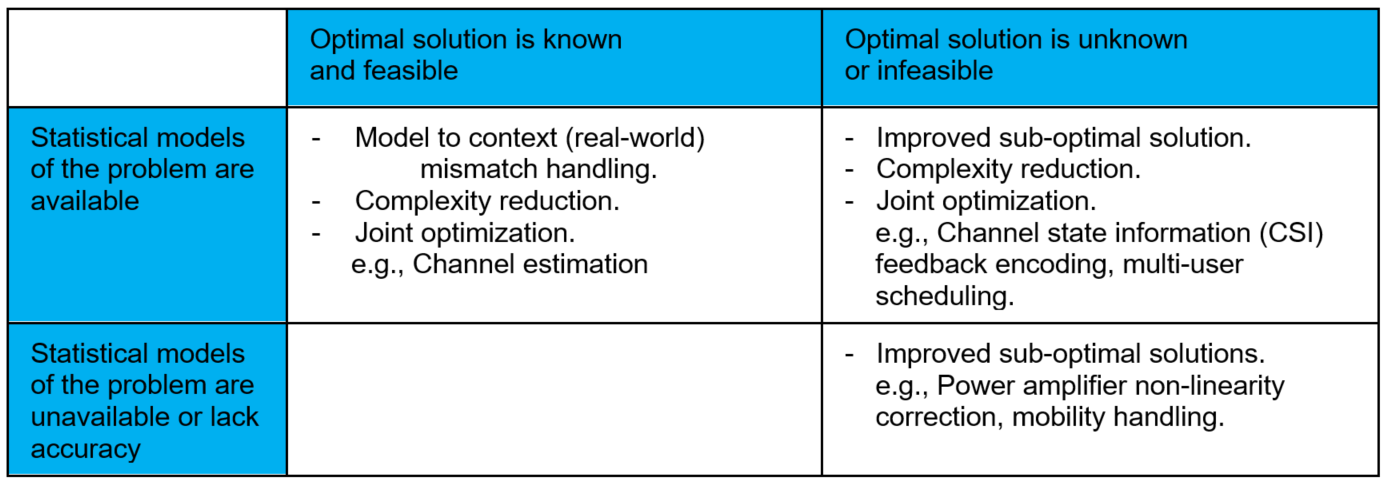

Table 1. The potential role of AI/ML in improving wireless communication systems

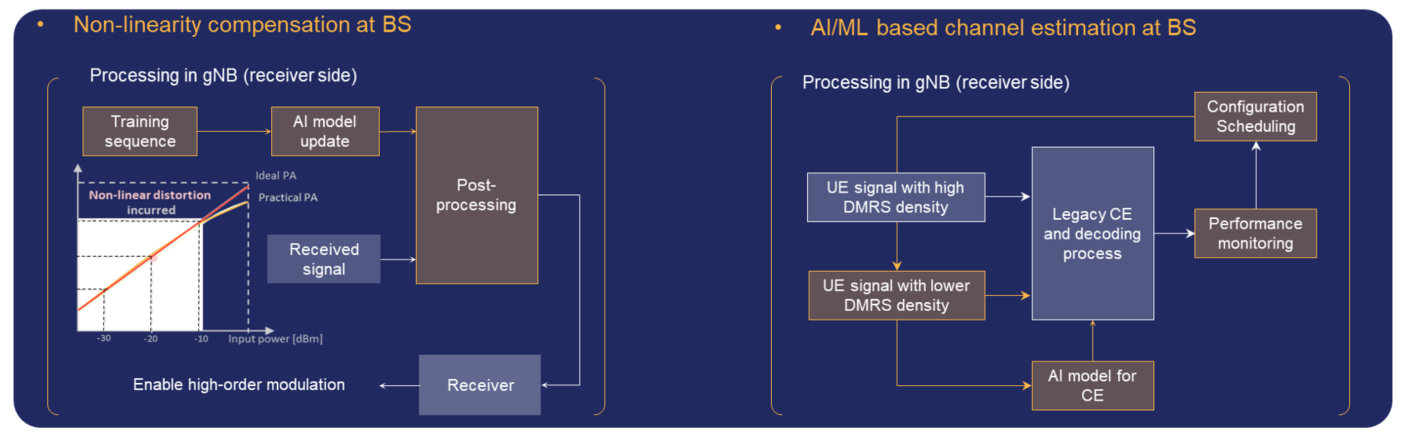

Table 1 summarizes the potential roles of AI/ML in improving wireless communication systems. In wireless communication problems that are well characterized by generic statistical models, AI/ML solutions provide improved performance or reduced complexity through context-aware adaptation and joint optimization. When statistical models are unavailable or insufficiently accurate, AI/ML approaches enable practical sub-optimal solutions through data-driven learning. For example, UE power amplifier non-linearities are highly implementation-specific, which hinders unified statistical modeling.

From 5G to Now

Within 3GPP, the application of AI/ML in the physical layer is a relatively recent endeavor. While early 5G releases explored AI/ML in network management and RAN optimization, the physical layer (PHY) remained untouched until recently because of its stringent latency, complexity, and interpretability requirements.

Starting in Release 18, this began to change. As AI/ML becomes an integral part of the physical layer, it is important to understand the different ways AI/ML models can be deployed between the network and the user device. Based on the 3GPP study, three primary approaches are discussed: two-sided, network-sided, and UE-sided models. Each offers a different trade-off between performance, complexity, and implementation flexibility.

In a two-sided AI/ML model, the data-driven solution is split across the network and the UE for joint optimization. This end-to-end approach allows the network and the UE to collaboratively perform tasks such as channel feedback encoding and decoding. In contrast, a network-sided AI/ML model places the data-driven solution at the base station, and the UE behaves as a traditional device without any AI/ML inference or model awareness. In a UE-sided AI/ML model, the AI/ML model is embedded in the UE, which can make self-intelligent inferences locally. The network-sided and UE-sided models are the two sub-categories of the one-sided model.
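The two-sided split can be sketched with a toy encoder at the UE and a matching decoder at the network. This is only an illustration of where each part runs and what crosses the air interface: a fixed linear projection onto a shared DFT basis stands in for the trained neural networks, and the class names `UeEncoder` and `NetworkDecoder`, the 8-antenna setup, and the chosen basis indices are all hypothetical.

```python
import cmath

def dft_vector(n, k):
    """Unit-norm DFT vector of length n with spatial frequency index k."""
    return [cmath.exp(2j * cmath.pi * k * m / n) / n ** 0.5 for m in range(n)]

class UeEncoder:
    """UE part: compresses a channel vector into a short latent feedback message."""
    def __init__(self, basis):
        self.basis = basis

    def encode(self, channel):
        # One latent coefficient per basis vector: c_k = b_k^H h
        return [sum(b.conjugate() * h for b, h in zip(vec, channel))
                for vec in self.basis]

class NetworkDecoder:
    """Network part: reconstructs the channel from the reported latent."""
    def __init__(self, basis):
        self.basis = basis

    def decode(self, latent):
        length = len(self.basis[0])
        return [sum(c * vec[m] for c, vec in zip(latent, self.basis))
                for m in range(length)]

# Both sides must agree on the same mapping; here that is a shared basis.
NUM_ANTENNAS = 8
shared_basis = [dft_vector(NUM_ANTENNAS, k) for k in (1, 3)]
encoder, decoder = UeEncoder(shared_basis), NetworkDecoder(shared_basis)

# Toy channel that lies exactly in the span of the shared basis.
channel = [2 * a + (0.5 - 1j) * b for a, b in zip(*shared_basis)]
latent = encoder.encode(channel)         # 2 complex values fed back instead of 8
reconstruction = decoder.decode(latent)
max_error = max(abs(r - h) for r, h in zip(reconstruction, channel))
```

Because the toy channel lies in the span of the shared basis, the reconstruction is exact up to floating-point error. A real channel would not, which is why the encoder and decoder are trained jointly in practice. The point of the sketch is the dependency: the decoder is only useful if it matches the encoder, and that is the interoperability question a two-sided model raises.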

Figure 2. Operation scenarios for AI/ML in physical-layer

The RAN1 group has initiated studies and undertaken specification work on AI/ML models for physical-layer functions such as:

6G will be the first standard for real AI-based Physical-layer

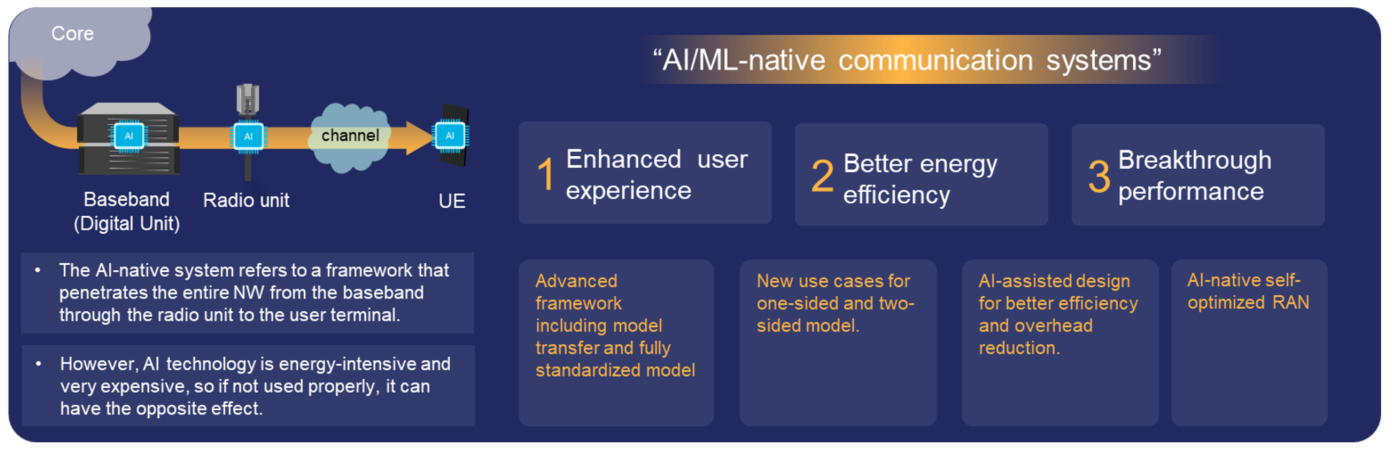

In 6G, AI/ML is not merely an auxiliary optimization tool—it is being positioned as a core enabler of the radio interface itself. The evolving framework reflects a vision where data-driven intelligence is deeply integrated into the fabric of the wireless protocol stack, spanning both the network and the device. Below is a synthesis of the envisioned direction and structural elements for 6G AI/ML.

Figure 3. Vision of AI/ML in 6G

AI-Native RAN Architecture

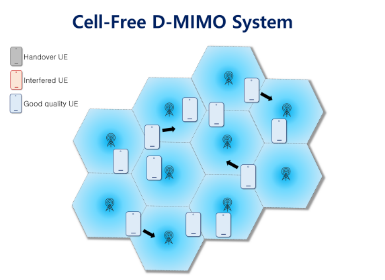

The concept of an AI-native, self-optimized RAN is central to the 6G vision, leveraging neural networks, federated learning, and predictive analytics to transcend traditional network paradigms. This entails AI not merely assisting radio resource management (RRM) or scheduling but actively replacing or enhancing core PHY/MAC functions, such as MIMO operation, beamforming control, and link adaptation, through real-time inference and data-driven decision-making. The system must achieve autonomous self-learning, dynamic self-configuration (e.g., via self-organizing networks (SON) and zero-touch network management), and resilient self-healing across protocol layers. This integration positions AI as a foundational component, enabling end-to-end network orchestration and overcoming conventional air interface limitations through AI/ML-driven innovations like adaptive modulation, non-linear signal processing, and cognitive radio techniques.

Framework for 6G AI/ML

6G advances AI integration in wireless networks by refining deployment paradigms introduced in 5G. Two primary classes, one-sided and two-sided models, will be the baseline. Rather than rigid classifications, a spectrum of architectures is emerging that leverages model transfer techniques for adaptable and interoperable AI solutions. These approaches build upon distributed processing capabilities that facilitate the seamless exchange and adaptation of models between network infrastructure (including edge locations) and user equipment (UE).

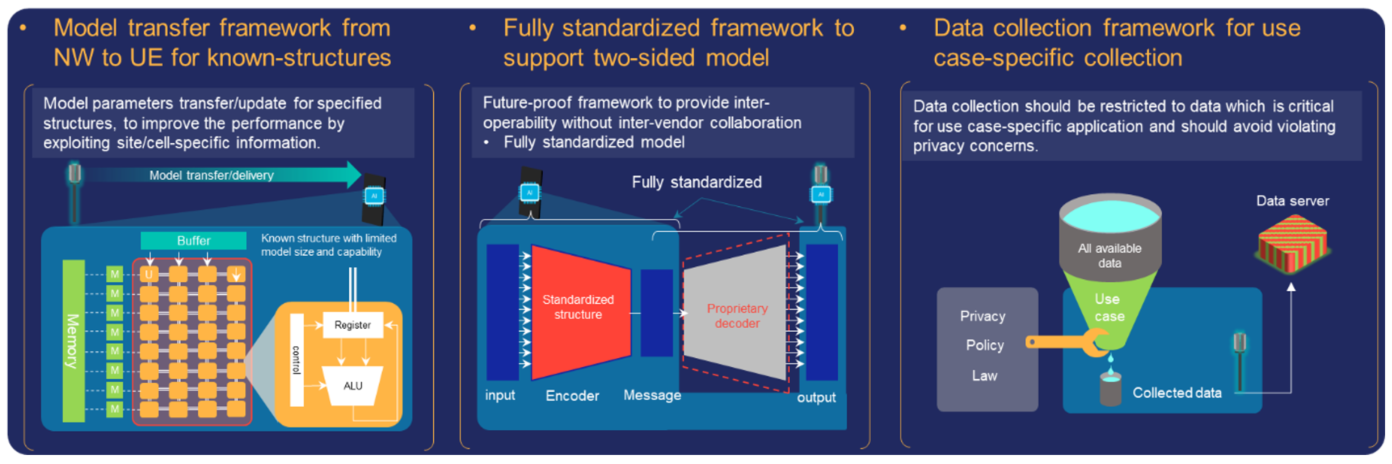

The 6G AI/ML framework is envisioned to be far more evolved than its 5G NR counterpart. For example, model transfer with a specified model structure may enable site/cell-specific models at the UE. Having been optimized for the specific features of a given cell or site, such localized models may provide better performance or lower complexity than generic models. In particular, the UE side (or UE part) of a model can be partitioned into a fixed part, e.g., a fully specified reference model, and an adaptable part consisting of adaptation layers. The network may then transfer model parameters for the adaptation layers only. Transferring parameters for a limited set of adaptation layers, rather than the full model, mitigates the high radio overhead and the runtime errors that would otherwise necessitate offline optimization by the UE-side vendor. Note that the transfer of cell/site-specific basis or projection matrices can be regarded as linear adaptation, which parallels model parameter transfer, its non-linear counterpart. This framework can be considered for tasks like CSI compression, where explicit feedback is optimized to significantly reduce overhead compared to conventional codebook-based feedback methods.
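The overhead argument for transferring only the adaptation layers can be checked with simple parameter counting. The layer sizes and the 8-bit parameter format below are hypothetical numbers chosen for illustration. They are not taken from any 3GPP agreement or from a real model.

```python
def dense_param_count(layer_sizes):
    """Number of weights and biases in a stack of fully connected layers."""
    return sum(n_in * n_out + n_out
               for n_in, n_out in zip(layer_sizes, layer_sizes[1:]))

# Hypothetical UE-side model: a fixed backbone followed by one adaptation layer.
FIXED_BACKBONE = [256, 512, 512, 128]   # specified once, never sent over the air
ADAPTATION_LAYERS = [128, 64]           # cell/site-specific, transferred by the network
BITS_PER_PARAM = 8                      # assumed quantized parameter format

full_model = FIXED_BACKBONE + ADAPTATION_LAYERS[1:]

full_params = dense_param_count(full_model)
adapt_params = dense_param_count(ADAPTATION_LAYERS)

full_bits = full_params * BITS_PER_PARAM
adapt_bits = adapt_params * BITS_PER_PARAM
ratio = adapt_params / full_params

print(f"full model: {full_params} params, {full_bits} bits")
print(f"adaptation only: {adapt_params} params, {adapt_bits} bits")
print(f"share of full payload: {ratio:.1%}")
```

With these made-up sizes, the adaptation layer holds 8,256 of the 468,160 parameters, so a per-cell update costs under 2% of a full model transfer. The actual saving depends on how the fixed and adaptable parts are partitioned.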

For the two-sided model introduced in 5G, standardization becomes paramount for practical implementation in 6G. To accommodate diverse hardware capabilities and ensure interoperability without sacrificing performance, standardized reference models are necessary. A standardized reference model provides common guidance on the input-output mapping. This enables vendors to train interoperable models that can also be optimized to each vendor's preferences. Vendors may use collected field data to optimize their models with respect to additional conditions, e.g., deployment scenarios and antenna layout, and/or model implementation aspects, e.g., complexity and model backbone. As an example, lower complexity is often achieved through techniques like knowledge distillation, quantization, and pruning. Standardizing such common reference models is also crucial for seamless interoperability and integration among vendors whose implementations contain different proprietary components. Furthermore, meeting minimum performance requirements across various UE and network implementations requires a baseline level of consistency enforced by standardized models, ultimately enabling widespread deployment and maximizing the benefits of collaborative AI/ML within the 6G ecosystem.

Optionally, network vendors may share information on vendor-specific reference models, e.g., through approaches based on sharing encoder parameters or datasets. In these approaches, the network is free to initialize the training process without the constraints of the predefined mapping imposed by the reference model. However, they require the UE to implement multiple encoders to interoperate with multiple network vendors.
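Two of the complexity-reduction techniques named above, pruning and quantization, are easy to sketch on a flat list of weights. This is a minimal illustration under our own assumptions (magnitude-based pruning and symmetric uniform quantization). It is not a description of how any vendor actually compresses a model, and the weight values are made up.

```python
def magnitude_prune(weights, sparsity):
    """Zero out the fraction `sparsity` of weights with the smallest magnitude."""
    num_pruned = int(len(weights) * sparsity)
    if num_pruned == 0:
        return list(weights)
    order = sorted(range(len(weights)), key=lambda i: abs(weights[i]))
    pruned = set(order[:num_pruned])
    return [0.0 if i in pruned else w for i, w in enumerate(weights)]

def quantize_uniform(weights, num_bits):
    """Symmetric uniform quantization; returns integer codes and the step size."""
    max_abs = max(abs(w) for w in weights)
    levels = 2 ** (num_bits - 1) - 1
    scale = max_abs / levels if max_abs > 0 else 1.0
    codes = [round(w / scale) for w in weights]
    return codes, scale

def dequantize(codes, scale):
    """Map integer codes back to approximate floating-point weights."""
    return [c * scale for c in codes]

# Hypothetical weights of one small layer.
weights = [0.5, -0.05, 0.8, 0.01, -1.0, 0.3]

sparse = magnitude_prune(weights, sparsity=0.5)    # keep the 3 largest magnitudes
codes, scale = quantize_uniform(sparse, num_bits=8)
restored = dequantize(codes, scale)
max_error = max(abs(r - s) for r, s in zip(restored, sparse))
```

After pruning, half of the weights are zero and can be skipped or stored sparsely. After quantization, each remaining weight needs 8 bits, and the reconstruction error is bounded by half a quantization step. Whether the resulting model still meets a minimum performance requirement has to be verified separately.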

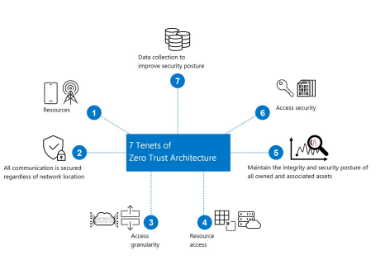

Data Collection with Privacy Constraints

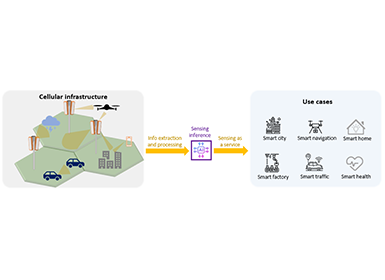

AI models require real-world data, but 6G must respect privacy, legal, and policy frameworks. Thus, only use-case-specific, purpose-limited data should be collected. Sensitive user data must remain protected, possibly through federated learning or edge-based inference. A common data structure is proposed to support AI, sensing, quality of experience (QoE) tracking, and traceability within one cohesive Minimization of Drive Tests (MDT) framework.
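To show how federated learning keeps raw data on the device, here is a minimal federated-averaging sketch. Everything in it is an illustrative assumption: the model is a one-dimensional linear regression, the two clients hold noise-free toy data from y = 2x + 1 over different input ranges, and the learning rate, step count, and round count are arbitrary.

```python
def local_update(weights, data, lr=0.3, steps=5):
    """A few full-batch gradient steps on local data for the model y = w*x + b."""
    w, b = weights
    n = len(data)
    for _ in range(steps):
        grad_w = sum(2 * (w * x + b - y) * x for x, y in data) / n
        grad_b = sum(2 * (w * x + b - y) for x, y in data) / n
        w, b = w - lr * grad_w, b - lr * grad_b
    return w, b

def federated_average(updates, sizes):
    """Server step: average client models, weighted by local dataset size."""
    total = sum(sizes)
    w = sum(u[0] * n for u, n in zip(updates, sizes)) / total
    b = sum(u[1] * n for u, n in zip(updates, sizes)) / total
    return w, b

def run_fedavg(client_datasets, rounds=200):
    """Only model parameters are exchanged; raw samples never leave a client."""
    global_weights = (0.0, 0.0)
    sizes = [len(d) for d in client_datasets]
    for _ in range(rounds):
        updates = [local_update(global_weights, d) for d in client_datasets]
        global_weights = federated_average(updates, sizes)
    return global_weights

# Two hypothetical devices observing different input ranges of y = 2x + 1.
clients = [
    [(x, 2 * x + 1) for x in (0.0, 0.2, 0.4)],
    [(x, 2 * x + 1) for x in (0.6, 0.8, 1.0)],
]
w, b = run_fedavg(clients)
print(f"w = {w:.4f}, b = {b:.4f}")
```

In this toy setting the averaged model converges toward w = 2 and b = 1 even though neither client shares a single measurement. Note that exchanging parameters does not by itself guarantee privacy, so purpose limitation and the other safeguards discussed here still apply.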

To successfully embed AI into the radio stack, 6G design should pursue the following principles: moving AI from a peripheral tool to a core part of the radio interface logic; combining model-based and data-driven paradigms; supporting lightweight, adaptive, and distributed AI models; and enabling hybrid operation, with AI-inferred decisions where possible and traditional signaling where needed.

This direction signals a profound shift from deterministic, rule-based system design toward context-based, self-evolving network intelligence.

Figure 4. Design principles for 6G AI/ML

6G use cases for AI/ML

Two-sided model use cases: In 6G, the two-sided AI model refers to a collaborative framework where both the network and the UE operate on a shared AI-trained representation—most notably for tasks like CSI feedback and precoding. This coordinated structure enables highly efficient compression and improved accuracy compared to traditional signaling methods. The following are the key use cases:

NW-side model use cases: These refer to scenarios where the data-driven intelligence is located entirely at the network, without requiring any AI model coordination or execution at the UE. These models are particularly attractive because they reduce device complexity and power consumption while still enabling advanced PHY-layer functionalities through intelligent inference at the network side. Here, network-side data collection simplifies site/cell-specific deployment, unlike UE-side models that require frequent model switching due to user mobility. Some of the envisioned use cases for NW-side models are outlined below:

UE-sided model use cases: UE-sided AI models refer to cases where intelligence is embedded in the UE, thereby enabling local inference and decision-making based on the UE's conditions. One advantage of UE-side inference is the UE's access to high-resolution measurements from downlink reference resources, which are less constrained by coverage limitations and quantization error. Additional key use cases are presented below:

AI-receiver: the physical layer must handle increasingly complex and non-linear environments, where traditional receivers such as LMMSE and MMSE-SIC reach their performance limits. The AI receiver emerges as a key innovation to overcome these challenges by leveraging deep learning models to enhance signal detection and channel estimation in difficult scenarios.

Specifically, AI receivers are designed to:

In the follow-up parts of this blog series, we will elaborate on the use cases we hope to see in 6G.

Conclusion

The paradigm motivated by statistical modeling of the wireless communication system has been a faithful companion in its evolution, but it is approaching its practical limits. As 6G aims to support ultra-dense networks, massive-scale antenna arrays, extreme mobility, and integrated sensing, a new foundation is needed.

AI/ML offers a path forward by enabling a physical layer that is context-aware, predictive, and adaptive through data-driven learning. This transformation is already underway in 3GPP, where physical layer studies are now incorporating deep learning models into critical measurement, feedback, and estimation procedures. To realize this vision, a paradigm shift is essential, not only in algorithm design but also in architecture, standardization of model interfaces, and development of lightweight inference engines. Perhaps most critically, it requires rethinking the role of the PHY as not just a signal processor but an intelligent agent in the network.

The 6G physical layer will no longer serve merely as a bit delivery engine; it will also learn, adapt, decide, and even “think”. That is the promise of AI-native PHY.