Samsung R&D Institute China-Nanjing Ranks 3rd at DCASE2025 Challenge

Samsung R&D Institute China-Nanjing (SRC-N) has once again secured a top ranking in the Detection and Classification of Acoustic Scenes and Events (DCASE) Challenge this year.

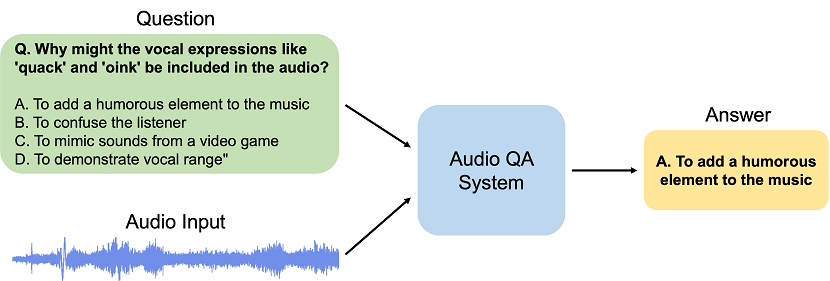

Organized by the Institute of Electrical and Electronics Engineers Audio and Acoustic Signal Processing (IEEE AASP), DCASE is a premier competition in the acoustics field that attracts hundreds of teams from industry and academia each year. This year's edition comprises six tasks, including the newest, Audio Question Answering (AQA). The challenge supports the development of computational scene and event analysis methods by comparing different approaches on common, publicly available datasets. Held annually, it marks milestones in the field's development and provides a reference point for current performance.

Following their success in the last two DCASE Challenges (placing second in Task 4B and third in Task 4A in 2023, and ranking first in Task 7 and third in Task 8 in 2024), the SRC-N developers took on a new challenge this year. The team won third place in the AQA task, further enhancing the research center’s technical expertise in AI-based acoustic signal processing.

The AQA task centers on advancing question-answering capabilities in the field of “interactive audio understanding,” covering both general acoustic events and knowledge-heavy sound information within a single track. It encourages participants to build systems that can accurately interpret and respond to complex audio-based questions. Such systems must understand multimodal input: the user's natural-language query as well as the audio signal, which carries a wide range of information and knowledge. The team submitted systems consisting of an audio encoder (Whisper v3), a language model (Qwen2/2.5), and a projector connecting the two. The systems were then post-trained on a large, carefully processed audio-text dataset (about 800,000 samples), applying different learning methods at different stages: supervised fine-tuning and reinforcement learning based on Group Relative Policy Optimization (GRPO).
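For readers unfamiliar with GRPO, the core idea can be sketched in a few lines: several candidate answers are sampled for the same question, each is scored, and every score is normalized against the mean and standard deviation of its own group. The sketch below is illustrative only and is not the team's implementation. The function names, the exact-match reward, and the example answers are assumptions made for this example; the article does not describe the reward actually used.

```python
from statistics import mean, pstdev


def exact_match_reward(predicted: str, reference: str) -> float:
    """Hypothetical reward: 1.0 if the answer matches the reference, else 0.0."""
    return 1.0 if predicted.strip().lower() == reference.strip().lower() else 0.0


def group_relative_advantages(rewards: list[float], eps: float = 1e-6) -> list[float]:
    """Normalize rewards within one group of answers sampled for the same question.

    Each advantage is (reward - group mean) / (group std + eps), so answers that
    beat the group average get a positive value and the rest a negative one.
    The population standard deviation is used here as a simplifying choice.
    """
    mu = mean(rewards)
    sigma = pstdev(rewards)
    return [(r - mu) / (sigma + eps) for r in rewards]


# Four hypothetical answers sampled for one multiple-choice audio question
# whose reference answer is assumed to be "B".
sampled_answers = ["B", "b ", "C", "A"]
rewards = [exact_match_reward(a, "B") for a in sampled_answers]
advantages = group_relative_advantages(rewards)

for answer, reward, advantage in zip(sampled_answers, rewards, advantages):
    print(f"{answer!r}: reward={reward:.1f}, advantage={advantage:+.3f}")
```

Because the advantages are computed relative to the group itself, this style of training does not need a separately trained value model to serve as a baseline. When every sampled answer receives the same reward, all advantages are zero and that group contributes no learning signal.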

The developers also reported a notable finding. They used two different large multimodal models (Qwen2-Audio and Qwen2.5-Omni) as base models to initialize the weights of the corresponding components, and observed that the fine-tuned systems based on Qwen2.5-Omni significantly outperformed those based on Qwen2-Audio. Notably, the organizers documented this finding in an “Advanced Study” section on the results page for easier reference and future research.

SRC-N’s AI Software Team aims to research and develop top-level AI technologies, utilize them on devices to offer product innovation, and create new value. AQA technology will be beneficial in smart applications, including audio-text and multimodal model evaluation, as well as the development of interactive audio-agent models, aligning perfectly with the team’s strategy. In the era of Agentic AI, the team believes this cutting-edge technology will enhance the user experience for AI agents. As such, SRC-N will actively pursue opportunities to implement this award-winning solution to provide brand-new AI experiences for the next generation of Samsung products.