Open Source

Embedded IoT 2021 - Intro of the research insight from the presentation based on LF Edge HomeEdge

Introduction

Every home is flooded with consumer electronic devices which have capability to connect to internet. These devices can be accessed from anywhere in the world and thus making the home smart. As per a survey the size of smart home market would be $6 Billion by 2022. As per Gartner report 80% of all the IoT projects to include AI as a major component.

In conventional cloud based setup following two things need to be considered with utmost importance:

Latency – As per study there is latency of 0.82ms for every 100 miles travelled by data

Data Privacy – Average total cost of data breach is $3.92 Million

With devices of heterogeneous hardware capabilities the processing which were traditionally performed at cloud are being moved to devices if possible as shown below.

These lead us to work towards developing an edge platform for smart home system. As an initial step the Edge Orchestration project was developed under the umbrella of LF Edge.

Before getting into the details of Edge orchestration, let us understand few terms commonly used in this.

Edge computing - a distributed computing paradigm that brings computation and data storage closer to the location where it is needed, to improve response times and save bandwidth

Devices : Our phones, tablets, smart TVs and so that are now a part of our homes

Inferencing - act or process of reaching a conclusion from known facts.

ML perspective - act of taking decisions / giving predictions / aiding the user based on a pre trained model.

Home Edge – Target

Home Edge project defines uses cases, technical requirements for smart home platform. The project aims to develop and maintain features and APIs catering to the above needs in a manner of open source collaboration. The minimum viable features of the platform are below:

Dynamic device/service discovery at “Home Edge”

Service Offloading

Quality of Service guarantee in various dynamic conditions

Distributed Machine Learning

Multi-vendor Interoperability

User privacy

Edge Orchestration targets to achieve easy connection and efficient resource utilization among Edge devices. Currently REST based device discovery and service offloading is supported. Score is calculated based on resource information (CPU/Memory/Network/Context) by all the edge devices. The score is used for selecting the device for service offloading when more than one device offer same service. Home Edge also supports Multi Edge Communication for multi NAT device discovery.

Problem Statement

As mentioned above the service offloading is based on score of all the devices. Score is in-turn is calculated using CPU/Memory/Network/Context. These values are populated in the db initially when home edge is installed in the device. And hence these are more static values.

Static Score is a function of

Number of CPU cores in a machine

Bandwidth of each CPU core

Network bandwidth

Round trip time in communication between devices

For example say at time t1, device B has available memory ‘X’ and hence this value is stored in db. Say at time t2 device A requests device B for score and at t2 the available memory in device B is ‘X-Y’. But the score is calculated using ‘X’ which has been stored initially.

Say in another scenario device A requests device B for service offloading. Device B starts executing the service. But say in the mid of execution moves away from the network. In such cases it would be unsuccessful offloading.

We could see in the above situations that the main purpose of Home Edge is gone for a task.

And these lead to the proposal of dynamic score calculation which is more effective and efficient.

Properties of a home based Distributed Inferencing System

We propose an effective method for score calculation. The proposed method for score calculation is based on following points:

1. Model Specific Device Selection

2. Efficient Data Distribution

3. Efficient Churn Prediction

4. Non Rigid and Self Improving

Based on the above four properties we defined a feedback based system that can help us achieve optimal data distribution for parallel inferencing in a heterogeneous system.

High Level System

Following diagram shows the high level architecture of the system. Let us take a use case and see the flow of tasks. For example when a user queries to Speaker “Let me know is any visitors today?”. The User query is indigested by the query manager to understand the query. The user query is to identify the number of visitors who visited today. The Data Source needs to be identified for query execution say the Doorbell Camera/Backyard CCTV. Then the model required for this purpose is identified and the devices which have the service (model). Based on the past performance, static score, trust of the device and number times previously the model has ran on the device, the devices are selected. Priority of the devices are calculated and based on the same the data is split among the devices. The result from the devices are aggregated to calculate the final output.

Understanding Score

Total Score is a function of Static score and Memory usage of the device

Changes in Score can help us predict estimated runtimes at the current time

Understanding Time Estimates

Time Estimates are calculated based on previous time records, average and current score

Data Points which are stored :

1. Average Score

2. Average time taken per inference

3. Number of times a model has been deployed on a device

4. Success probability of a model running on a device – Trust

Estimating runtime on Devices

Runtime estimation is done post sufficient data points being collected in the system

Delta Factor - A liner relationship factor to approximate the change in runtimes when score changes is calculated

Estimated time - using the Delta factor, Average Score and Average Runtime and the Current score of the device

Understanding Churn Probability Estimation

Logical buckets for every 5 minute frames is created to know the availability of devices

In case a bucket is polling a device for nth time and the was available k times before the new probability would be

1. (K+1)/(n+1), if device is available

2. K/(n+1), if device unavailable

Calculating Priority

Post estimation of runtimes, The probability of devices moving out of the system are checked and devices whose probability falls low are not kept in the consideration list

All devices in the consideration list are sorted based on the product of the trust and inverse of runtimes

Top K devices out of N are selected and data is distributed among them

Data Distribution

Data on the devices is distributed in the inverse ratio of the estimated runtimes to minimize the total time the system takes to make the complete inference

Feedback

Post execution the actual runtimes and scores of the devices are used to adjust the average in the databases

In case of failure next device is selected and the trust value of the device is adjusted in database

Test Run on Simulator

Aim – To demonstrate heuristic based data distribution among devices

To Test – Note the devices that work best for a model, checking the selection irrespective of device scores

Web based simulator was developed to demonstrate the proposed method. Following is a sample screenshot of the simulator

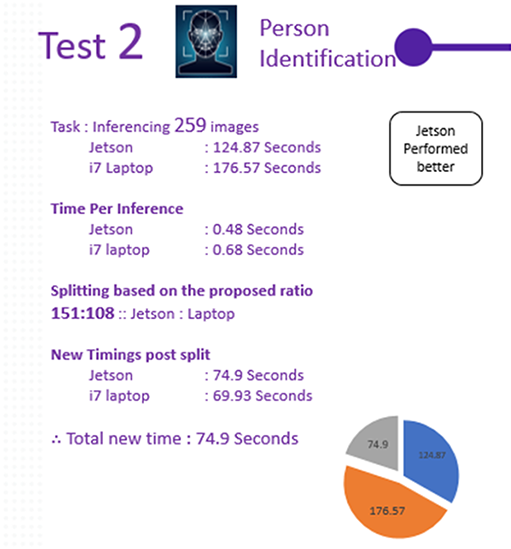

In the due course of our work we also found few models execute better in specific devices. To check up on this we had run two use case – Camera Anomaly detection and Person identification. And we found that the models used in both the scenarios gave better execution results in different devices as shown below.